Today we are finishing our latest series by taking a look at the Supermicro SYS-210GP-DNR, a 2U, 2-node 6 GPU system that Patrick recently got some hands-on time with at Supermicro headquarters. This 2U server contains two server nodes, each of which is equipped with a single Intel Ice Lake CPU and up to three high-end GPUs. This provides a very GPU-dense setup utilizing more traditional PCIe GPUs than something like the Redstone system we looked at previously.

Video Version

This is part of our visit to the Supermicro HQ series, similar to our recent coverage of the Supermicro Hyper-E 2U, SYS-220GQ-TNAR+, and the Ice Lake BigTwin.

We are going to have more detail in this article, but want to provide the option to listen. As a quick note, Supermicro allowed us to film the video at HQ, provided the systems in their demo room, and helped with travel costs to go do this series. We did a whole series while there and are tagging this as sponsored. Patrick was able to pick the products we would look at and have editorial control of the pieces (nobody is reviewing these pieces outside of STH before they go live either.) In full transparency, this was the only way to get something like this done, including looking at a number of products in one shot, without going to a trade show.

Supermicro SYS-210GP-DNR Overview

It is time now to get into the details of this unique server offering.

This front of the SYS-210GP-DNR looks almost like a mirror of the Redstone server; four high-speed fan modules and a quartet of 2.5″ drive bays, just arranged on different sides:

These extremely high output fans are responsible for essentially all the cooling in the system, so the large hot-swap fan modules are to be expected. These modules are very similar, but not identical, to those in Supermicro’s 2U Redstone server. This makes sense given the two systems can have relatively similar power footprints.

To the right of those fan modules is a quartet of 2.5″ U.2 NVMe drive bays. The Redstone server was only equipped with SATA bays for storage, so the total storage available on this platform is slightly higher, but is still not an area of primary focus for a system like this. With that said, since this chassis is home to two independent servers, each node gets two of those 2.5″ NVMe bays allocated to it.

Moving around to the rear of the server starts to give us an idea of what makes this platform special.

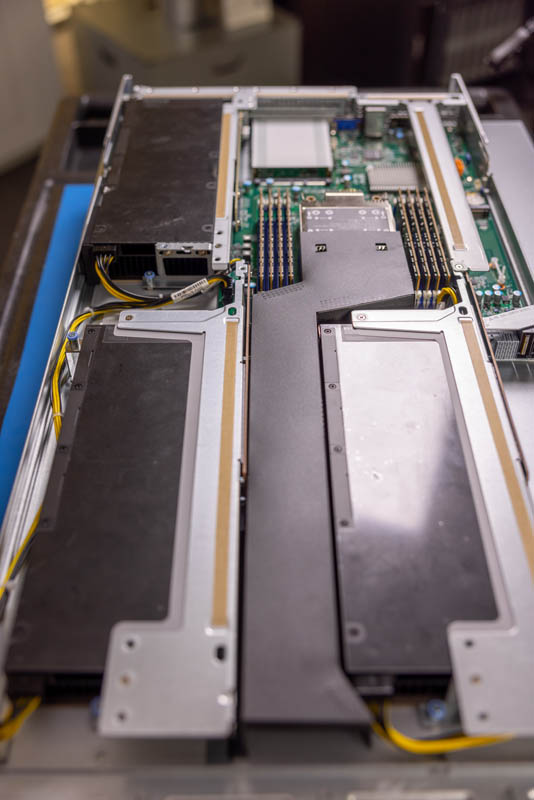

The rear of the SYS-210GP-DNR is home to two server modules, each of which occupies approximately 1U of the server.

For those wondering what makes this unique from standard 1U platforms, each of these nodes can be individually removed from the rear and function as independent servers. Cost savings in the context of comparing to 1U servers is significant because we have shared power supplies, fans, and chassis. One can also service the nodes more easily since they are fitted on trays.

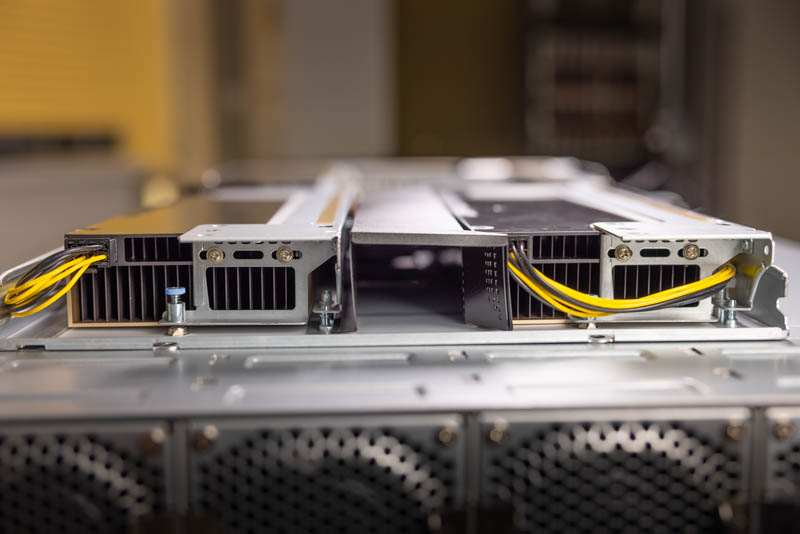

These power supplies are 2.6kW 80+ Titanium units that require 208V/240V power. Two CPUs and six GPUs can pull a lot of power, so these high wattage PSUs are to be expected. Thanks to more available horizontal space, these units are not quite as thin as we saw on the BigTwin 2U4N server.

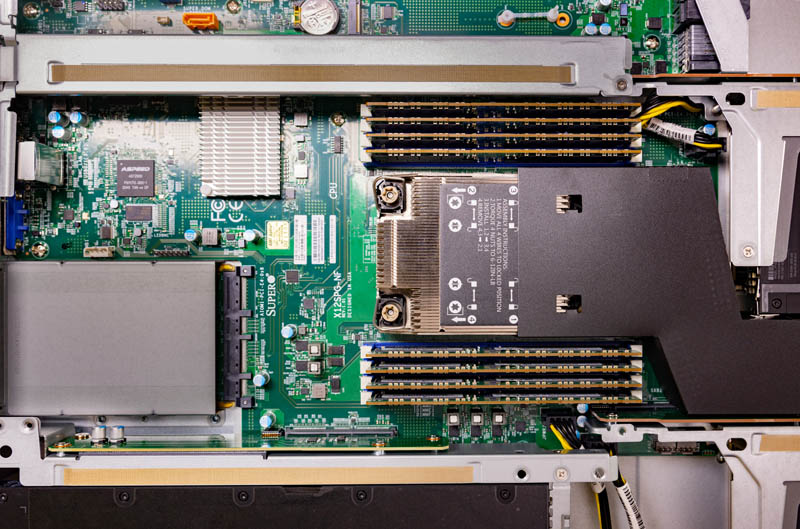

Each of the nodes on the SYS-210GP-DNR can support up to a single Intel 3rd Gen Xeon Scalable “Ice Lake” CPU at up to 40-cores and a 270W TDP. All 8 memory channels on the CPU are represented with the 8 memory slots.

The star of the show is the support for three full-height PCIe 4.0 x16 expansion slots. In our demo unit, these are populated by NVIDIA A100 cards, but this configuration could be modified for whatever type of PCIe GPUs or other PCIe attached accelerators that may be required. With two nodes each sporting three GPU slots, that is a total of six GPUs.

Supermicro has additional options for the GPUs so if one wanted to use something other than NVIDIA’s highest-end that can be accommodated as well.

Air from the high-speed front intakes is passed down through the chassis for cooling, with a small channel reserved specifically to bring fresh air to the CPU. The extra space to the left of the left GPU shown below helps mix fresh airflow as the two GPUs on the left side are slightly offset.

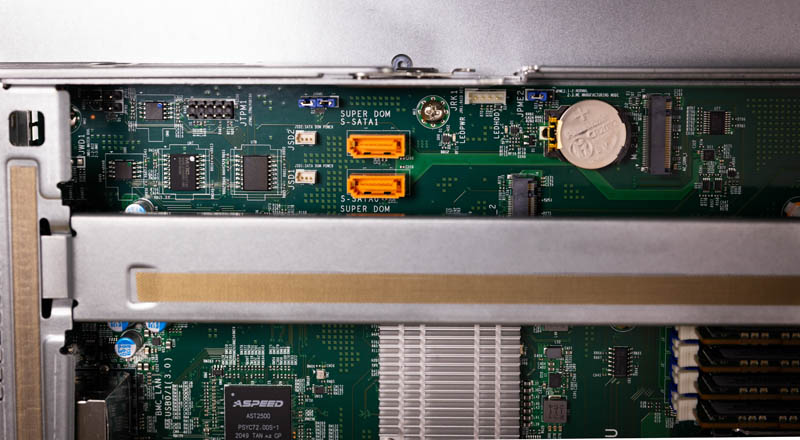

Along the other side of each node is some SATA ports supporting DOMs as well as M.2 slots supporting both NVMe and SATA. These allow you to have internal fixed boot media while saving the precious 2.5″ U.2 NVMe bays for general storage.

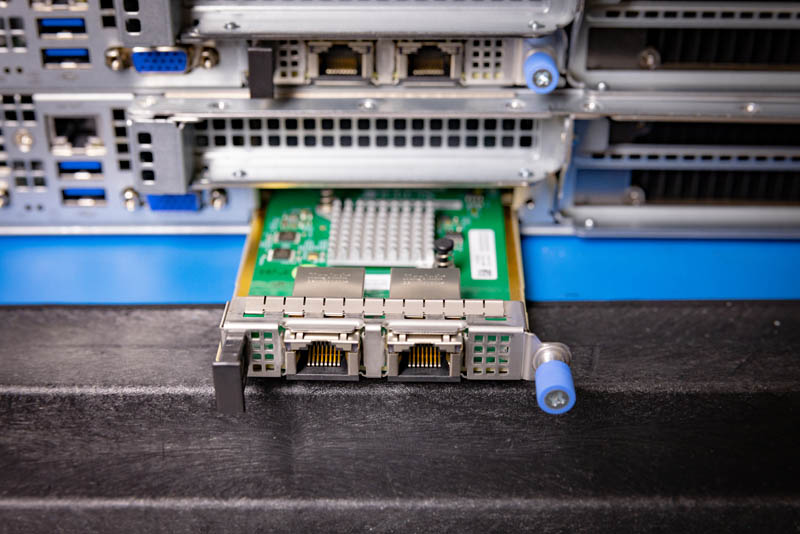

Onboard networking is once again provided by Supermicro’s AIOM modules. These OCP NIC 3.0 compatible modules can be swapped directly from the rear of the chassis without having to remove the entire node. Our demo system had a pair of 10Gbase-T ports but there are other options available to customize networking to fit whatever deployment is being used.

The rest of the rear IO is fairly standard fare, with a dedicated BMC NIC, serial port, VGA, and a pair of USB ports. The AIOM module is the main source of networking on this platform. That somewhat makes sense given that this can be deployed both for remote workstation or server duties.

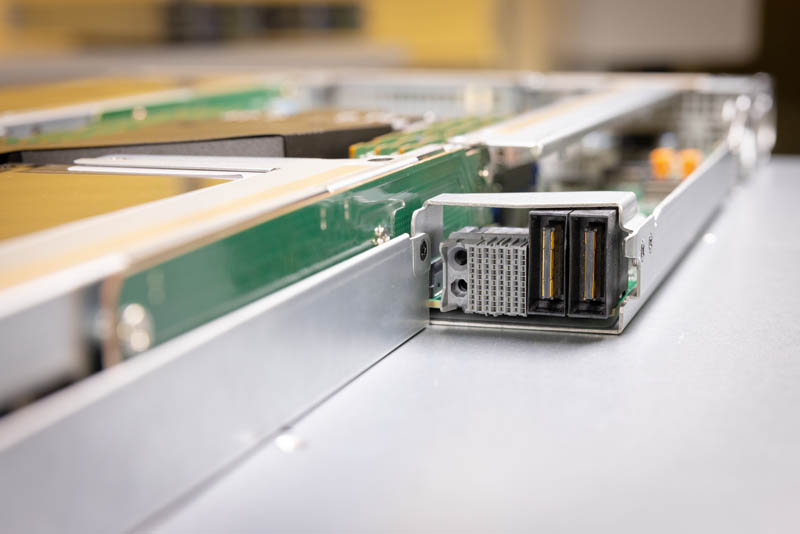

The BigTwin routed all data and power over a single large high-density connector. This is a big upgrade versus some of the older gold finger PCB connectors we saw. Supermicro is taking this advancement and bringing it to this platform as well. This may seem like a small feature, but it matters to ensure that these nodes remain serviceable.

Overall, this is a system that was interesting because the impact of the solution did not immediately present itself. Once we learned more, the value proposition makes a lot of sense. We will get to that next.

Market Impact

One of the big misses we had on-site was the target market. Something we knew is that this is actually a popular platform for Supermicro. Our original hypothesis was that sharing chassis, fans, and power supplies would significantly lower costs. While that is a big driver, after we did the on-site, we learned about the customer(s) for this and what they are doing. At around 05:15 in the video, you can see we actually had to splice in a part to address this.

It turns out that the SYS-210GP-DNR has an interesting usage model. A very large studio (we cannot name the customer, but it is a very well-known large studio) is using these nodes for two purposes. The primary purpose is to provide remote workstations. One can use higher clock speed/ high core count CPUs along with the attached GPUs for very powerful remote desktops. In these large studios, the data sets can be enormous rendering footage so having remote artists and editors is challenging since WAN bandwidth and latency can be an issue. Instead, these systems are centralized.

That allows two major usage models for the studios. First, the workstations can be accessed on an as-needed basis, so artists around the world can potentially use the same hardware due to working across different time zones. With expensive CPUs and GPUs, these are not average VDI systems. Second, and perhaps more interestingly, these nodes can also be deployed in the evenings as servers to help with rendering animations as part of the broader cluster.

This system effectively has a few major benefits:

- Cost savings from the shared chassis design

- Easier maintenance than 1U GPU servers with the fixed chassis

- Lower power consumption by using larger fans and shared power supplies

- Providing lower-cost high-end remote workstation pools

- Providing a hybrid model of high-end workstation and cluster server

Those are the key drivers over using standard 1U servers for this type of application. Since we missed this, we wanted to address it here.

Final Words

The SYS-210GP-DNR is targeted at hybrid server and workstation workloads. This differs from other GPU servers on the market such as the HPC-focused Redstone server we looked at with NVLink. As GPUs have become more commonplace in different domain areas, we are starting to see servers evolve with them. This is a great example where large studios and even to some extent pandemic-related workplace changes are driving evolution in server design.

Once again, a big thank you to Supermicro for letting Patrick rummage about their demo room. This is the last one in this series, so we hope you have enjoyed them!

Probably a silly question, and 100% not what this unit was intended for but:

Is there any reason why instead of loading it with GPU’s I couldn’t load it with (for example) Fibre Channel Cards?

I work in an industry where a machine will have half a dozen fiber channel cards/ports in it to connect to multiple tape libraries and storage arrays, and we have many of these machines. As long as small “extension” cables were passed through from the GPU’s in the middle I don’t see why we couldn’t use one of these to get multiple machines in a smaller form factor.

@Harvey, is there something about your application(either raw bandwidth or particular devices being very, very, fussy about sharing) that keeps you from just using the fact that fiber channel treats switched fabric as a pretty standard topology?

I would suspect that this thing could support the multiple HBAs, given that GPUs are probably the PCIe devices with the most oddities, along with being fairly demanding; I’m just a little surprised that you would need to stuff the system that full of HBAs rather than using just enough ports to connect to the switch, ideally with some redundancy.

As for the use of these as remote workstations; has the industry coalesced around one option as the de-facto standard for remote keyboard/video/mouse in cases where artifacts and nagging lag just aren’t acceptable(like graphic artists using tens of thousands of dollars worth of GPUs) at rangers greater than ‘just use high quality displayport cables’ covers; or is that still up in the air with multiple contenders?

@fuzzyfuzzyfungus: We use the machines as ESXi hosts, and an average machine will have 2-3 dozen VMs on it (most usually suspended). It’s not possible (at least to my knowledge) to have a single card be shared between multiple machines, so each powered VM needs it’s own dedicated PCI card in passthrough mode. We do make extensive use of switches in our network, as our main datacenter has about 40 tape libraries in it.

And in case you were wondering, the feature of ESXi that lets you assign “virtual WWN Fibre Channel” to a VM, does not work with tape libraries and is storage array only which isn’t particularly useful for us as arrays are a very small part of our work.

@Harvey: In almost any modern hypervisor one can leverage on a technology called Single Root IO Virtualization (SR-IOV) which explicitly does what you are suggesting. Basically it consists on the PCIe device being able to split itself (virtually) in multiple functions (the part of the PCIe address after the dot) you can individually pass through to multiple VMs at the same time.

Then the physical device is capable of scheduling the instructions coming from each PCIe virtual function slot at hardware level, therefore with real low performances penalties. Ofc this can be hammered hard with all the VMs requiring full bandwidth at the same time, but for most applications the overhead is negligible.

This should give you what you need and considering that some of such cards allow for 32-way splitting or more, the consolidation you can get from such approach can be huge.

Have a look on google for “SR-IOV ethernet card with FC” and you should get some great examples. Consider that ESXi sometimes gets a bit picky on hardware support, so maybe you can start the search by “SR-IOV ethernet card with FC ESXi” to further restrict the results.

Hey Mr. Will,

Thanks for sharing such useful information with us, But as I can see your post is a little bit old. But still, you’ve successfully managed this article to be a great piece of work.

– Best Regards.