Burgeoning is not a word we use often at STH, but it aptly describes Intel’s new Lewisburg PCH options. Across the two server platform generations spanning the Intel Xeon E5-2600 V1 through V4, there were one to three main PCH options. The Intel Xeon Scalable Processor Family “Lewisburg” PCH family will be made up of seven SKUs, potentially more.

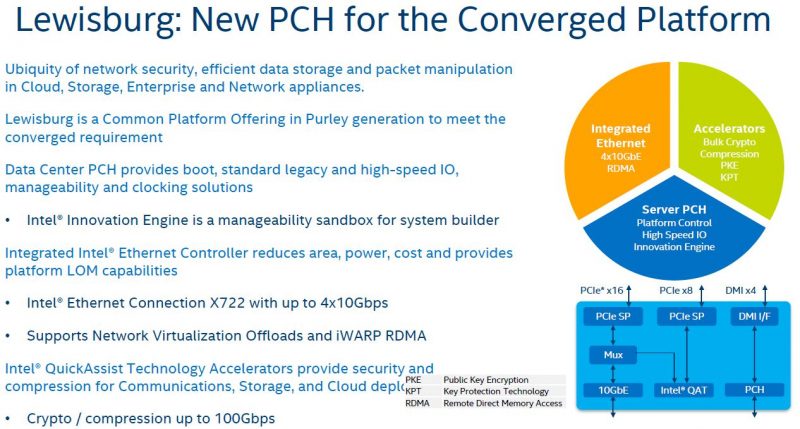

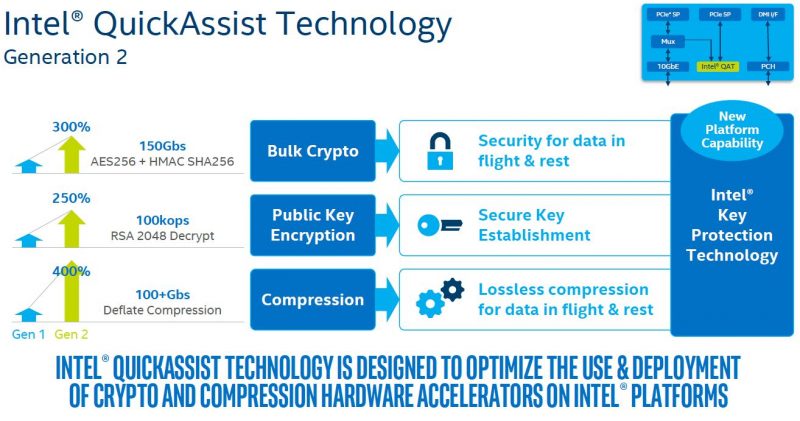

The new Lewisburg PCH family has some significant improvements. First, the back-haul to the CPU has essentially twice the bandwidth at a minimum, and up to several times more bandwidth depending on the system design. Second, for the first time up to 4x 10GbE can be integrated on the PCH, with RDMA capabilities. Third, a new and faster generation of QuickAssist is coming to the PCH. Finally, there is a new “Innovation Engine” that will be available to OEMs.

Intel Lewisburg PCH Overview and Options

Here is the overview slide for the Lewisburg PCH. The slide does a great job of outlining the main points, but a key aspect is that it really describes how great the top-level PCH SKU, the LBG-L, is.

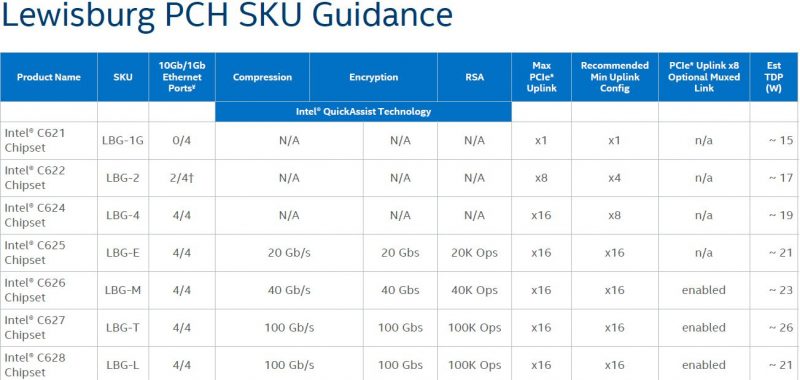

Here is the new SKU stack. Notice the seven different SKUs ranging from the low power Intel C621 LBG-1G 15W TDP SKU to the high power Intel C627 LBG-T 26W SKU.

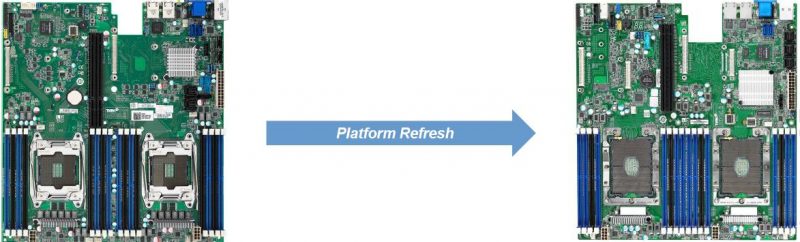

These power numbers are significant. The outgoing Intel C612 chipset had a TDP of 7W. That means Intel is adding 8W to 19W of additional TDP on the PCH, with the 8W increase applying even to the C621, which has only marginally better specs. You will see much larger heatsinks on the new PCH chips as a result. Here is an example from Tyan just moving from the C612 to the lower power C621 or C622 chipsets on its S7106 motherboard.

In comparison, AMD EPYC platforms do not have a PCH, which means that you cannot directly compare an AMD CPU TDP to an Intel CPU TDP. Coming full circle, lowering auxiliary chip TDP was a major coup for the original Intel Xeon E5 generation over the older Intel Xeon X5600 generation, and it is one of the contributing factors as to why Intel beat AMD around that generational change. Now the roles are reversed: Intel has a large, high-TDP part on the motherboard where AMD does not.

This is a SKU stack that on one hand makes sense, but on the other, we wish it did not. For example, it would be greatly simplified if the C621, C622 and C624 were consolidated into a single SKU with 4x 10GbE enabled. Likewise, we wish Intel had a single QuickAssist SKU instead of four. While it makes sense for Intel to target different OEM needs with different parts, this will hinder adoption of features like QuickAssist.

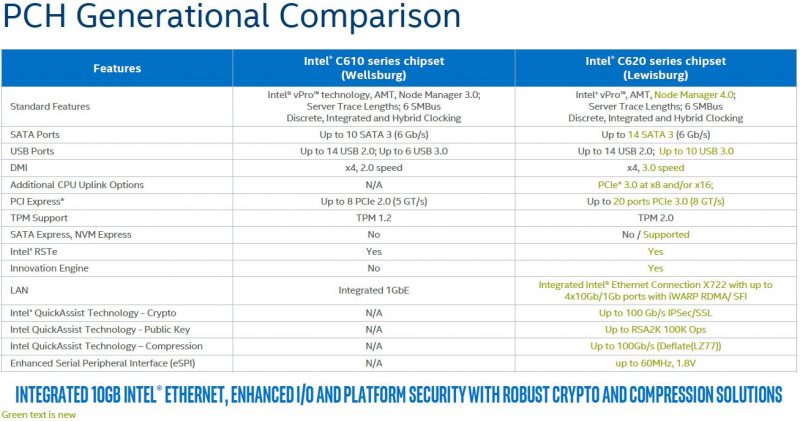

Here is the generational comparison between the C620 series and the outgoing C610 series. If you have an Intel Xeon E5 V3 or V4 system, you likely have a C612 chipset as we tested dozens of server platforms and every one of them used the C612.

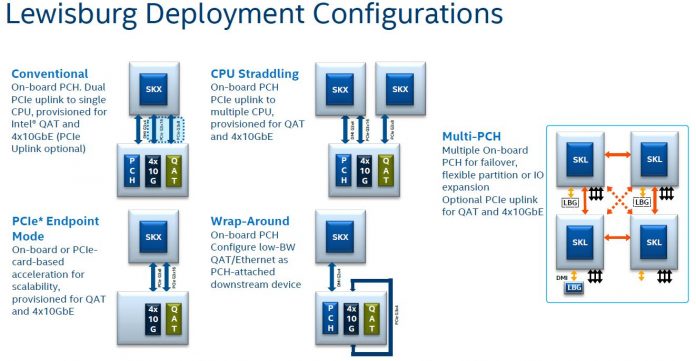

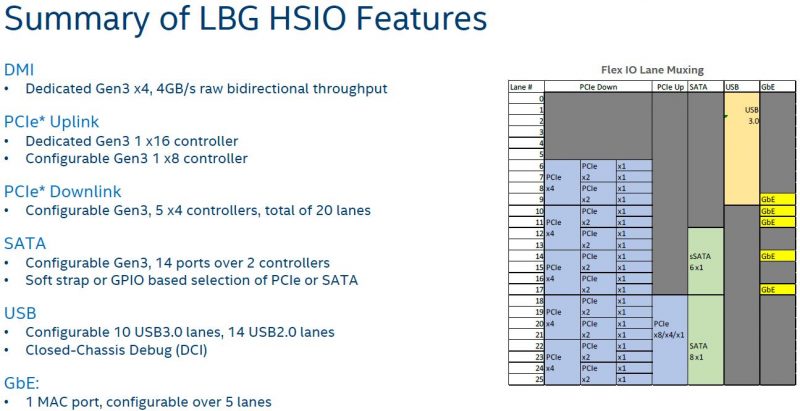

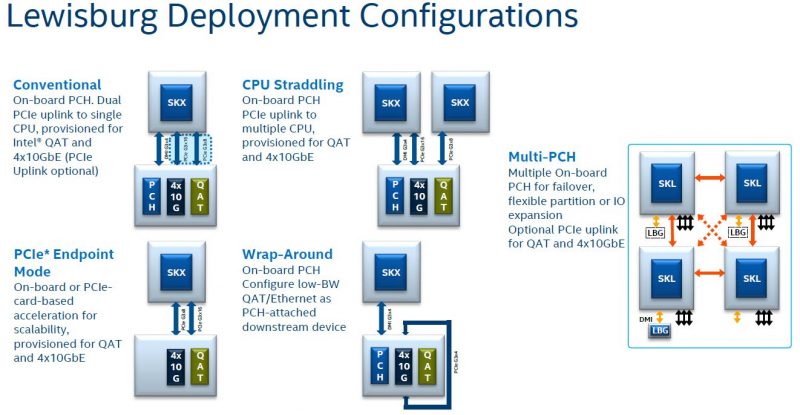

You will see a lot more on the PCH uplink capabilities in this generation than past generations. With up to 40Gbps of networking and 100Gbps QAT, one needs to have more than a PCIe 3.0 x4 uplink.

As a result, OEMs can route CPU PCIe lanes to the PCH.
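To put rough numbers on the uplink argument, here is a minimal back-of-the-envelope sketch. It relies only on the standard PCIe 3.0 figures of 8 GT/s per lane with 128b/130b encoding, and it ignores protocol overhead, so real usable throughput is lower. The link widths in the loop are illustrative, not a statement of which uplink configurations a given platform supports, and adding the networking and QAT figures together is a worst-case assumption on our part.

```python
# Back-of-the-envelope PCIe 3.0 bandwidth versus the feature figures in the text.
# Assumptions: 8 GT/s per lane, 128b/130b encoding, no protocol overhead modeled.

PCIE3_GT_PER_LANE = 8.0       # giga-transfers per second, per lane
PCIE3_ENCODING = 128 / 130    # 128b/130b line encoding efficiency

NETWORK_GBPS = 40.0           # 4x 10GbE, from the text
QAT_GBPS = 100.0              # peak QAT figure, from the text


def pcie3_gbps(lanes: int) -> float:
    """Approximate per-direction bandwidth of a PCIe 3.0 link in Gbps."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING


def uplink_headroom(demand_gbps: float, lanes: int) -> float:
    """Positive if the link can carry the demand, negative if it cannot."""
    return pcie3_gbps(lanes) - demand_gbps


if __name__ == "__main__":
    # Illustrative link widths only.
    for lanes in (4, 8, 16):
        bw = pcie3_gbps(lanes)
        print(
            f"x{lanes}: {bw:6.1f} Gbps | "
            f"headroom vs network {uplink_headroom(NETWORK_GBPS, lanes):+7.1f} | "
            f"vs network + QAT {uplink_headroom(NETWORK_GBPS + QAT_GBPS, lanes):+7.1f}"
        )
```

A PCIe 3.0 x4 link works out to roughly 31.5 Gbps per direction, which is already short of 40Gbps of networking before any QAT or SATA traffic is considered.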

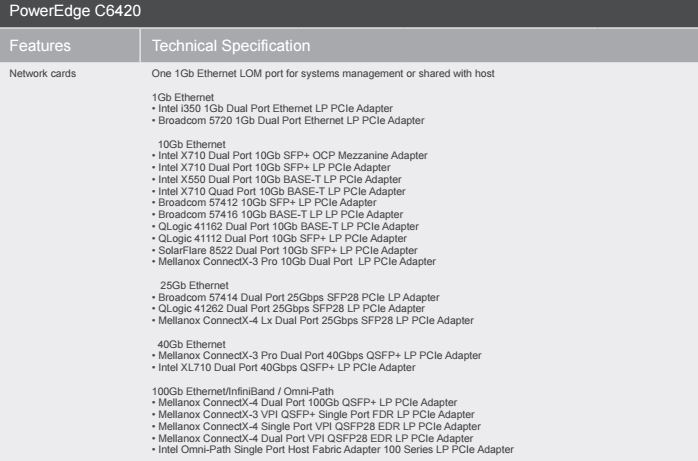

One of the major factors we have heard limiting Intel X722 networking adoption is this layout. For an OEM that may need to provide different networking options to a customer, supporting full 4x 10GbE operation (plus SATA storage) will require PCIe PCB traces to the PCH. That means the system is less flexible. It is not only small OEMs that are unwilling to make the sacrifice. Dell EMC, the largest server OEM, is using its own networking instead of the Intel X722 PCH 10GbE to service its customers. Here is an example of what we expect to be an extremely successful Dell PowerEdge C6420 density optimized server (where integrated 10GbE would be useful with limited I/O expansion space):

As you can see, conspicuously absent is an Intel X722 networking option. That is a clear signal in terms of OEM and customer direction in the space.

Intel Lewisburg PCH Networking: Hello Intel X722 10GbE

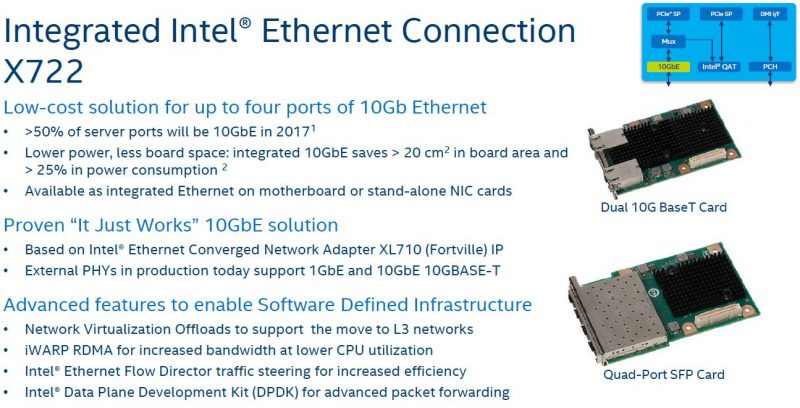

Perhaps one of the biggest changes in this generation will be the Intel X722 which will include 10GbE networking.

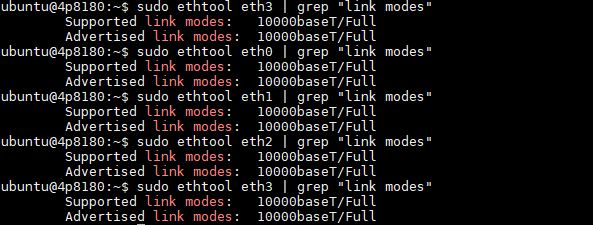

Here is an example from our 4-socket Intel Xeon Platinum 8180 test system:

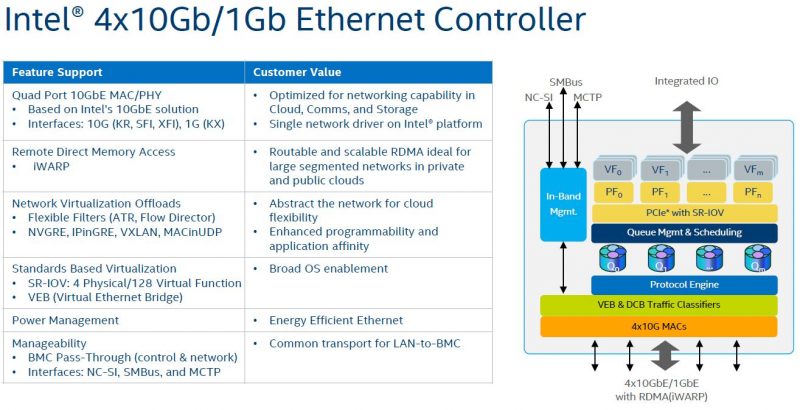

Here is the slide with the basic features of the NICs. You can see that the Intel X722 supports features like SR-IOV and important features (for onboard NICs) such as BMC pass-through.

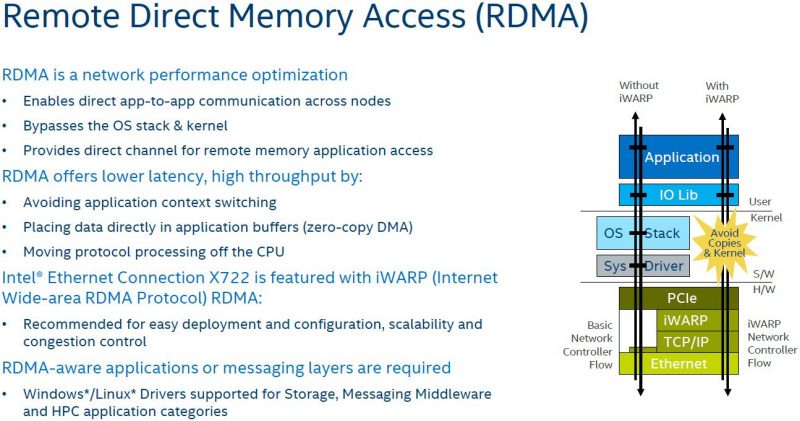

Another key feature is RDMA on the Intel X722. Intel supports iWARP instead of RoCE.

Combine this with DPDK and you can see that there is significant potential for connected devices to enhance their network throughput.

If your organization is still using 10GbE for this class of server, then this is a great option. If you are moving to a new hyper-converged infrastructure, then there is a good chance you will utilize 25GbE, 40GbE, 50GbE or 100GbE instead of 2x 10GbE or 4x 10GbE from the Intel X722.

Overall, we are happy to see 10GbE make it to the platform, but we do wish that Intel had made 10GbE standard and had not introduced the Intel C621. When a server needs to be re-deployed, needing to know which PCH version the machine has, and therefore its feature set, is an unnecessary level of complexity for operations teams.

At the end of the day, we hope this turns the tide and relegates 1GbE to management and provisioning networks in 2017.

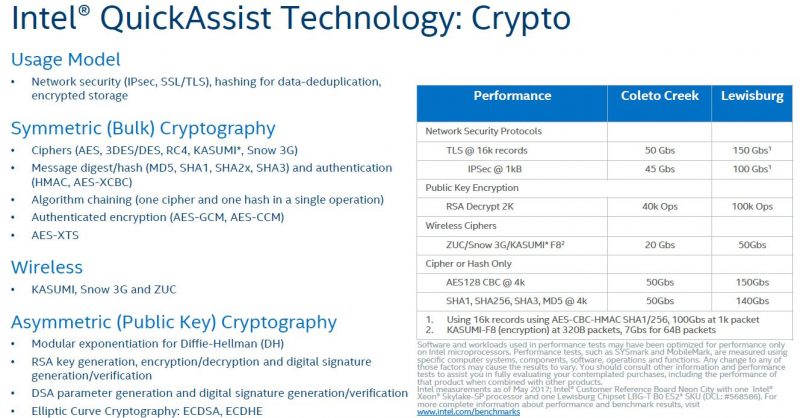

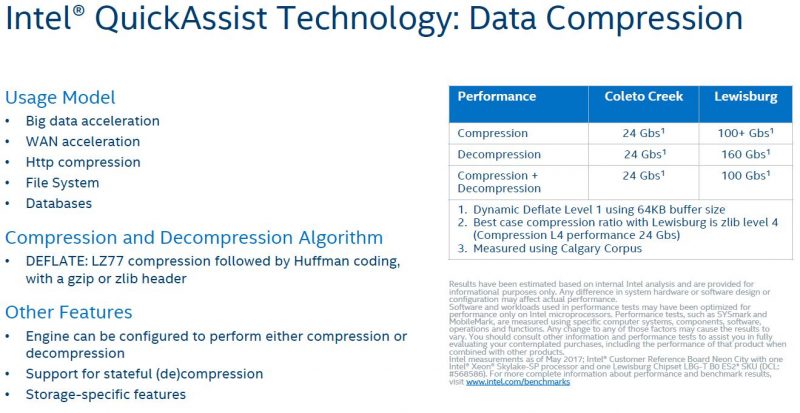

Optional Lewisburg Integrated New Intel QuickAssist Generation

Although Intel makes a big deal about the Lewisburg PCH integrating Intel QuickAssist Technology, there are a few major caveats. Most importantly, it is not available on every chipset. Also, this new generation will require different drivers than previous versions. Intel’s slide says “Generation 2”, which is not exactly accurate. The versions found in Rangeley and Coleto Creek were different and required different drivers. That added installation headache. At the same time, we see the value in Intel QAT.

Two of the biggest advantages are for offloading encryption and compression. At STH, we did the only 3rd party editorial benchmarks with QuickAssist (Coleto Creek generation) that you can read about here:

- Intel QuickAssist Technology and OpenSSL – Benchmarks and Setup Tips

- Intel QuickAssist at 40GbE Speeds: IPsec VPN Testing

The new QAT crypto engine can support 100Gbps operation in its highest-end configurations.

We did not get to benchmark compression workloads the last time around, but again, the new high-end QAT enabled C620 series PCH examples can offer exceptional offload performance.

Here is the main caveat to this. If Intel wanted widespread QAT adoption, the company would have taken a minor (~2W – 6W TDP) hit and enabled it on every PCH. The technology works well but it is still far from automatic. For example, in CentOS/RHEL one needs to unload the default QAT driver, install the QAT driver version that matches the hardware, then ensure the software works with QAT in order to start using it. If QAT were standard in the Lewisburg PCH, those writing software would have more of an incentive to better support the technology.
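As a small illustration of the first step above, here is a hedged sketch that reports which QAT kernel modules are currently loaded on a Linux host by reading `/proc/modules`. The module names listed are the ones we understand the upstream Linux kernel QAT drivers to use; treat them as assumptions and verify against your distribution, since vendor driver packages may differ. This only inspects state. It does not unload or install anything.

```python
# Sketch: list loaded QAT kernel modules by parsing /proc/modules.
# The module names below are assumptions based on the upstream Linux
# QAT drivers; confirm them on your own distribution before relying on this.
from pathlib import Path
from typing import List

KNOWN_QAT_MODULES = {
    "intel_qat": "common QAT support code",
    "qat_dh895xcc": "DH895xCC (Coleto Creek) devices",
    "qat_c3xxx": "C3xxx devices",
    "qat_c62x": "C62x (Lewisburg PCH) devices",
}


def loaded_qat_modules(proc_modules_text: str) -> List[str]:
    """Return known QAT module names found in /proc/modules-style text."""
    loaded = []
    for line in proc_modules_text.splitlines():
        fields = line.split()
        # The first whitespace-separated field on each line is the module name.
        if fields and fields[0] in KNOWN_QAT_MODULES:
            loaded.append(fields[0])
    return loaded


if __name__ == "__main__":
    path = Path("/proc/modules")
    text = path.read_text() if path.exists() else ""
    found = loaded_qat_modules(text)
    if not found:
        print("No known QAT modules loaded")
    for name in found:
        print(f"{name}: {KNOWN_QAT_MODULES[name]}")
```

Knowing which module is loaded is only the starting point. Whether it is the right driver version for a given PCH SKU still has to be checked against Intel's driver documentation.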

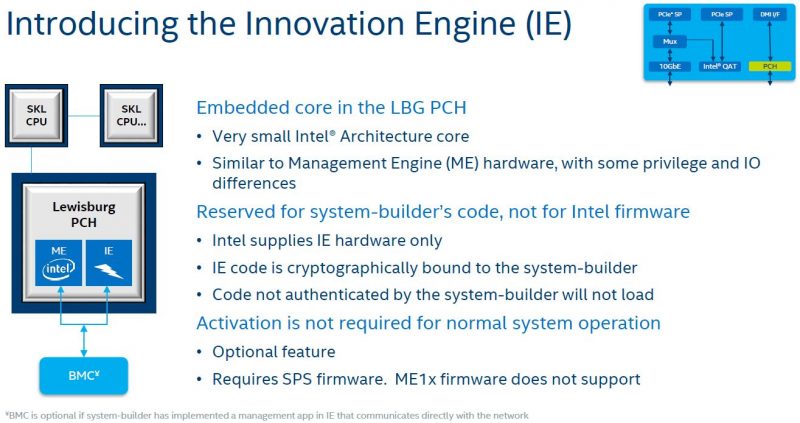

Intel Innovation Engine

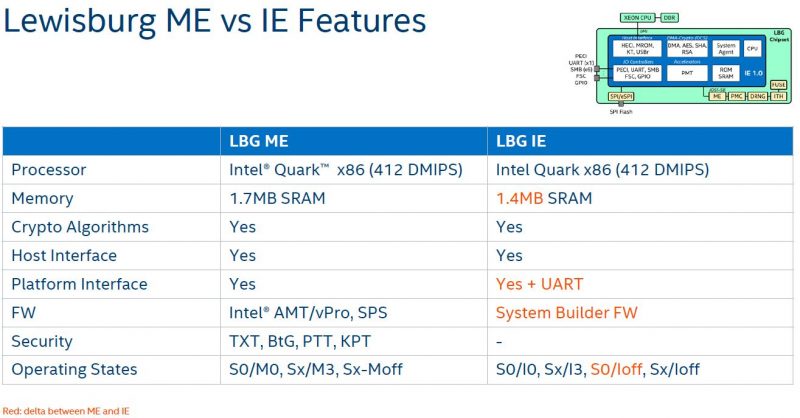

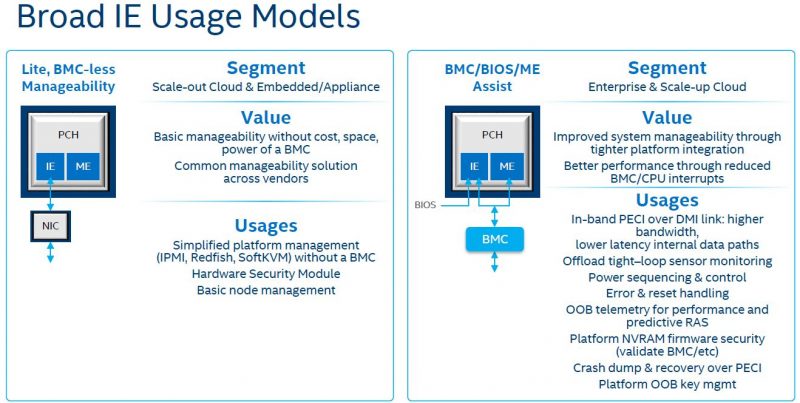

The Intel Innovation engine is a curiosity. It is a small x86 CPU engine that OEMs can leverage to add their own management capabilities.

Intel is adding this alongside the standard management engine, with tools aimed at system builders (and hyper-scale customers).

Intel came up with several usage models and in the future, as Redfish APIs mature, it may become significantly more useful.

For now, we see most vendors using existing capabilities and an external BMC (usually powered by ARM CPUs) for management features. OEMs told us one reason for lower adoption is that this is a new Intel-only feature, yet their control planes must handle multiple system architectures.

Additional Lewisburg Features

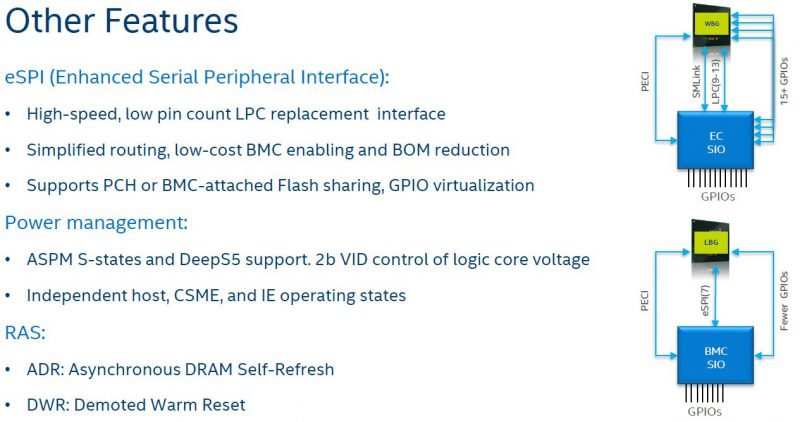

Intel shared an additional slide that we wanted to include. It was titled “Other Features”:

The Lewisburg PCH does include GPIO, whereas on AMD EPYC that feature is found in the SoC itself.

Final Words

If you have a dense configuration, the QAT and 10GbE enabled PCH options are going to be stellar. OpenSSL is widely used and can support QAT out of the box now. 10GbE is a mainstream networking option. The biggest PCH feature may be one we did not discuss completely: SATA lanes. By pushing lower speed SATA III lanes to the PCH, Intel is not “burning” PCIe lanes for SATA devices like AMD does with EPYC. Both implementations have advantages and disadvantages, but there are merits to the PCH approach. In the dozens of Skylake-SP platforms we have handled over the past few months, one of the first things we noticed was the size of the PCH heatsinks. They are absolutely huge compared to the C602/C612 generation.