Today, Amazon announced AWS EC2 instances with Intel Habana Gaudi training cards. We have been tracking Habana Labs for some time. As it turned out, Habana was part of a bake-off at Facebook, and it beat Intel's Nervana chips. We discussed this a bit more in Favored at Facebook Habana Labs Eyes AI Training and Inferencing. After Habana won the bake-off, Intel acquired the company (see Intel Acquired Habana Labs Stoking its AI Efforts) and shuttered its Nervana training cards.

Amazon AWS Announces EC2 Intel Habana Gaudi Instances

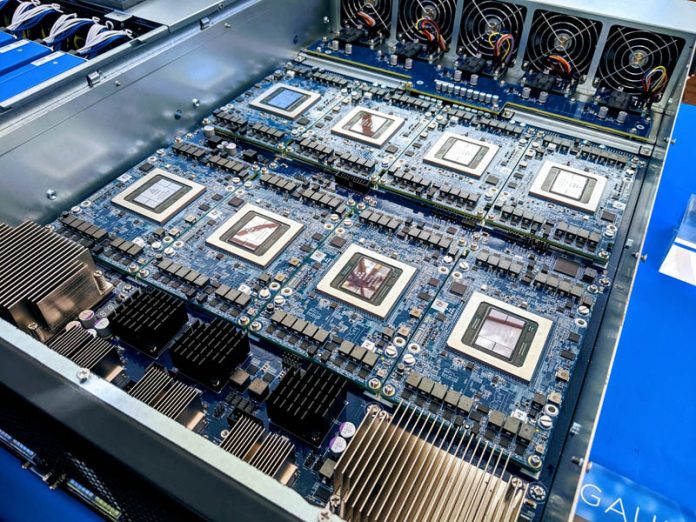

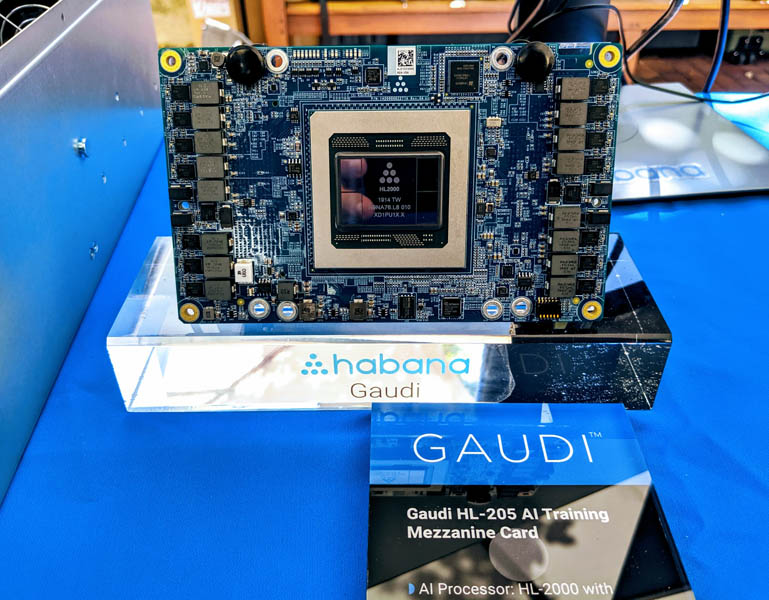

As part of its AWS re:Invent 2020 conference keynote, the company announced a new AI training accelerator coming to the cloud “in the first half of 2021.” In marketing translation, the “first half” of a year generally means Q2. This is a big deal since it means another major hyperscaler is signing onto the Habana platform, joining Facebook. Although Amazon did not say which version of Gaudi it is using, Intel’s press image showed the Open Compute Project OAM/OAI version. Rather than a press rendering, here is an actual Intel Habana Gaudi HL205 OAM card in person.

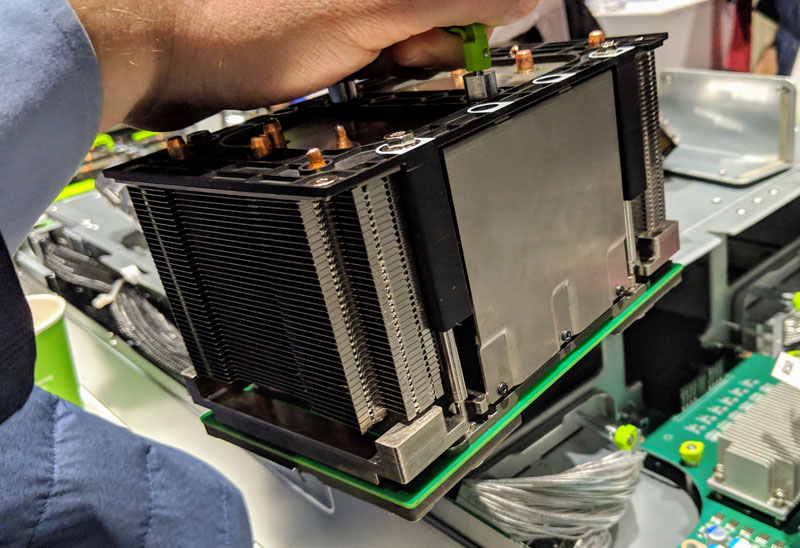

OAM modules are designed for massive coolers atop the unit, similar to what we saw with NVIDIA parts in our Inspur NF5488M5 Review a Unique 8x NVIDIA Tesla V100 Server. Here is the air-cooled OAM board from a Facebook Zion system that we got to see at the 2019 OCP Summit, when we covered the Facebook OCP Accelerator Module OAM Launch and the Facebook Zion Accelerator Platform for OAM. We also recently covered OAM in our OCP China Day 2020 Interview with Bill Carter and Shen Rong of Inspur.

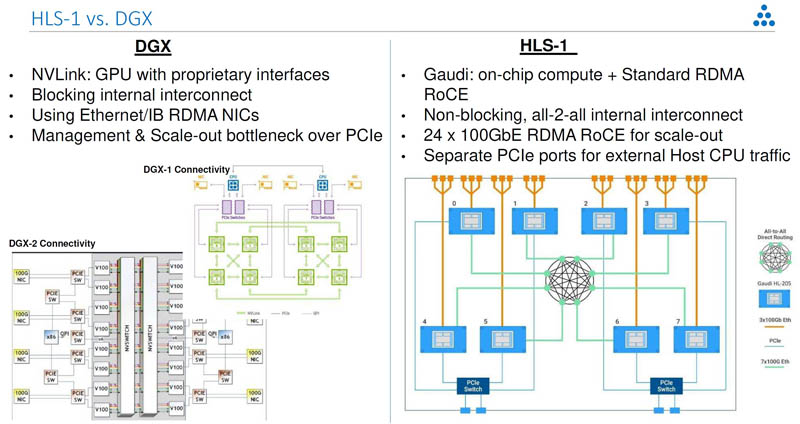

Key to the OAM form factor is a ton of high-speed, high-density I/O. Gaudi uses that I/O both for host connectivity and for its high-speed RoCEv2 Ethernet interconnect. For cloud providers, we hear 100GbE can be preferable since it integrates more easily into existing infrastructure than InfiniBand.

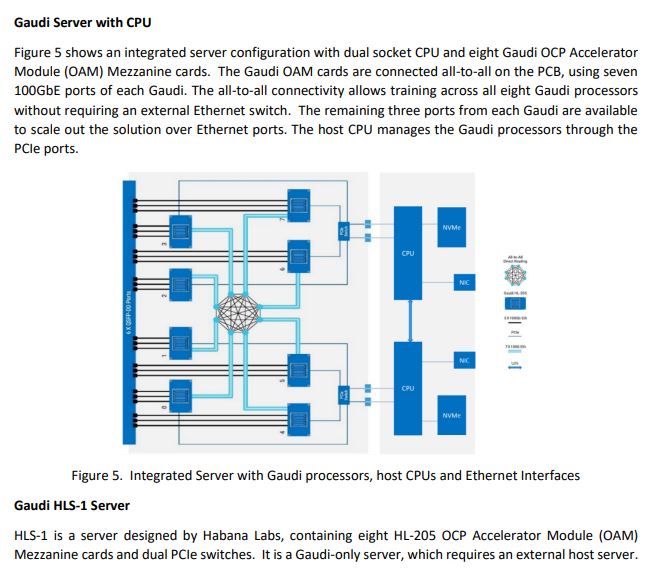

The cover image for this article is the 8x OAM accelerator server. Habana discusses and shows the above training server infrastructure in its whitepaper.

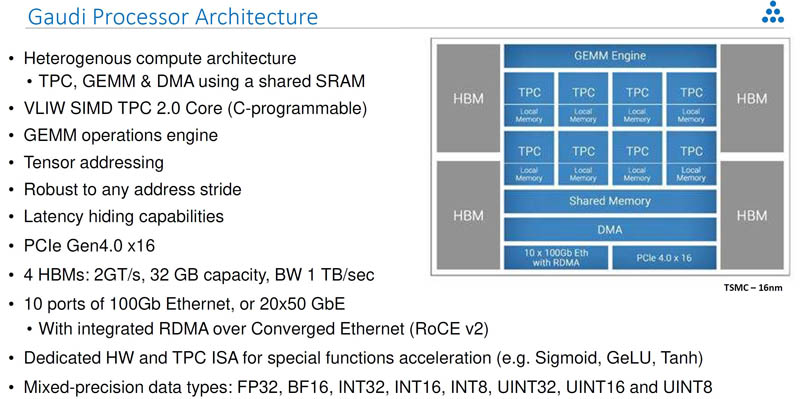

Getting into Gaudi, here is the high-level view. This is another PCIe Gen4 architecture with HBM, as we would expect from a mid-2021 solution. Each Gaudi processor has either 10x 100GbE or 20x 50GbE ports, for 1Tbps of Ethernet bandwidth.
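The 1Tbps figure is simple port arithmetic. As a quick sanity check, here is a minimal Python sketch; the names `PORT_CONFIGS` and `aggregate_gbps` are purely illustrative, and the results are raw line rates that ignore Ethernet and RoCEv2 protocol overhead.

```python
# Aggregate Ethernet bandwidth of one Gaudi processor, using the two port
# configurations quoted above. Names here are illustrative only.

PORT_CONFIGS = {
    "10x 100GbE": (10, 100),  # (port count, per-port speed in Gb/s)
    "20x 50GbE": (20, 50),
}


def aggregate_gbps(ports: int, speed_gbps: int) -> int:
    """Total raw line rate in Gb/s across all ports (no protocol overhead)."""
    return ports * speed_gbps


for name, (ports, speed) in PORT_CONFIGS.items():
    total_gbps = aggregate_gbps(ports, speed)
    total_tbps = total_gbps / 1000  # 1 Tb/s = 1000 Gb/s
    total_gbytes = total_gbps / 8   # 8 bits per byte
    print(f"{name}: {total_gbps} Gb/s = {total_tbps} Tb/s = {total_gbytes} GB/s")
```

Both configurations work out to 1000 Gb/s, or 1.0 Tb/s (125.0 GB/s) of raw port bandwidth per chip; splitting it into more, slower ports trades per-link speed for more links in the interconnect topology.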

One of the big advantages Intel Habana is highlighting with the Gaudi-based HLS-1 is that it does not use NVLink with proprietary NVSwitches. Instead, it uses more commoditized Ethernet interconnects.

Based on the announcements Intel is making with AWS, the EC2 Habana instances are likely to be OAM-based. Still, for those wondering, there are Gaudi HL-2000 PCIe cards as well.

These have been shown in servers for over a year; we saw them at Hot Chips 2019.

Intel has not been pushing its Habana portfolio publicly. However, releasing these types of PCIe Gen4 training cards alongside a late Q1/early Q2 Ice Lake Xeon launch would make a lot of sense.

Final Words

Overall, competition in the industry is good. Intel Habana has 32GB Gaudi training chips, and the AMD Instinct MI100 also has 32GB. NVIDIA just announced the NVIDIA A100 80GB. There are also others, such as the Graphcore GC200 IPU, that focus on similar areas. Of course, the big one we want to see in AWS is probably the Cerebras WSE. Check out Our Interview with Andrew Feldman CEO of Cerebras Systems for a bit more on that solution, which is something totally different in design.

Alongside this announcement, however, Amazon did something clearly deliberate: it also announced AWS Trainium, its custom-designed training chip. For Intel, the EC2 keynote announcement was a mixed message. Amazon is saying it is deploying Habana while, at the same time, saying it believes it has a sufficiently better way to address the market that it is launching its own chips.

Hopefully, 2021 will be more competitive in the AI training space, as competition is now coming from some very large companies other than Google.