At AWS re:Invent 2021, the company went into one of the fundamental changes it used to accelerate innovation: its Nitro cards. The closest comparison we can draw is to the DPUs that are starting to become more prominent in the industry, but AWS was deploying Nitro at scale in 2017. This is the model that the rest of the industry will need to adopt, so it is worth looking at what drove the change.

AWS Nitro the Big Cloud DPU Deployment Detailed

AWS is on a path to build its own chips. While some think that the simple reason is cost, we are actually going to get into why it goes beyond cost and instead is about delivering capabilities before the rest of the industry.

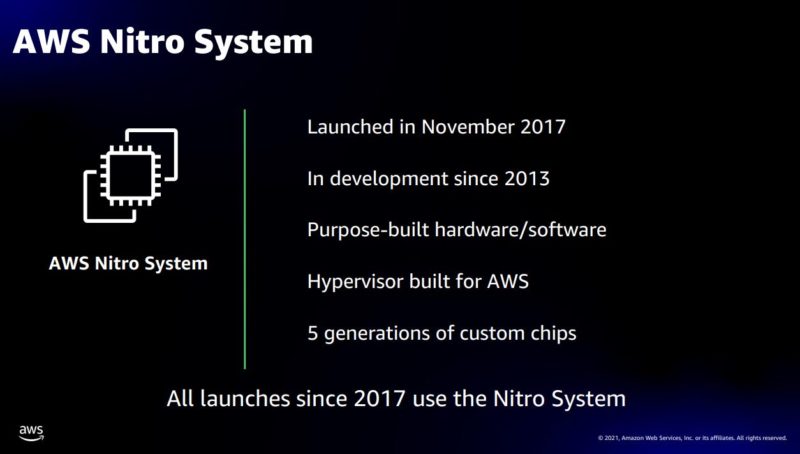

We have discussed AWS Nitro many times on STH and the unique capabilities it provides. Key here is that it has been a cornerstone of AWS for the past four years. Putting that into perspective, the capabilities Nitro provides are still not replicated for private clouds.

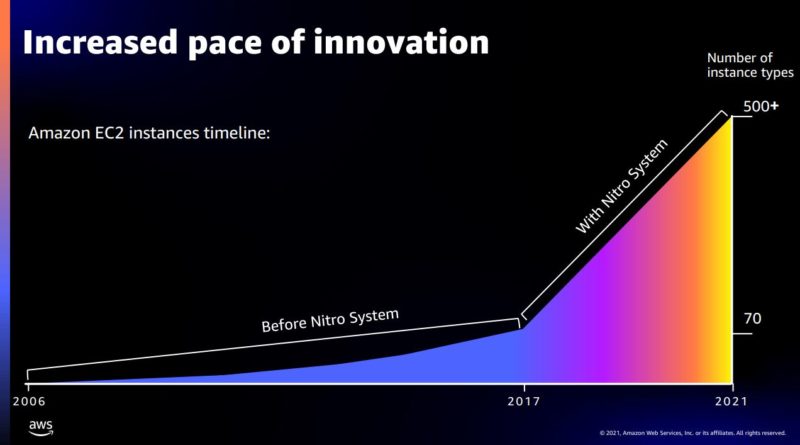

The impact has been huge. Nitro has enabled AWS to rapidly expand the types of instances it can provide by being a common point to manage hardware.

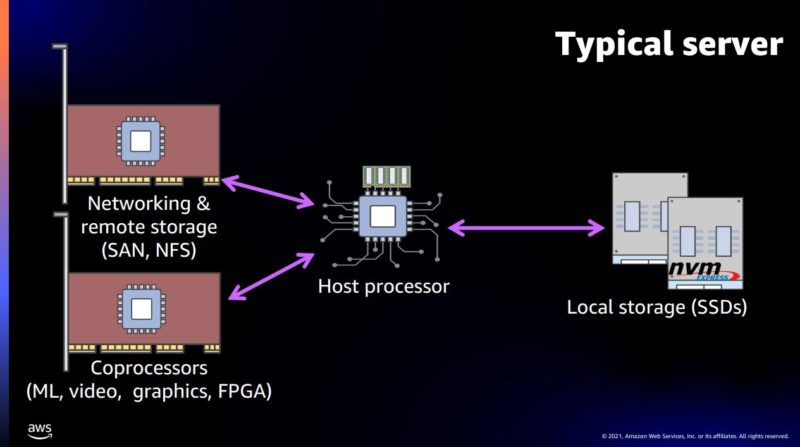

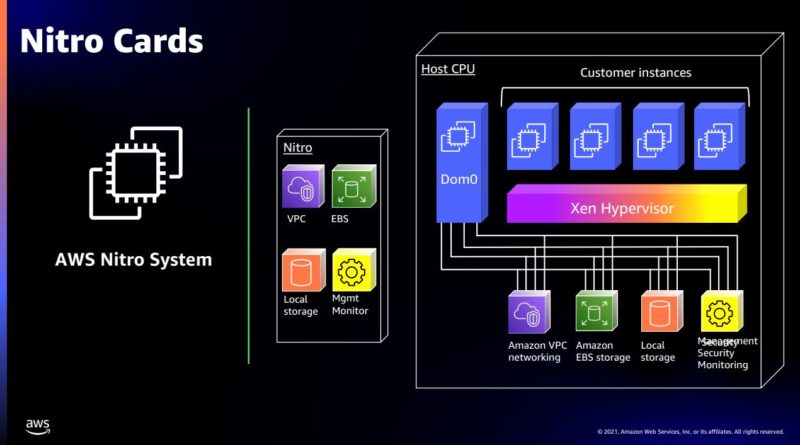

Taking a step back, here is AWS’s diagram of a standard server. One can see the various components of the server, and probably one of the most interesting PCIe connectors ever rendered.

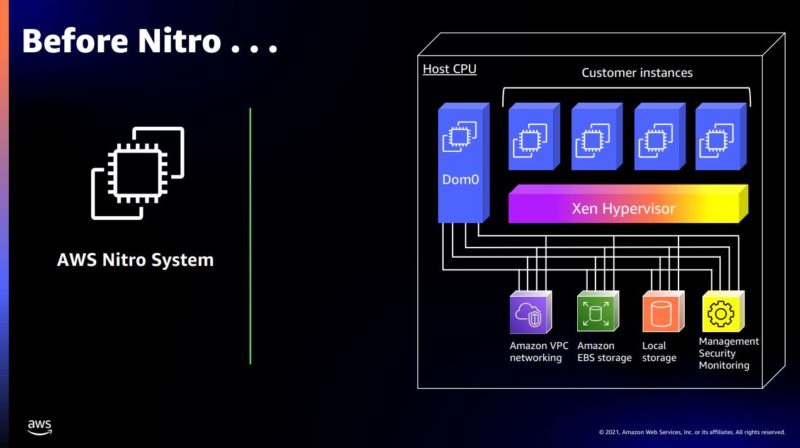

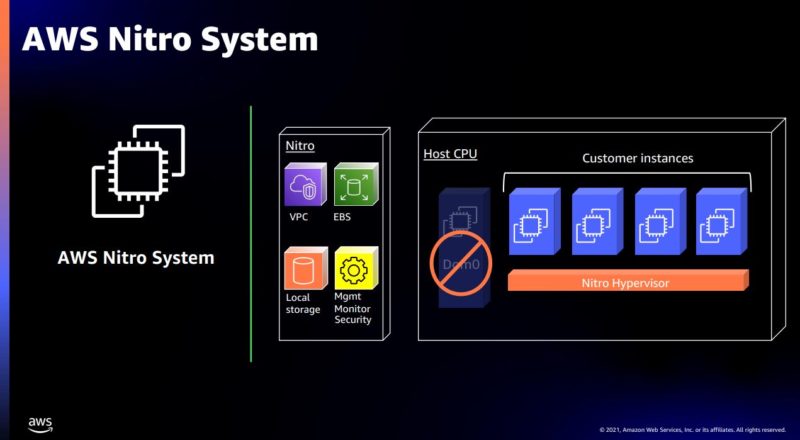

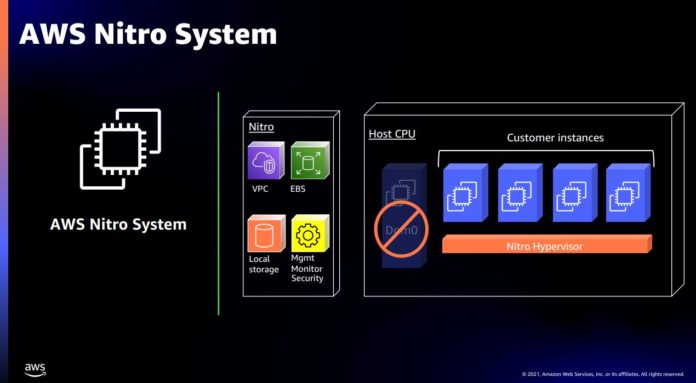

Prior to the Nitro system, AWS utilized the Xen hypervisor. The key here is that the VPC networking, EBS storage, local storage, and management functions all happen in dom0, the privileged Xen control domain. An easier way to look at this is that the same CPU that is being provisioned for EC2 instances also has to handle the networking, storage, and management.

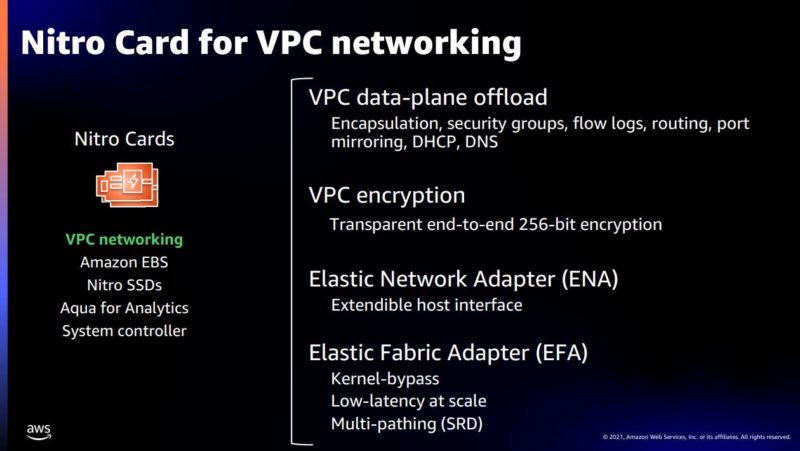

One of the interesting bits in the re:Invent presentation is that there are several types of Nitro cards. There are cards for networking, EBS storage, SSDs, Aqua for Analytics, and also a system controller. Looking at the VPC networking side, there are several key features. First, the data plane is offloaded to the Nitro card, as is the end-to-end encryption. One of the key capabilities here is that the Elastic Network Adapter (ENA) is presented by the Nitro card. This ENA can scale from 10Gbps to 100Gbps on the same driver, which helps with portability across AWS’s cloud. If you have dealt with how Intel’s Ethernet drivers change between generations, as one example, that is quite an accomplishment. The Elastic Fabric Adapter is a higher-performance option aimed more at HPC-style workloads.
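On a Linux guest, one way to see the "same driver" point in practice is to check which kernel driver is bound to a network interface. The sketch below reads the standard sysfs `device/driver` symlink. The helper name `driver_for` and its `sysfs_root` parameter are our own, added so the lookup can be pointed at a test directory. We would expect an ENA-backed interface to report `ena`, but treat that as an assumption rather than something verified here across instance types.

```python
import os
from typing import Optional


def driver_for(iface: str, sysfs_root: str = "/sys/class/net") -> Optional[str]:
    """Return the kernel driver bound to a network interface, or None."""
    # On Linux, <sysfs_root>/<iface>/device/driver is a symlink to the driver.
    link = os.path.join(sysfs_root, iface, "device", "driver")
    if not os.path.islink(link):
        # Purely virtual interfaces such as loopback have no backing device.
        return None
    return os.path.basename(os.path.realpath(link))


if __name__ == "__main__" and os.path.isdir("/sys/class/net"):
    # List every interface on this host alongside its driver name.
    for name in sorted(os.listdir("/sys/class/net")):
        print(name, driver_for(name))
```

If the driver name stays the same as an instance moves between sizes and generations, the guest image does not need a new NIC driver for each move, which is the portability benefit described above.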

Nitro cards are effectively designed to take over the VPC networking, EBS storage, local SSD storage, and management controller functions from the hypervisor.

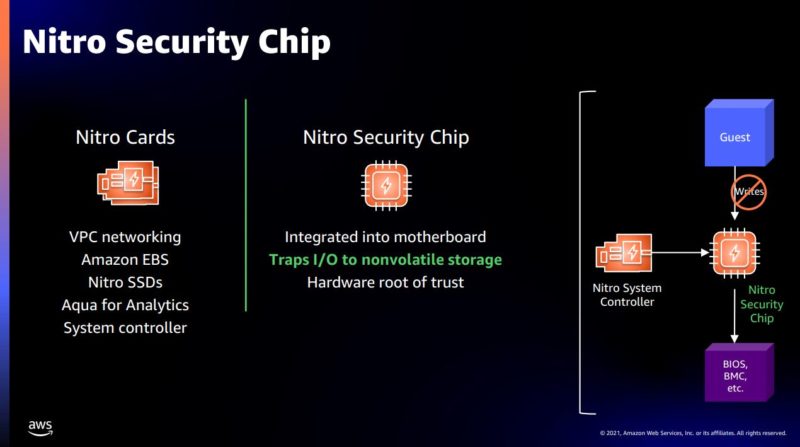

On the security side, the Nitro security chip is very important. One of its big functions is to block unauthorized writes to nonvolatile storage. While one may immediately think this means local SSDs, the more impactful way to think about this is all of the small stores in a server. For example, the BMC has its own flash storage, and a fan controller can have its own storage for its microcontroller. In an environment like the AWS cloud, AWS cannot afford for an attacker to compromise microcontroller storage. The chip also allows AWS to do things like push firmware updates without impacting customers running workloads on a server.

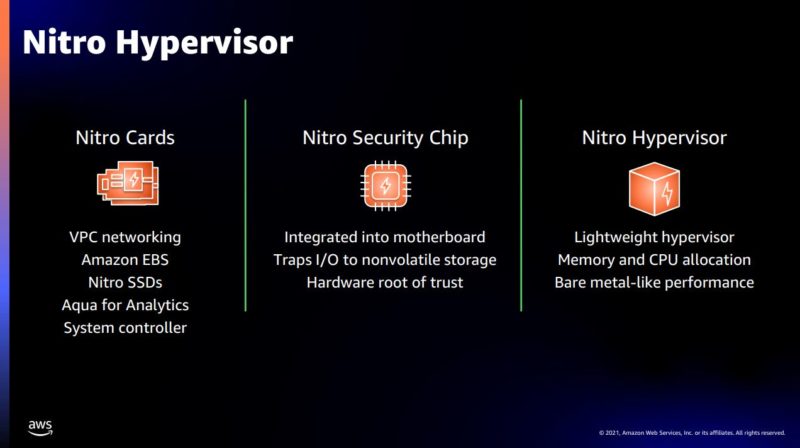

Another part of AWS’s magic is that it stopped using Xen and now uses the lighter-weight Nitro hypervisor.

Since AWS is using Nitro cards for the functions we discussed earlier, it no longer needs to have the host CPU handle those. That does a few things. First, the functions in dom0 are offloaded, so the hypervisor can focus on just provisioning CPU and memory. The second impact is that the host CPU cycles those functions used to consume are now spent on the Nitro cards instead, leaving the host CPU for customer instances.
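To make the offload argument concrete, here is a small back-of-the-envelope sketch of how many host cores can be sold to customers when some are held back for dom0-style work. The `Host` class and the core counts are hypothetical, chosen only for illustration. They are not AWS figures, and the zero-reservation case is an idealization.

```python
from dataclasses import dataclass


@dataclass
class Host:
    total_cores: int
    # Cores held back for networking, storage, and management on the host.
    host_reserved_cores: int

    @property
    def sellable_cores(self) -> int:
        """Cores left over for customer instances."""
        return self.total_cores - self.host_reserved_cores

    @property
    def sellable_fraction(self) -> float:
        """Share of the host CPU that can be provisioned to customers."""
        return self.sellable_cores / self.total_cores


# Illustrative numbers only; not AWS figures.
xen_style = Host(total_cores=64, host_reserved_cores=8)
offloaded = Host(total_cores=64, host_reserved_cores=0)

if __name__ == "__main__":
    for label, host in (("dom0 on host", xen_style), ("offloaded", offloaded)):
        print(f"{label}: {host.sellable_cores} cores, {host.sellable_fraction:.1%}")
```

Under these made-up numbers, the host-based design can sell 56 of 64 cores while the offloaded design can sell all 64. The real split depends on the platform, but the direction is the point.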

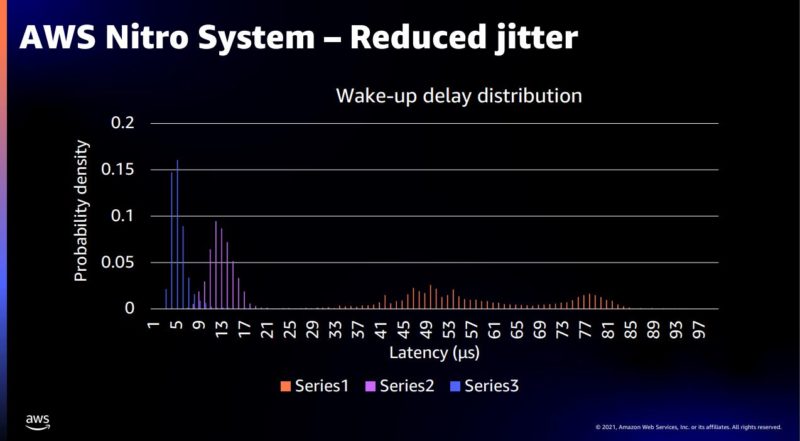

Offloading the dom0 functions reduced jitter. “Series 1” below is a pre-Nitro c4 generation instance, “Series 2” is a c5 generation instance with Nitro, and “Series 3” is Graviton with Nitro.

So there are performance benefits for AWS in getting rid of the old Xen setup and instead moving to the Nitro solution.
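For readers who want to put a number on jitter in their own environment, the sketch below times short sleeps and summarizes how far each one overshoots. This is a generic measurement we are suggesting, not the method AWS used for its chart. The function names are our own, and the sample count and interval are arbitrary assumptions.

```python
import math
import time


def percentile(ordered, p):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def jitter_stats(samples_ns):
    """Summarize latency samples: median, tail, and min-to-max spread."""
    ordered = sorted(samples_ns)
    return {
        "p50": percentile(ordered, 50),
        "p99": percentile(ordered, 99),
        "max": ordered[-1],
        "spread": ordered[-1] - ordered[0],
    }


def wakeup_overshoot_ns(n=200, interval_s=0.001):
    """Measure how much longer than requested each short sleep takes."""
    requested_ns = int(interval_s * 1e9)
    samples = []
    for _ in range(n):
        start = time.perf_counter_ns()
        time.sleep(interval_s)
        samples.append(time.perf_counter_ns() - start - requested_ns)
    return samples


if __name__ == "__main__":
    print(jitter_stats(wakeup_overshoot_ns()))
```

A wide gap between the median and the tail, or a large spread, suggests something else is competing for the CPU, which is the kind of interference that moving dom0 work off the host is meant to reduce.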

Final Words

The key takeaway here is really the gap between what AWS is doing and what the private clouds of the world are doing. The promise of DPUs is that they can bring this operating model to other clouds. The Intel Mount Evans was designed to bring this functionality to Google Cloud. What is clearly missing is a solution for the rest of the industry. This is a big gap that HPE, Dell EMC, Lenovo, and other vendors in the industry need to close. The NVIDIA BlueField-2 is a step in this direction, but the software support is still early. VMware Project Monterey is hoping to bring this to VMware’s ecosystem. Support for this functionality needs to go beyond VMware and into OpenStack and other clusters. The challenge is that AWS Nitro is the leading practice in the industry, and AWS is four, going on five, years ahead of the industry.

The big challenge, of course, is that while AWS is able to add instances such as Apple Mac Mini nodes (AWS added M1 at this re:Invent), greatly increasing the types of instances it offers, adding DPUs is a bigger challenge for the big hardware providers. On one hand, they need the functionality to stay relevant. On the other, adding DPUs takes away a lot of their differentiation. This is a big engineering challenge, but it is one that will need to be tackled for the industry to thrive.

Adding this amount of specialization, at the hardware level, seems like it significantly increases the amount of differentiation available; it’s just less obvious who will be in the position to capture it.

In the case of these Nitro devices, it’s clearly Amazon (unsurprisingly): they lower the amount of vendor cooperation required to add pretty much any remotely normal node that has a PCIe interface to talk to as one that looks much like the rest from the perspective of their management systems; and they implement accelerated versions of things that are really only useful if you are also operating your networking, networked storage, and the like exactly as AWS does.

What will be interesting to see is how things shake out in terms of who will end up pushing (and who will end up actually succeeding) in the case of DPUs not being built in-house by people whose infrastructure is large enough to have a gravitational field of its own.

Is it going to be a push from the side of hardware vendors who make servers and at least one other type of datacenter equipment (e.g. Dell positions the ‘PowerEdge Fabric Storage’ device as the digital-transformation-cooler-and-better alternative to the PERC; and did we mention that, while capable of generic iSCSI and NVMe-oF, it sure works even better with these EMC products you really ought to take a look at?; HPE helpfully mentions that only ProLiant GenX systems have NICs with deep integration into Aruba Network Analytics Engine, for a network-wide single-pane-of-glass solution)?

Is it going to be specialty outfits looking to make inroads on the strength of the efficiency their very specialized offerings provide vs. standardized commodity options (e.g. Fungible selling you first on the capabilities of their storage appliances, naturally leading to your servers using their DPUs to best take advantage of them)?

Will some combination of self-preservation on the part of software vendors that offer virtualization and orchestration mechanisms, and fear of lock-in on the part of customers, lead to an outcome where DPU vendors effectively have to strive to be a largely silent drop-in replacement for one or more of the components that customers are currently handling in software; with attempts to push development in the direction of their proprietary mechanisms largely rejected by people afraid of some DPU vendor managing to make their environment the CUDA of networking and then start licensing and pricing like they are pretty much your only option and you both know it?

Hey Patrick, you missed one of the bigger effects of Nitro, the effect on the bottom line. With Nitro offloading all this work and management overhead, the expensive CPUs that AWS buys from Intel, AMD, etc. are now 100% charged back to the customers. In a small datacenter this difference isn’t worth noticing. At the hyperscales we are talking about here, it means lots of money to invest in other things, or return to the business.