Samsung is taking an early lead with its new CXL memory module. In 2022, the industry is going to transition to PCIe Gen5 and CXL (Compute Express Link). CXL brings a new capability: memory pools can extend beyond traditional DRAM channels onto CXL buses that both CPUs and accelerators can access directly. That is a key market for the Samsung CXL Memory Expander.

Samsung CXL Memory Module

Samsung did not give exact specifications for its CXL Memory Expander beyond saying that it uses DDR5 and CXL. We did, however, get a great shot of the device.

This is an E3 2x form factor, which is intended to replace 2.5″ U.2/U.3 drive bays in the next generation of servers. The connector on the left side is a PCIe x16 connector. Since CXL runs over PCIe Gen5 (and later) lanes, we expect to see a common connector here. The same connector can be used for PCIe accelerators as well as SSDs.

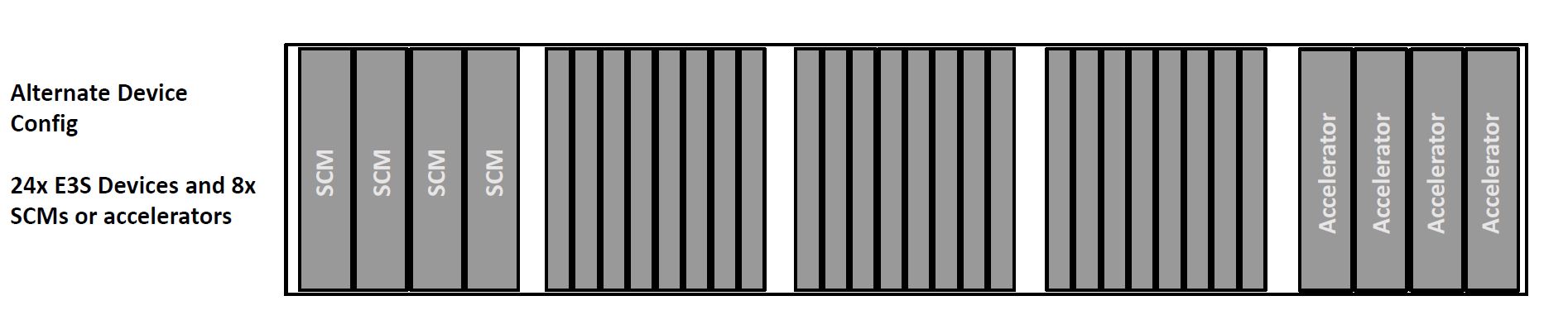

The image above shows an example with SCM (Storage Class Memory) and accelerators in some of the thicker E3 form factors. Samsung wants to add CXL-based DDR5 to the mix in these slots as well, so we will likely see volatile memory alongside SCM, accelerators, and SSDs in these bays.

Final Words

While Samsung focused on the DDR5 and CXL aspects, the bigger implication is for system design. In servers, the E3 (and to some extent E1) form factors will soon replace traditional 2.5″ drives, allowing much higher densities. With E3.L, we will see up to 44 E3 devices in a 2U server. That greatly increases SSD density while also enabling technology such as the Samsung CXL Memory Expander to put much more DDR5 into servers. Samsung is already hinting that this can enable 1TB of memory for HPC and AI applications.

Get ready for the CXL future; it is going to be very different.

Just as I said: it’s going to be very easy to install large capacities of DRAM with CXL. Once Optane no longer has a capacity advantage, Optane has no future.

That’s why Micron is moving from 3D XPoint to CXL DRAM. Persistence is not a problem for typical applications like SAP HANA that have good log-write management (they only need APS for a few minutes). Price is also not much of a problem, since software like SAP HANA usually costs much more than the memory it runs on…

Maybe I’m just old, but is anyone else getting a weird sense of déjà vu seeing memory expansion back in a form factor designed to be interoperable with storage expansion, on a bus that also handles general-purpose peripherals?

It’s like PCMCIA has come back for another go, only 30 years faster.

I hope GPUs move to this interconnect or something similar. Moving the biggest heat generator away from the mobo would be a win. Imagine if the GPUs could be mounted in the PC or server case with heat extraction in mind.