Many years ago at STH, we had a brief Cluster-in-a-Box series. This was back in ~2013 when it was just me working part-time on the site and we did not have the team and resources we have at STH today. It was also before concepts like Kubernetes were around and the idea of a full-fledged Arm server seemed far off. Today, it is time to show off a project that has been months in the making at STH: the 2021 Ultimate Cluster-in-a-Box x86 and Arm edition. We have over 1.4Tbps of network bandwidth, 64 AMD-based x86 cores, 56 Arm Cortex A72 cores, 624GB of RAM, and several terabytes of storage. It is time to go into the hardware.

Video Collaboration

This project, and more specifically its release today, is a collaboration with Jeff Geerling. I often hear him referred to as “the Raspberry Pi guy” because he does some amazing projects with Raspberry Pi platforms. While I was in St. Louis for SC21, you may have seen me at the Gateway Arch. We filmed a bit there and then Jeff dropped me off at the Anheuser-Busch plant that made a cameo in the recent Top 10 Showcases of SC21 piece. Our goal was simple: each of us would build our own vision of a cluster-in-a-box.

As a bit of background, the cluster I had was a project from late May 2021 that you may have seen glimpses of on STH. The goal was to test some new hardware in a platform that was easy to transport since I knew that the Austin move would happen soon. Over the course of the move, we never had the chance to show this box off. Jeff had the new Turing Pi 2 platform to cluster Raspberry Pis, so it seemed like a challenge. He went for the lower-cost version, while I went for what is probably one of the best cluster-in-a-box solutions you can make in 2021.

The ground rules were simple. We needed at least four Arm server nodes and it had to fit into a single box with a single power supply. To be fair, I knew Jeff’s plan before we filmed the collaboration, but he did not know what I had sitting in a box in Austin ready for this one.

For those who want to see, here is the STH video on the ultimate cluster in a box:

Here is Jeff’s video using the Turing Pi 2:

These are both great to watch to see the higher end of what is possible today along with the lower end. As always, it is best to open these in a new browser window, tab, or app for the best viewing experience.

Building the Ultimate x86 and Arm Cluster-in-a-Box

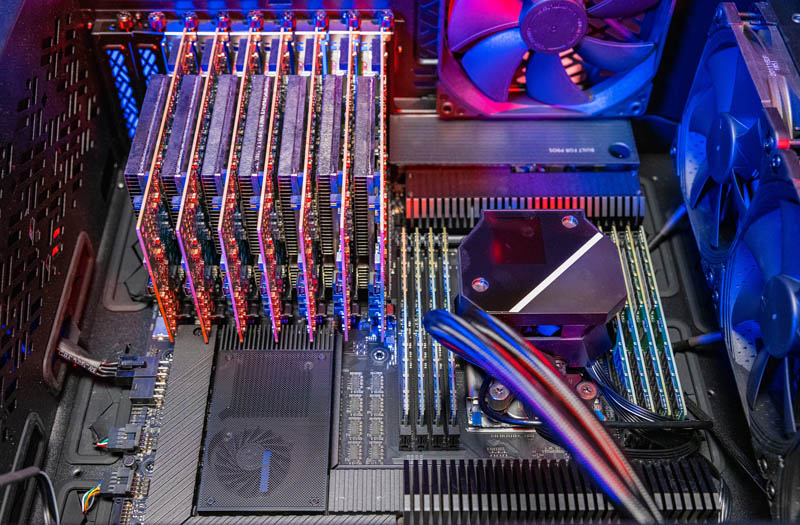

Let us get to the hardware. First, on the x86 side. For this, we are using the AMD Ryzen Threadripper Pro platform. Usually, this platform has the Threadripper PRO 3995WX that is a Rome-generation 64-core, 128 thread “WEPYC” or workstation EPYC. Sadly, the only photos I had were with the 3975WX and 3955WX in this motherboard before the cooling solution arrived.

The system is using 8x 64GB Micron DIMMs for 512GB of DDR4-3200 ECC memory. This was the platform where one DIMM decided to pull an Incubus “Pardon Me” and burst into flames. Micron, to its credit, saw that on Twitter and sent a replacement DIMM.

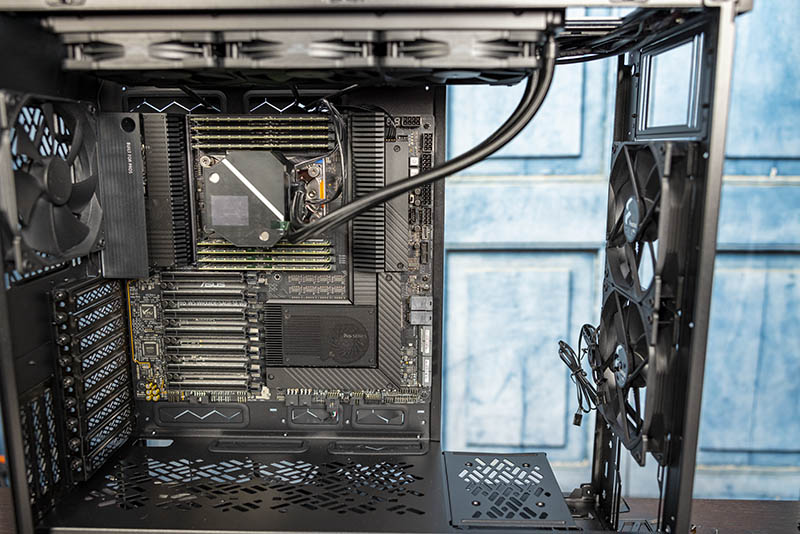

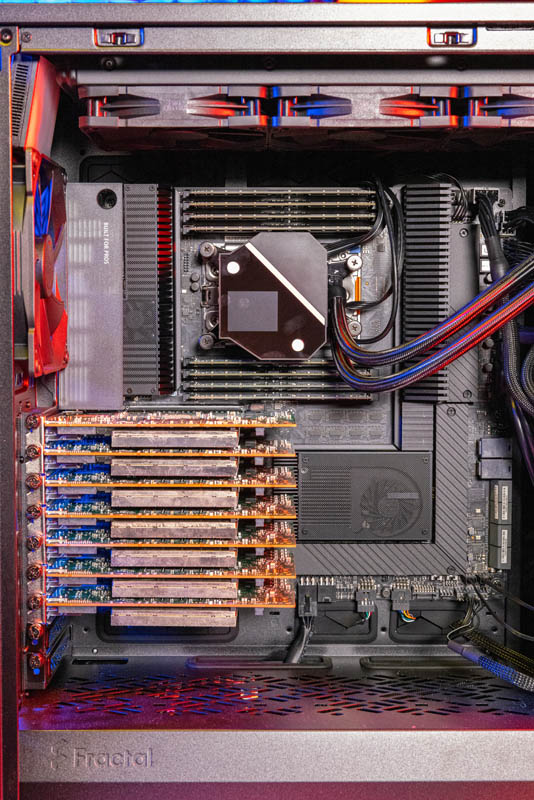

The motherboard being used is the ASUS Pro WS WRX80E-SAGE SE WiFi. This is an absolutely awesome platform for the Threadripper Pro, or basically anything, unless you are looking for a low-power and low-cost platform. This is meant for halo builds.

The motherboard is fitted in the Fractal Design Define 7 XL. This is an enormous chassis, but that is almost required given how large the motherboard is. Even with that, it was actually a more difficult install than one would imagine due to the motherboard size.

The cooling solution for the CPU is the ASUS ROG Ryujin 360 RGB AIO liquid cooler. We used this cooler because, in the grand scheme of the overall system cost, getting a slightly more interesting cooler did not seem like a big deal. It was more expensive, but in May 2021, this is also what I could get on Amazon with one-day shipping.

Here is a look with more components installed:

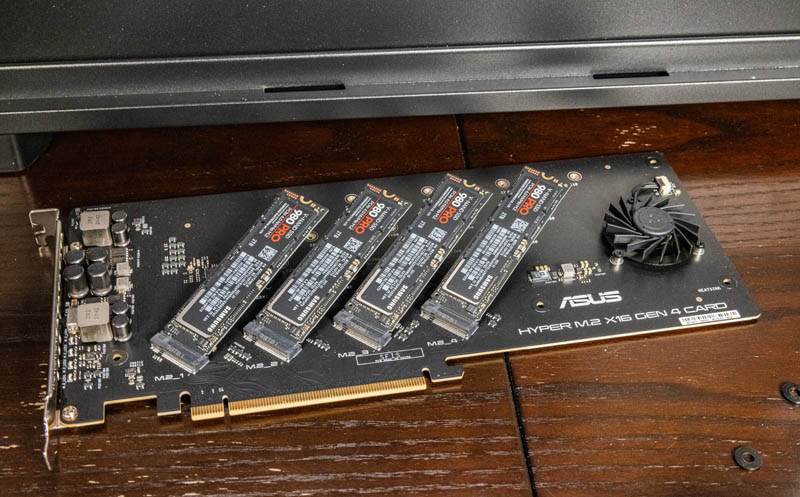

As a quick note, the original plan was for this to be a 6x DPU cluster with extra storage via Samsung 980 Pro SSDs on the Hyper M.2 x16 Gen4 card. However, if one is doing a cluster in a box, one gets more nodes by adding an extra DPU and using the onboard M.2 slots for storage. If you have seen this on STH, that is the reason. In practice, the Hyper M.2 card is what replaces the 7th DPU when the system is in everyday use.

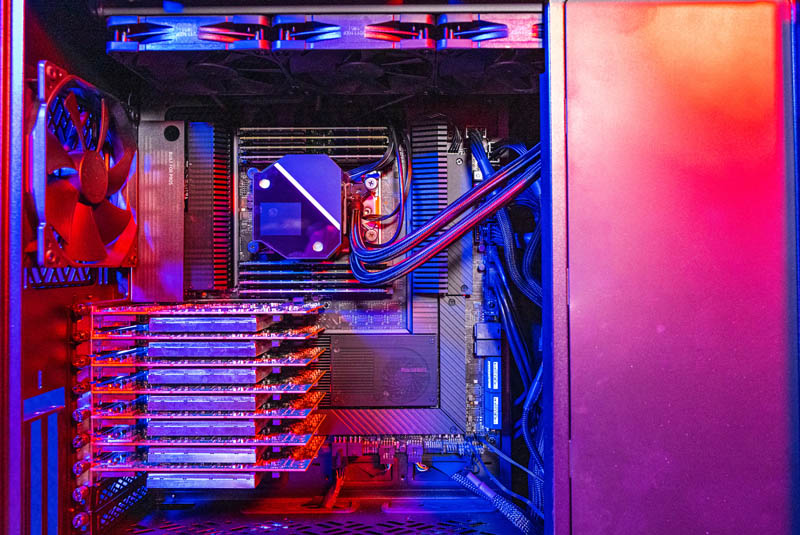

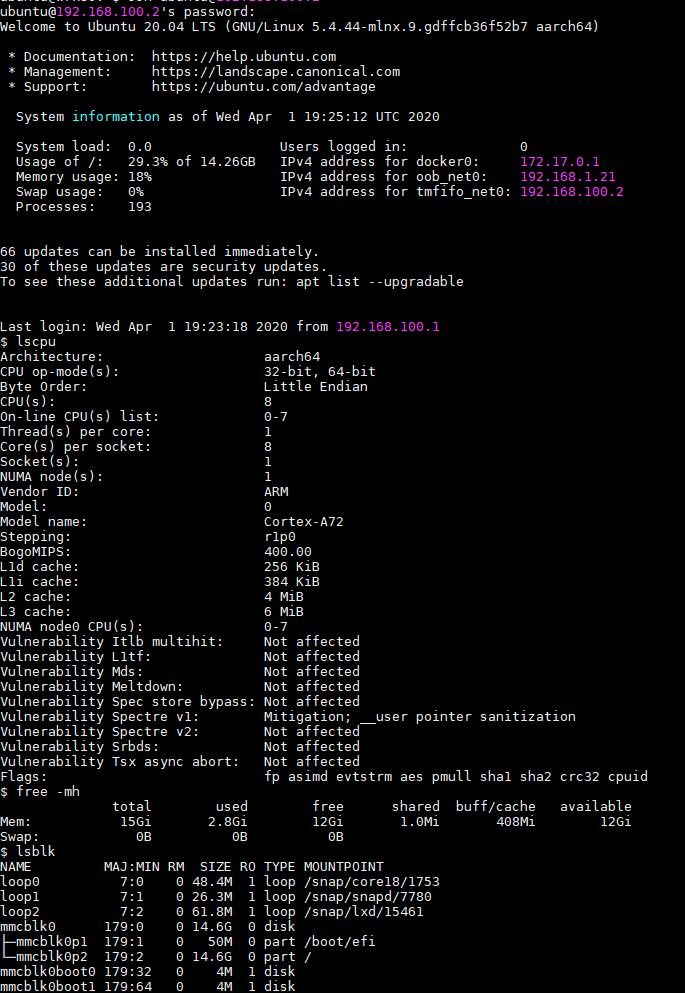

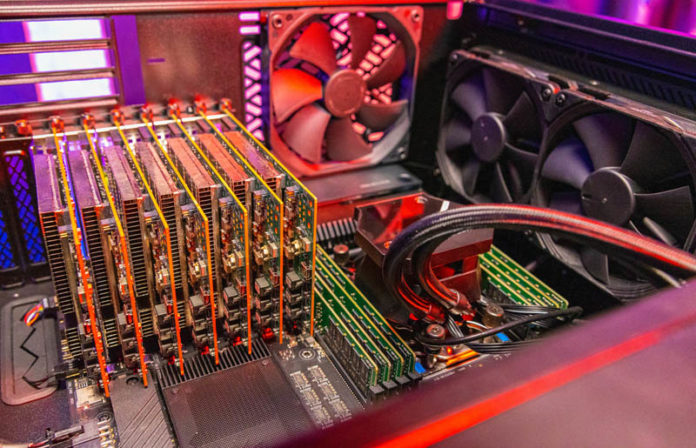

The DPUs are Mellanox NVIDIA BF2M516A units. A keen eye will note that we have two different revisions with slight differences in the system. Each card has eight Arm Cortex A72 cores running at 2.0GHz. These, unlike the Raspberry Pi, have higher-end acceleration for things like crypto offload. We recently covered Why Acceleration Matters on STH. Other important specs for these cards are that they have 16GB of memory and 64GB of onboard flash for their OS. Since STH uses Ubuntu, these cards are running Ubuntu, and the base image of our cards includes Docker so we can run containers on them out of the box.

The cards themselves have two 100Gbps network ports. Our particular cards are VPI cards. We covered what VPI means in our Mellanox ConnectX-5 VPI 100GbE and EDR InfiniBand Review. Basically, these can run either in 100GbE mode or as EDR InfiniBand. The cards can be configured in one of two ways. In the first, the Arm chip is placed as a bump-in-the-wire between the host system and the NIC ports. One may do this for firewall, provisioning, or other applications.

In the second, which is how we actually use them, both the host and the Arm CPU have access to the ports simultaneously. We do this because putting the eight Arm cores in the data path usually has a negative impact on network performance. This mode does not put the Arm CPU in the path from the host to the NIC and usually increases performance. BlueField-2 feels very much like an early product.

We are going to quickly mention that there are lower-power and low-profile 25GbE cards as well. We did not have the full-height bracket for ours, or this one may have been used.

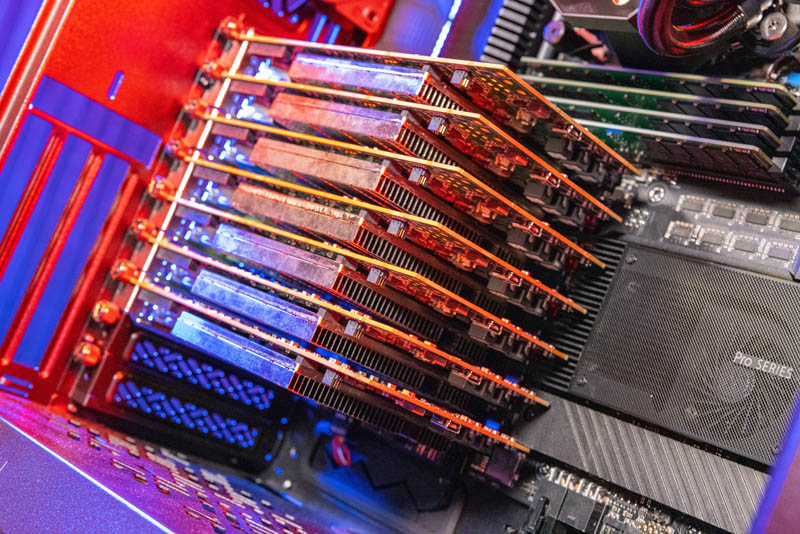

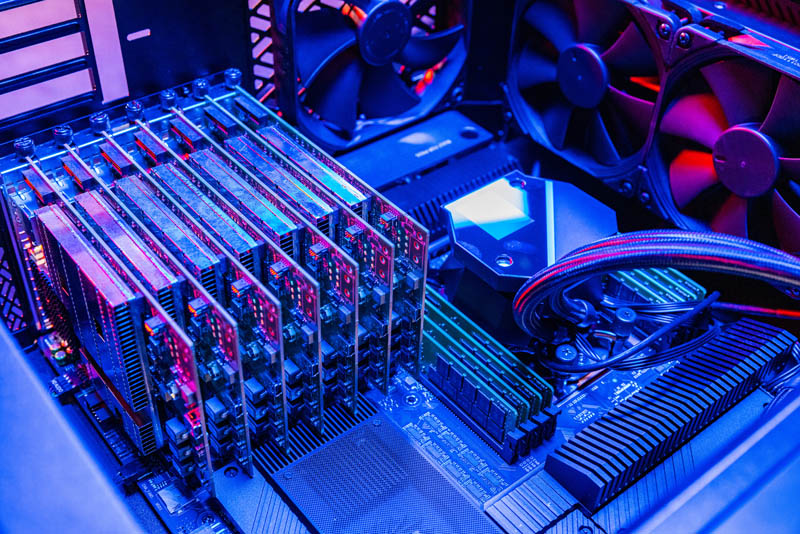

Here is a look at the seven cards stacked in the system:

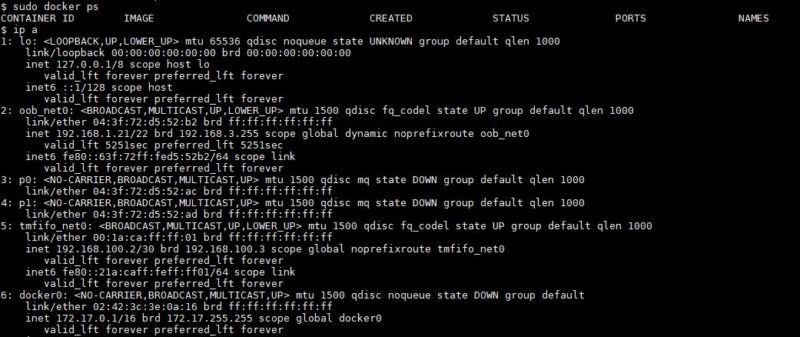

Here is the docker ps output and the network configuration on a fresh BlueField-2 card. One can see that there is also an interface back to the host system.

One other interesting point is just how much networking is on the back of this system. Here is a look:

Tallying this up:

- 2x 10Gbase-T ports (ASUS)

- 14x QSFP56/ QSFP28 100GbE ports (7x BlueField-2 cards)

- 7x Management ports (one per BlueField-2 card, plus the ASUS management interface shared on a 10G NIC port)

- WiFi 6

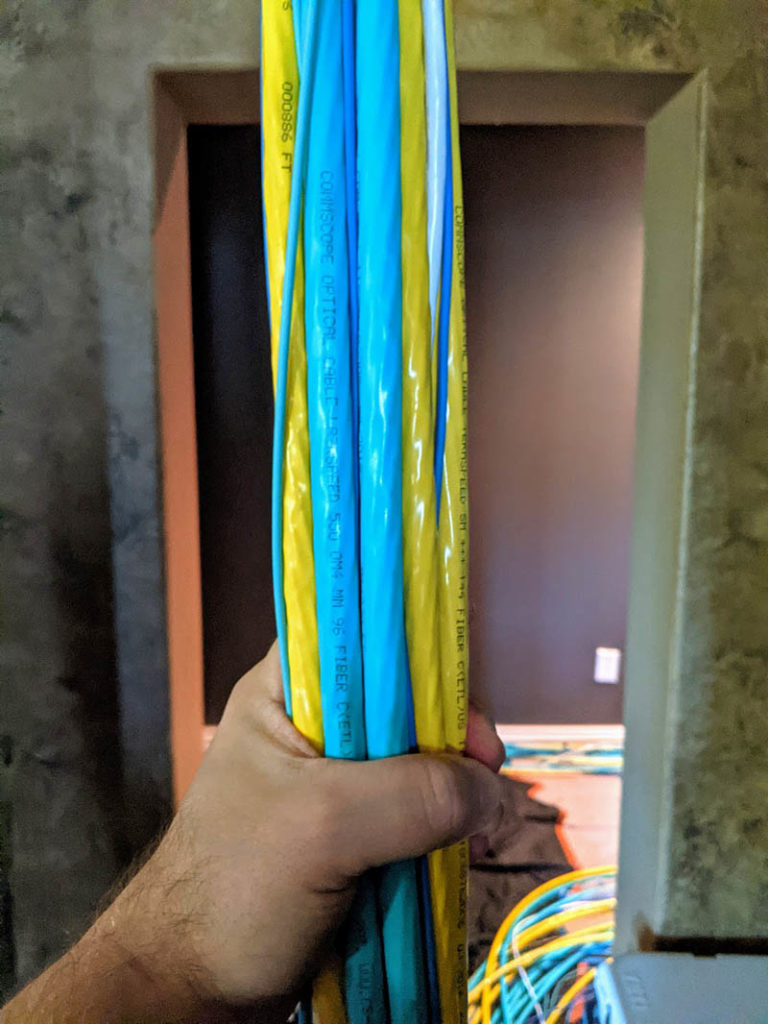

All told, we have a total of 24 network connections to make on the back of this system. That is a big reason why one of the next projects you will see on STH is running 1700 fibers through the home studio and offices.

With the mass of fiber installed, it is trivial to connect a system like this without having to put a loud and power-hungry switch in the studio.

Tallying Up the Solution

All told we have the following specs, minus the ASPEED baseboard management controller running the ASUS ASMB10-iKVM management:

Processors: 120 Cores/ 184 Threads

- 1x AMD Ryzen Threadripper Pro 3995WX with 64 cores and 128 threads

- 7x NVIDIA BlueField-2 8-core Arm Cortex A72 2.0GHz DPUs

RAM: 624GB

- 512GB Micron DDR4-3200 ECC RDIMMs

- 7x 16GB on DPU RAM

Storage: ~8.1TB

- 2x Micron 7400 3.84TB M.2 SSDs

- 7x 64GB DPU Storage

Networking: ~1.4Tbps

- 2x 10Gbase-T ports from the ASUS motherboard

- 14x 100G ports from BlueField-2 DPUs

- 8x out-of-band management ports (one shared)

- WiFi 6
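
As a quick sanity check on the totals, here is a minimal Python sketch that adds up the components listed above. The `Node` class, the `NODES` list, and the `tally` function are hypothetical names used only for illustration. The sketch assumes decimal units (1TB = 1000GB), counts only the wired 10Gbase-T and 100G ports, and assumes the Arm Cortex A72 cores have no SMT, so each core counts as one thread.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One kind of compute node in the box (illustrative structure only)."""
    name: str
    count: int         # how many of this node are installed
    cores: int         # cores per node
    threads: int       # hardware threads per node
    ram_gb: int        # RAM per node, in GB
    storage_gb: float  # storage per node, in GB (decimal)
    net_gbps: int      # wired data-port bandwidth per node, in Gbps


# Figures come from the spec list in the article; the grouping is an assumption.
NODES = [
    # Host: 64C/128T, 512GB RAM, 2x 3.84TB M.2 SSDs, 2x 10Gbase-T ports
    Node("Threadripper Pro 3995WX host", 1, 64, 128, 512, 2 * 3840, 2 * 10),
    # DPU: 8 Arm cores (1 thread each), 16GB RAM, 64GB flash, 2x 100G ports
    Node("BlueField-2 DPU", 7, 8, 8, 16, 64, 2 * 100),
]


def tally(nodes):
    """Sum each resource across all nodes, weighting by node count."""
    return {
        "cores": sum(n.count * n.cores for n in nodes),
        "threads": sum(n.count * n.threads for n in nodes),
        "ram_gb": sum(n.count * n.ram_gb for n in nodes),
        "storage_gb": sum(n.count * n.storage_gb for n in nodes),
        "net_gbps": sum(n.count * n.net_gbps for n in nodes),
    }


if __name__ == "__main__":
    totals = tally(NODES)
    print(f"Cores:      {totals['cores']}")
    print(f"Threads:    {totals['threads']}")
    print(f"RAM:        {totals['ram_gb']} GB")
    print(f"Storage:    {totals['storage_gb'] / 1000:.2f} TB")
    print(f"Networking: {totals['net_gbps'] / 1000:.2f} Tbps")
```

With these inputs the sums work out to 120 cores, 184 threads, 624GB of RAM, and 1420Gbps of wired networking, with the listed drives and DPU flash adding up to roughly 8.1TB of storage.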

There is a lot here, for sure, and this is several steps beyond the 2013 Mini Cluster in a Box series.

Final Words

Of course, this is not something we would suggest building for yourself unless you really wanted a desktop DPU workstation. This is more of an “art of the possible” build, much like the Ultra EPYC AMD Powered Sun Ultra 24 Workstation we did. Still, this is effectively well over a 10x build versus what we did using the Intel Atom C2000 series in 2013. It is also something I personally wanted to do for a long time, so that is why it is being done now.

Again, I just wanted to say thank you to Jeff for the collaboration. I am secretly jealous of the Turing Pi 2 platform since I never manage to be able to buy one. It was very fun to do this collaboration with him, and without his nudging, this project would have been delayed even more.

This is unreal cool. I miss back when you’d do more of this type of build

I’m going to vote for more small cluster builds

That video was hilarious. Oh I’m liking the build too.

Your build is more expensive, but it’s like scaling up what JG did by 20 times, and that’s more compact and usable than 20 of those pi clusters

I just wanted to say I’ve been a lurker on the site and forums for years (I think the first time I remember an STH article appearing in my searches was around 2009 or 2010). It was so fun working with Patrick on our builds, and I was definitely surprised by how much bandwidth this Threadripper-powered monstrosity can put through.

I loved the contrast of the low-end / high-end builds we did, and I hope it’s a sign of some of the fun things to come in the ARM ecosystem (or even ARM/X86 hybrid builds) over the next few years!

Does it actually keep cool under load? Esp. those 7 single slot AICs. They are going to need more of a cooling solution than several 120mm case fans blowing vaguely in their direction.

Did you manage to run linpack on the arm nodes?

Crappy “solution” in search of a problem.

Why would one need such a thing ?

One big unknown(to me): how does the tmfifo interface between the DPUs and the hosts compare to the physical network interfaces in terms of speed, latency; and CPU or other resource costs if you do load it heavily?

Is it pretty much just an alternative to using the wired out-of-band management port; and not terribly fast? Is it intended to be a match for the external 2x 100GbE interfaces to suit the bump-in-the-wire scenario and thus pretty fast indeed? Does it just let you go up to the limits of the PCIe slot, minus whatever the NICs are consuming?

If it is fast enough to be relevant is it possible for the DPUs to DMA to one another across the PCIe bus without dragging the host CPU into it; or would treating the tmfifo interfaces as an alternative to a switch for the 100GbE interfaces mean prohibitive load and/or bottlenecking as the host CPU plays software switch?

Okay, this is neat. Can you still use the 100G fiber on the x86 host or is that connectivity dedicated to the DPU when operating like this?

fuzzy and orange, those are the same questions almost. I used these at work. You don’t really use the tmfifo since you’ve got the network interfaces. You don’t need to go to the switch to hit the Arm CPUs since it’s like using MHA’s.

Really cool and unique build. I’d love to see a compact Cluster-in-a-box build that’s somewhere between “Ultimate” and a Turing Pi.

Genuinely looked like y’all both had fun doing this one

This exemplifies how terribly space-inefficient the ATX standard is. The system could handle so much more but there are only seven slots for expansion cards despite the gigantic case.

Very neat and interesting! Thank you

This looks pretty cool (not literally, given the heat). What sort of PSU are you using?

Unfortunately it doesn’t seem to be possible to actually buy those BlueField 2 cards, at least not for a homelab environment. I’d be very interested in playing with the technology.

I just bought the cards online. 850W PSU in here.

Buying the cards online doesn’t seem to be possible at least from the EU, I probably could buy them in the US and have them shipped here but customs etc. is a giant hassle.

@BB2 one use case could be containers for back-end services and storage based services for other services, with some of that storage sitting on other hosts using NVMeoF. But like others here I’m having a hard time seeing this node be able to reach anywhere near that amount of network bandwidth unless you use it as a switch or set of switches as well. Switching half the DPUs for compute accelerators could give very good utilization of everything for a very efficient scale up node.

@Patrick Kennedy: Where were you able to buy these cards? All I see is a form on NVidia’s site to have a solutions expert contact me to evaluate my project.