Recently we saw one of the more momentous shifts in marketing. NVIDIA Networking, formerly Mellanox, shifted the naming of its SmartNICs to “DPUs” or Data Processing Units. It seems like the industry is settling on calling these networking devices, which function as mini-servers themselves, DPUs, so it is time for a primer. In this piece, we are going to discuss what a DPU is. We are then going to look at a few of the companies we are covering in the space to show how there is a common feature set. Finally, we are going to briefly discuss the broader market. There is certainly a ton that can be written about this space, but we wanted to give an overview for readers who may not know what a DPU is and are hearing the term used more often.

What is a DPU: The Video

If you would rather get this perspective by listening in the background, here is a video:

Our suggestion is to open this up in a different tab and watch on YouTube versus the embed here.

What is a DPU: Background

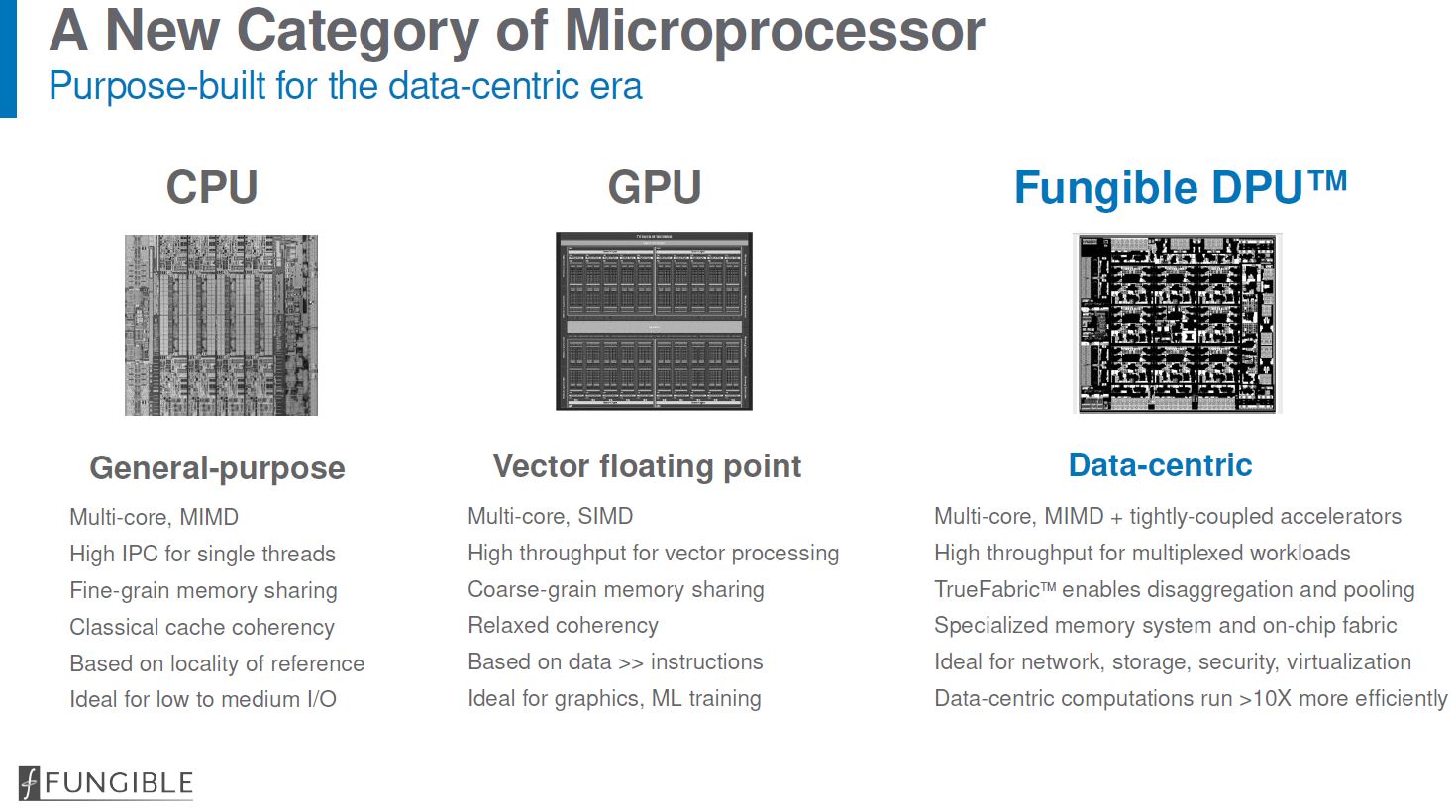

The DPU, or Data Processing Unit, is focused on moving data within the data center. While a CPU and GPU are focused on compute, the DPU is in many ways optimized for data movement.

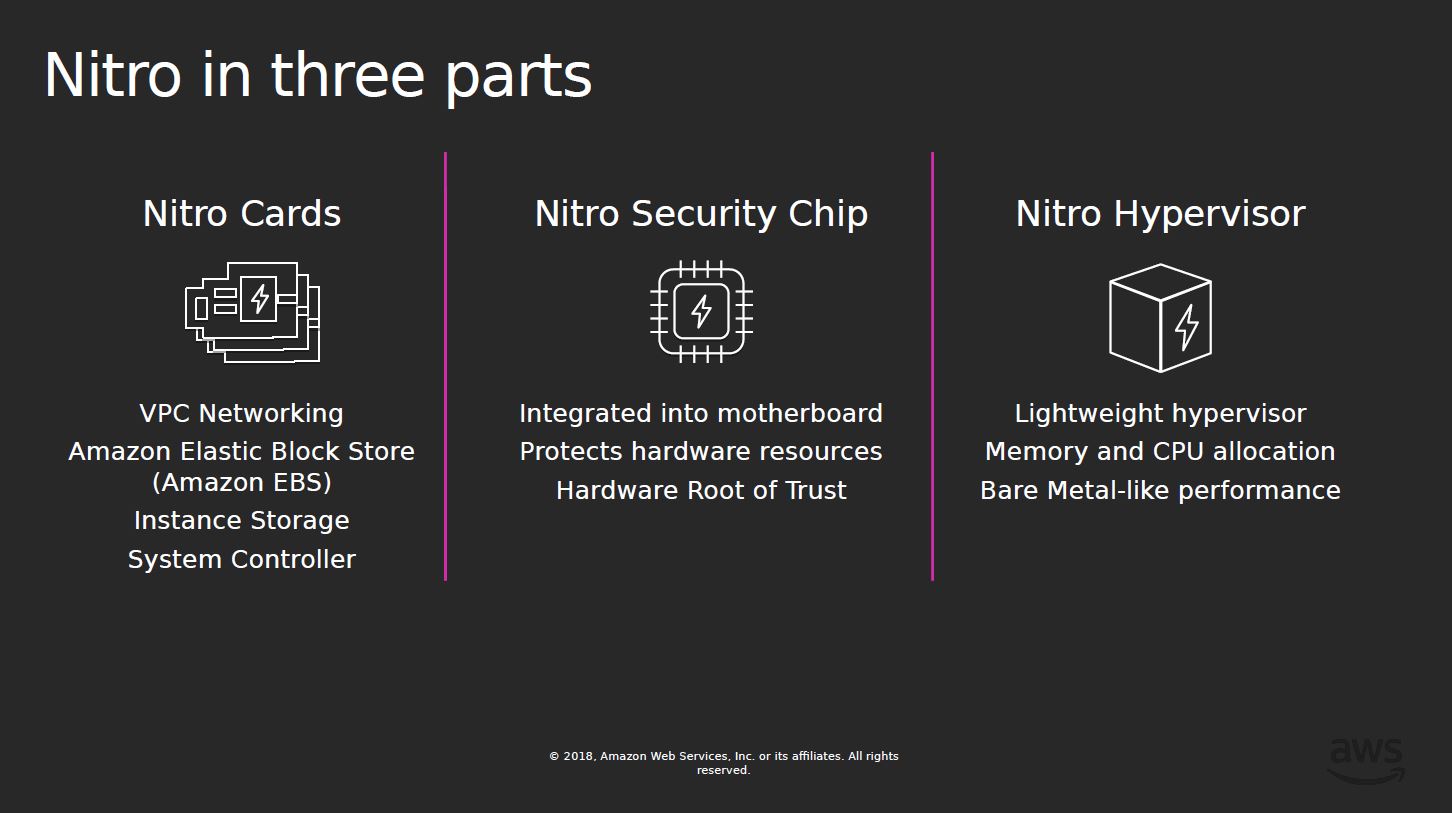

Amazon AWS was really a pioneer in this space. AWS Nitro was an early SmartNIC design that focused on bringing secure networking, storage, security, and a system controller to the AWS ecosystem.

Ever since AWS started making major headway managing systems with Nitro, iterating on its capabilities over the years, others in the industry have looked to emulate the approach and generalize it for a broader audience.

While many other features may be present on a DPU, the common features of DPU chips seem to be:

- High-speed networking connectivity (usually multiple 100Gbps-200Gbps interfaces in this generation)

- High-speed packet processing with specific acceleration and often programmable logic (P4/ P4-like is common)

- A CPU core complex (often Arm or MIPS based in this generation)

- Memory controllers (commonly DDR4 but we also see HBM and DDR5 support)

- Accelerators (often for crypto or storage offload)

- PCIe Gen4 lanes (run as either root or endpoints)

- Security and management features (offering a hardware root of trust as an example)

- Runs its own OS separate from a host system (commonly Linux, with VMware Project Monterey's ESXi on Arm as another example)
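
To make the checklist concrete, here is a minimal Python sketch that models the eight common features as flags and reports which ones a given design lacks. The class name `DpuFeatures`, its field names, and the rule that a device needs every pillar to count as a DPU are our own illustrative assumptions, not an industry definition; real DPUs often add features beyond this list.

```python
from dataclasses import dataclass, fields


@dataclass
class DpuFeatures:
    """One flag per common DPU feature (hypothetical model, not a standard)."""
    high_speed_networking: bool = False
    programmable_packet_processing: bool = False
    cpu_core_complex: bool = False
    memory_controllers: bool = False
    accelerators: bool = False
    pcie_root_and_endpoint: bool = False
    security_and_management: bool = False
    own_os: bool = False

    def missing(self) -> list[str]:
        """Names of the features this design does not provide, in field order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def is_dpu(self) -> bool:
        """Assumed rule: a device counts as a DPU only if it covers every pillar."""
        return not self.missing()


# A conventional NIC: fast ports and some offloads, but no CPU complex or own OS.
plain_nic = DpuFeatures(high_speed_networking=True, accelerators=True)
print(plain_nic.is_dpu())  # False
print(plain_nic.missing())
```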

With that basic anatomy, DPUs are able to do some interesting things.

On the networking side, secure connectivity can be offloaded to a DPU so that the host sees a NIC while additional security layers stay hidden from it. This makes a lot of sense for AWS, whose proprietary networking is something it does not necessarily want to expose to its multi-tenant systems. One can also offload OVS (Open vSwitch), firewalls, or other applications that require high-speed packet processing.
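
As a rough illustration of the host seeing only a NIC, here is a minimal sketch of the encapsulation step a DPU might perform on the host's behalf. AWS's own encapsulation is proprietary, so this assumes VXLAN (RFC 7348) purely as a well-known public stand-in: the host hands over a plain Ethernet frame, and the overlay header carrying the tenant's virtual network ID (VNI) is added and stripped on the DPU. The function names are hypothetical, and the outer Ethernet/IP/UDP headers a real implementation also needs are left out.

```python
import struct


def vxlan_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prepend an 8-byte VXLAN header to the host's Ethernet frame.

    On the wire this would ride inside UDP to destination port 4789;
    the outer headers are omitted in this sketch.
    """
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags byte 0x08 (VNI present) followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    header = struct.pack("!II", 0x08 << 24, vni << 8)
    return header + inner_frame


def vxlan_decap(payload: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header on the DPU and return (vni, inner_frame)."""
    word1, word2 = struct.unpack("!II", payload[:8])
    if not ((word1 >> 24) & 0x08):
        raise ValueError("VNI-present flag not set")
    return word2 >> 8, payload[8:]


frame = bytes(64)  # placeholder for the host's Ethernet frame
wire = vxlan_encap(frame, vni=5001)
vni, inner = vxlan_decap(wire)
print(len(wire), vni, inner == frame)  # 72 5001 True
```

The host never handles the VNI; only the DPU knows which overlay network a frame belongs to.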

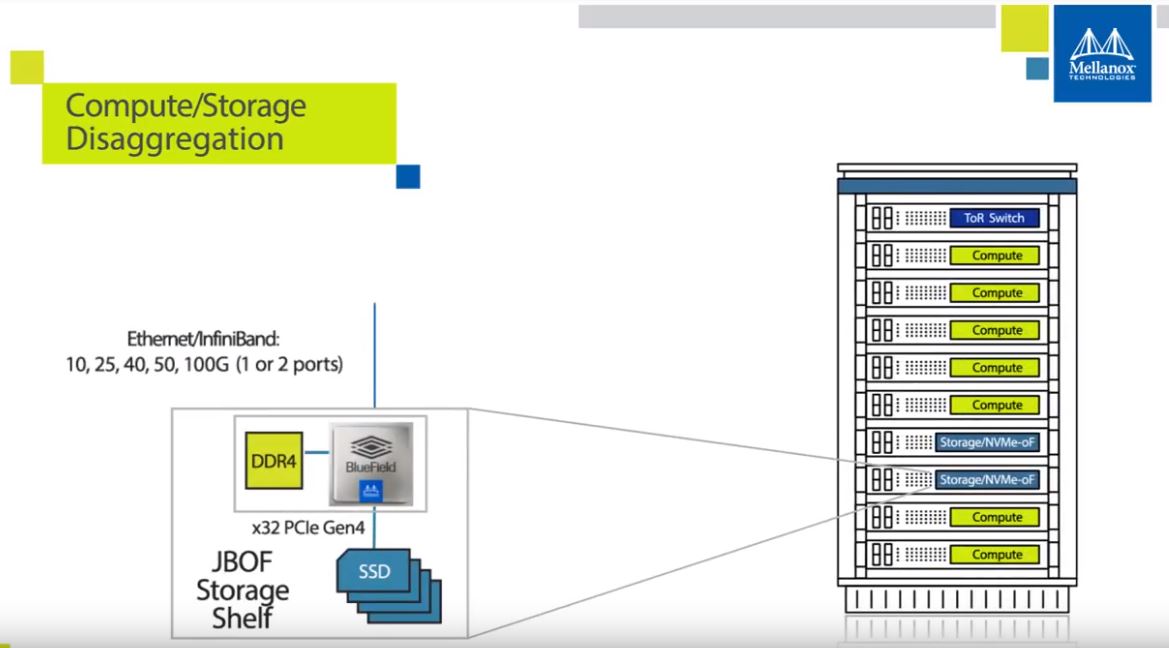

For storage, one can present the DPU in a host system as a standard NVMe device, but then on the DPU manage an NVMeoF solution where the actual target storage can be located on other servers in other parts of the data center. Likewise, since DPUs usually have PCIe root capability, NVMe SSDs can be directly connected to the DPU and then exposed over the networking fabric to other nodes in the data center, all without traditional host servers.
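
The storage case boils down to a translation table that lives on the DPU. The sketch below, with hypothetical class names, NQN, and address, assumes a simple one-to-one mapping: the host issues I/O to a local namespace ID and logical block address (LBA), and the DPU works out which NVMe-oF target, remote namespace, and remote LBA actually serve it. It is not any vendor's API, and it ignores the NVMe-oF transport itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RemoteNamespace:
    """Where an emulated namespace really lives (all values hypothetical)."""
    subsystem_nqn: str   # NVMe Qualified Name of the remote subsystem
    address: str         # transport address of the remote target
    nsid: int            # namespace ID on the remote target
    lba_offset: int = 0  # where this slice starts on the remote namespace


class EmulatedNvmeController:
    """Host-facing NVMe device whose namespaces are backed by NVMe-oF targets."""

    def __init__(self) -> None:
        self._map: dict[int, RemoteNamespace] = {}

    def attach(self, local_nsid: int, remote: RemoteNamespace) -> None:
        """Back a host-visible namespace with a remote one."""
        self._map[local_nsid] = remote

    def route(self, local_nsid: int, lba: int) -> tuple[RemoteNamespace, int]:
        """Translate a host (nsid, LBA) pair; raises KeyError if unmapped."""
        remote = self._map[local_nsid]
        return remote, remote.lba_offset + lba


ctrl = EmulatedNvmeController()
ctrl.attach(1, RemoteNamespace("nqn.2021-01.com.example:pool-a", "192.0.2.10",
                               nsid=7, lba_offset=1_000_000))
target, remote_lba = ctrl.route(1, 2048)
print(target.address, target.nsid, remote_lba)  # 192.0.2.10 7 1002048
```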

For compute, by running a separate hypervisor on the PCIe device, the x86 or GPU compute becomes just another type of resource in the data center. Instances can be built using resources at the DPU level and GPUs spanning multiple servers. Likewise, one can attach GPUs directly to DPUs to expose them to the network. The NVIDIA EGX A100 shows what that vision can be. One can also then provision an entire bare metal server with the DPU being the enclave of the infrastructure provider, and the remainder of the server being the saleable unit. A DPU can also assist in delivering and metering network, storage, and accelerator resources to that server.
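
Composing an instance from GPUs spread across servers is, at its core, an allocation problem. This is a minimal sketch assuming a hypothetical inventory of idle GPUs per server and a simple greedy policy that fills from the servers with the most free GPUs first, keeping the instance on as few hosts as possible. Real orchestration layers weigh far more (topology, bandwidth, tenancy), so treat this as an illustration only.

```python
def compose_instance(free_gpus: dict[str, int], wanted: int) -> dict[str, int]:
    """Pick `wanted` GPUs from a pool spread across servers.

    free_gpus maps a hypothetical server name to its number of idle GPUs.
    Returns {server: gpus_taken}. Raises ValueError if the pool is too small.
    """
    if wanted > sum(free_gpus.values()):
        raise ValueError("not enough free GPUs in the pool")
    plan: dict[str, int] = {}
    remaining = wanted
    # Greedy: servers with the most idle GPUs first, so fewer hosts are spanned.
    for server, free in sorted(free_gpus.items(), key=lambda kv: kv[1], reverse=True):
        if remaining == 0:
            break
        take = min(free, remaining)
        if take:
            plan[server] = take
            remaining -= take
    return plan


pool = {"node-a": 1, "node-b": 4, "node-c": 2}
print(compose_instance(pool, 5))  # {'node-b': 4, 'node-c': 1}
```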

These are just some of the easy examples of how DPUs can be used in the near term. We wanted to take a look at some of the current DPU solutions out there and show some similarities and differences.

Three Current DPU Solutions

Next, we wanted to take a brief look at the block diagrams of three solutions. First, we are going to look at the Fungible F1. Then we are going to look at the NVIDIA-Mellanox BlueField-2. Last, we are going to look at the Pensando Elba solution. These three are very different but show the basic functionality and how industry players are innovating.

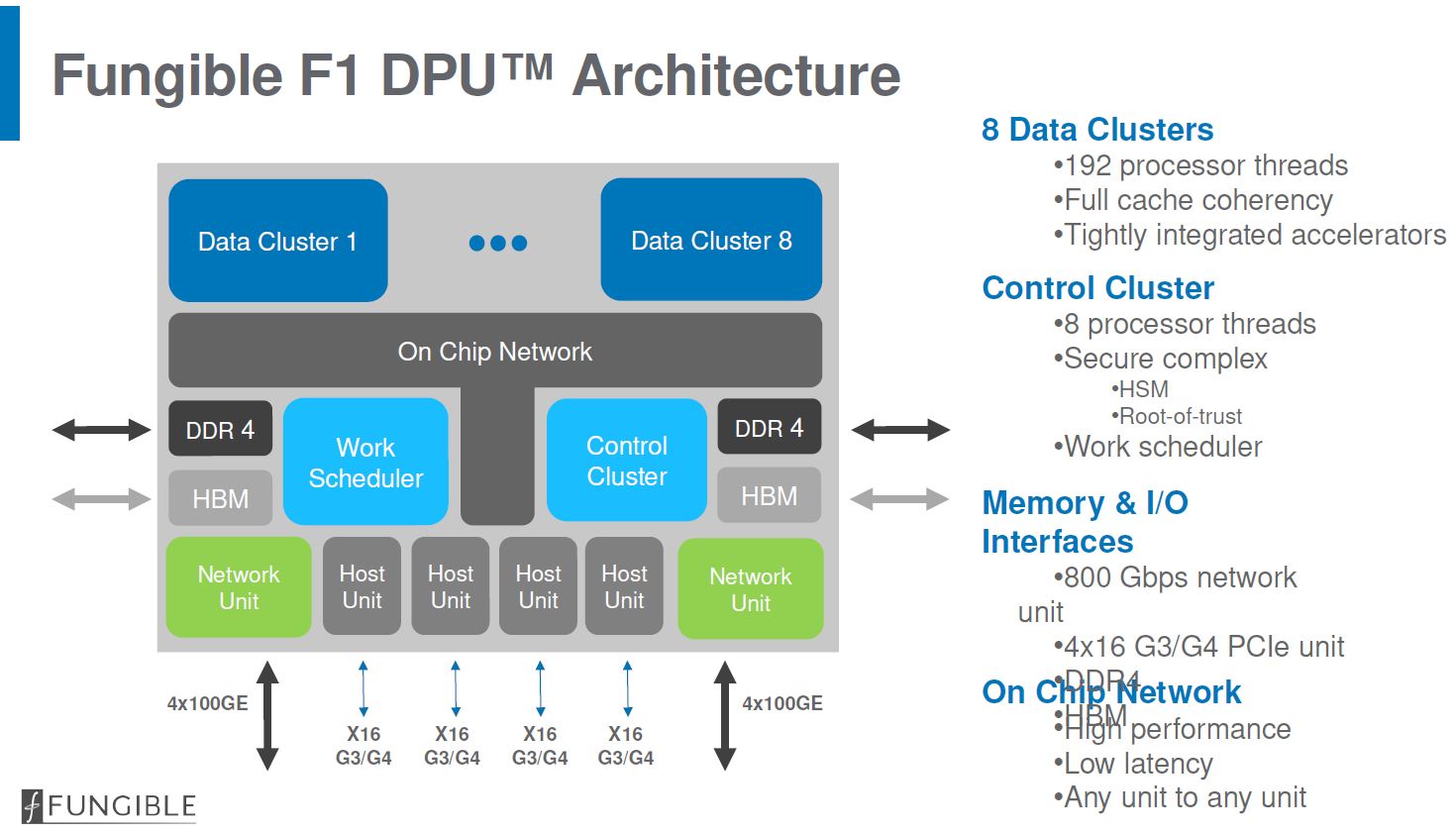

Fungible F1 DPU Block Diagram

First, we are going to start with the Fungible F1 DPU. We are going to limit this discussion to the basics of the block diagram, but if you want to read more about it, check out our Fungible F1 DPU for Distributed Compute article.

Here we can see the basic building blocks we noted above:

- High-speed networking connectivity – There are two 400Gbps network units that deliver up to 800Gbps in aggregate and can handle 8x 100GbE interfaces for high-speed networking

- High-speed packet processing with specific acceleration and often programmable logic – Fungible uses a P4-like language that does parsing, encapsulation, decapsulation, lookup and transmit/ receive acceleration

- A CPU core complex – Here Fungible is using eight data clusters of 4-way SMT MIPS-64 cores

- Memory controllers – Fungible has two DDR4 controllers as well as 8GB HBM2 support

- Accelerators – Fungible has a number of accelerators including those for data movement

- PCIe Gen4 lanes – The F1 DPU has four x16 host units that can run as either root or endpoints

- Security and management features – The F1 has a control cluster of four 2-way SMT cores with a secure enclave offering secure boot and a hardware root of trust. It also has features such as crypto engines and random number generation functions

- Runs its own OS separate from a host system – The Control Plane for the F1 DPU runs on Linux

As you can see above, the Fungible F1 has DPU features across all of the areas we identified earlier.
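
The F1 networking and host-interface figures are easy to sanity-check. This short sketch (the helper name `breakout_ports` is hypothetical) confirms that two 400Gbps network units give 800Gbps in aggregate, which is enough for 8x 100GbE at line rate, and that four x16 host units add up to 64 PCIe Gen4 lanes.

```python
def breakout_ports(aggregate_gbps: int, port_gbps: int) -> int:
    """Number of ports of a given speed an aggregate bandwidth can feed at line rate."""
    return aggregate_gbps // port_gbps


# Figures from the F1 block diagram discussion.
network_units, unit_gbps = 2, 400
host_units, lanes_per_unit = 4, 16

aggregate_gbps = network_units * unit_gbps
print(aggregate_gbps, breakout_ports(aggregate_gbps, 100))  # 800 8
print(host_units * lanes_per_unit)                          # 64
```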

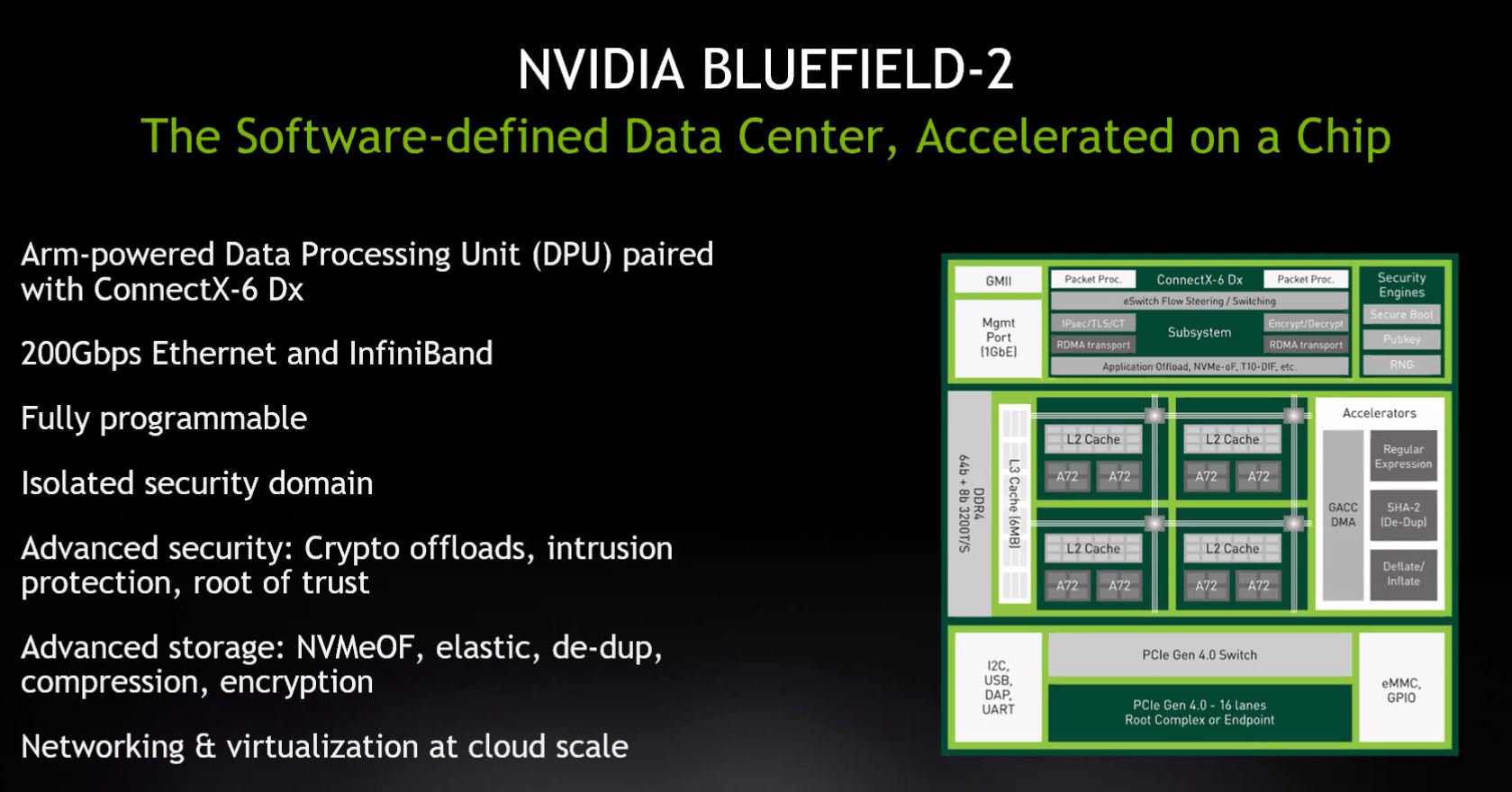

NVIDIA BlueField-2 Block Diagram

Next, let us move to the unit we have covered the most. We covered the first-generation Mellanox BlueField, including the NVMeoF SoC solution that we saw used in the MiTAC HillTop NVMeoF JBOF Storage Powered by Mellanox BlueField, as well as the Mellanox BlueField-2 launch, all before Mellanox became NVIDIA Networking.

Again let us go through the basic building blocks:

- High-speed networking connectivity – Mellanox is using ConnectX-6 Dx based networking for up to 2x 100Gbps or 1x 200Gbps Ethernet or InfiniBand

- High-speed packet processing with specific acceleration and often programmable logic – As part of this, we get many of the offload engines and eSwitch flow logic we are accustomed to in ConnectX-6 Dx solutions

- A CPU core complex – Here we have eight Arm A72 cores

- Memory controllers – Here we get DDR4-3200 memory support. Current cards have 8GB or 16GB of memory

- Accelerators – There are additional accelerators for regex, dedupe, and compression algorithms as well as crypto offloads.

- PCIe Gen4 lanes – We get a 16-lane PCIe Gen3/4 switch

- Security and management features – The control plane has features such as a hardware root of trust. These systems also have a 1GbE interface for out-of-band management, as is common for server BMCs.

- Runs its own OS separate from a host system – BlueField-2 runs many Linux distributions such as Ubuntu, CentOS, and Yocto, and we have seen VMware ESXi running on it as well.

NVIDIA BlueField-2 is probably one of the smaller solutions in this space, but it also has a number of commercially available form factors and is on its second major generation, so it probably has a leg up in terms of actual deployments. When looking at BlueField-2, we should remember that it launched before the new Fungible and Pensando chips here, so comparing them is almost like comparing different generations of DPU.
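
One useful way to read these specs is to compare host-side and network-side bandwidth. This sketch (helper name hypothetical) computes raw per-direction PCIe bandwidth after 128b/130b line encoding, the scheme used by PCIe Gen3 through Gen5, and ignores packet and protocol overhead, so real throughput will be lower. Under those assumptions, a Gen4 x16 link has headroom over BlueField-2's 2x 100Gbps of networking.

```python
def pcie_gbps(lanes: int, gt_per_s: float) -> float:
    """Raw per-direction PCIe bandwidth in Gbps after 128b/130b line encoding.

    Valid for PCIe Gen3 through Gen5; ignores TLP/DLLP protocol overhead.
    """
    return lanes * gt_per_s * 128 / 130


host_link = pcie_gbps(lanes=16, gt_per_s=16.0)  # PCIe Gen4 x16
network = 2 * 100                                # 2x 100Gbps ports
print(f"Gen4 x16 ~{host_link:.1f} Gbps vs {network} Gbps of networking")
# Gen4 x16 ~252.1 Gbps vs 200 Gbps of networking
```

Running the same function with `gt_per_s=8.0` (PCIe Gen3) gives roughly 126 Gbps, below the 200Gbps the network ports can carry.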

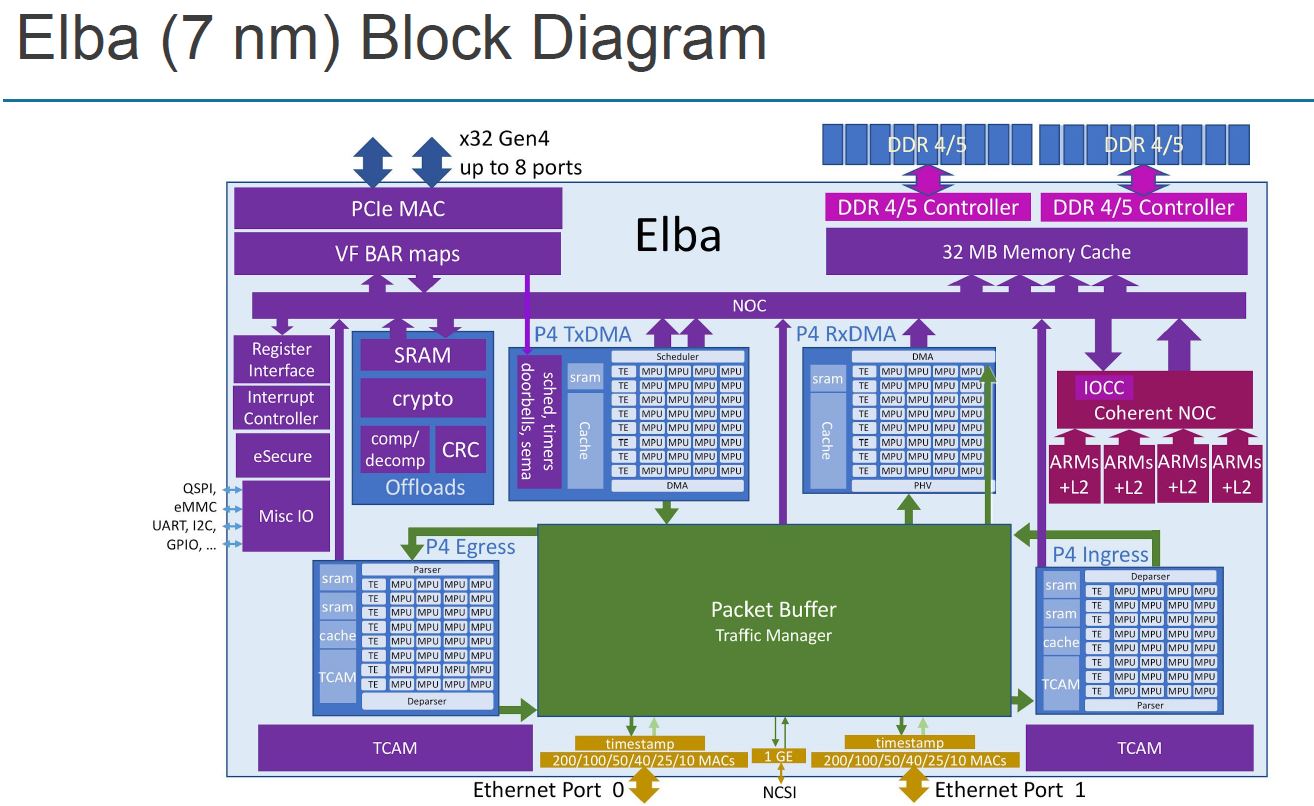

Pensando Elba Block Diagram

Pensando has a well-known team of former Cisco engineers behind it. Like the other two solutions, Elba is a new processor coming from networking-oriented folks.

Here is how the Pensando Elba design aligns to our DPU pillars:

- High-speed networking connectivity – Pensando has two 200Gbps MACs which can allow for up to 2x 200GbE networking.

- High-speed packet processing with specific acceleration and often programmable logic – The Elba DPU is utilizing a P4 programmable path to align with the popular language in the networking space.

- A CPU core complex – Here we again have a complex of sixteen Arm A72 cores

- Memory controllers – Here we get dual-channel DDR4/ DDR5 memory support with 8-64GB. The previous generation utilized HBM but Pensando is using DDR now to save costs and make the design more flexible since one can use slotted memory as well.

- Accelerators – There are additional accelerators for cryptography, compression, and data movement, among others. These are in addition to the P4 network complex

- PCIe Gen4 lanes – We get up to 32 PCIe Gen4 lanes and 8 ports (for example, for 8x x4 NVMe SSDs)

- Security and management features – The control plane has features such as a hardware root of trust. These chips also have a 1GbE NCSI interface for out-of-band management.

- Runs its own OS separate from a host system – Pensando says this runs Linux with DPDK support. VMware is also prioritizing Elba, along with NVIDIA BlueField-2, for VMware Project Monterey.
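
The Elba PCIe figures are easy to sanity-check. This sketch, with a hypothetical `bifurcate` helper, splits 32 lanes into eight x4 ports and then estimates what eight Gen4 x4 NVMe links could carry in aggregate, counting only 128b/130b encoding overhead. Real chips support only specific bifurcation modes and real SSDs deliver less than their raw link rate, so treat the numbers as upper bounds.

```python
def bifurcate(total_lanes: int, lanes_per_port: int) -> list[range]:
    """Split a block of PCIe lanes into equal-width ports (one lane range per port)."""
    if total_lanes % lanes_per_port:
        raise ValueError("lanes do not divide evenly into ports")
    return [range(start, start + lanes_per_port)
            for start in range(0, total_lanes, lanes_per_port)]


ports = bifurcate(32, 4)
print(len(ports), list(ports[0]), list(ports[-1]))  # 8 [0, 1, 2, 3] [28, 29, 30, 31]

# One PCIe Gen4 x4 link: 4 lanes x 16 GT/s, minus 128b/130b encoding only.
x4_gbps = 4 * 16.0 * 128 / 130
print(round(len(ports) * x4_gbps))  # 504
```

That is more than the 400Gbps of 2x 200GbE networking, so in this simplified view, eight directly attached drives could keep the network ports busy.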

For those wondering why Pensando is making such a big deal about P4, especially if you are coming from the server/ storage space instead of networking, P4 is very common in programmable switches, for example. We have been following the Innovium TERALYNX products that also use P4, which hopefully can help draw some connections.
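
For readers coming from the server side, the core idea behind P4 is the match-action table: parse header fields, look up a key, and run the action attached to the matching entry. The following is a conceptual Python model of one exact-match stage, not P4 code; the names are hypothetical, and real P4 targets also support other match kinds (such as longest-prefix and ternary) and compile tables down to hardware.

```python
from dataclasses import dataclass, field
from typing import Callable

Packet = dict[str, object]           # parsed header fields, e.g. {"dst_ip": ...}
Action = Callable[[Packet], Packet]


def set_egress(port: int) -> Action:
    """Build an action that forwards the packet out of `port`."""
    def action(pkt: Packet) -> Packet:
        return {**pkt, "egress_port": port}
    return action


def drop(pkt: Packet) -> Packet:
    """Action that marks the packet to be dropped."""
    return {**pkt, "drop": True}


@dataclass
class ExactMatchTable:
    """One exact-match stage in the style of a P4 match-action table."""
    key: str
    default_action: Action = drop
    entries: dict[object, Action] = field(default_factory=dict)

    def add(self, value: object, action: Action) -> None:
        """Install a table entry (the control plane's job on a DPU)."""
        self.entries[value] = action

    def apply(self, pkt: Packet) -> Packet:
        """Look up the packet's key field and run the matching action."""
        return self.entries.get(pkt.get(self.key), self.default_action)(pkt)


fwd = ExactMatchTable(key="dst_ip")
fwd.add("10.0.0.2", set_egress(1))
print(fwd.apply({"dst_ip": "10.0.0.2"}))  # {'dst_ip': '10.0.0.2', 'egress_port': 1}
print(fwd.apply({"dst_ip": "10.9.9.9"}))  # {'dst_ip': '10.9.9.9', 'drop': True}
```

The split mirrors how these devices work in practice: software on the control plane installs entries, while the data path only performs lookups and actions.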

Final Words

The DPU is the opportunity for all of the teams who have worked on the networking side of the data center world to get a seat at the table inside servers with their own processor. Many of these networking teams see data centers, somewhat simplistically, as data flows between where data is stored and where it is processed, along with how to route that traffic. It is little wonder that DPU solutions are being spearheaded by teams accustomed to data center networking infrastructure.

We will quickly note that companies like Intel and Xilinx are coming out with offerings in this space as well. Their products have been used in many early SmartNIC deployments, especially where FPGA fabrics served as flexible acceleration tools. Unlike most of the solutions mentioned above (BlueField being the exception), we have even had hands-on time with one of these: a Supermicro SYS-1019P-FHN2T with an Intel PAC N3000 included.

In our estimate, we are still in the early days of the DPU. While the PCIe Gen4 DPUs that we are seeing today are surely interesting, the 2022 generation has the potential to be disruptive. As we covered in The 2021 Intel Ice Pickle: How 2021 Will be Crunch Time, we see the PCIe Gen5 and CXL generations of servers being transformational with the ability to do load/store operations across devices. One can easily see how a DPU would immediately benefit from being able to directly access GPU memory, an SSD's cache DRAM, or persistent memory modules in a CPU socket. What we have today are DPUs a generation or two before that becomes a reality, but the folks making DPUs today are clearly positioning current products for that future server architecture.
