Getting the NVIDIA BlueField-2 DPU Ready

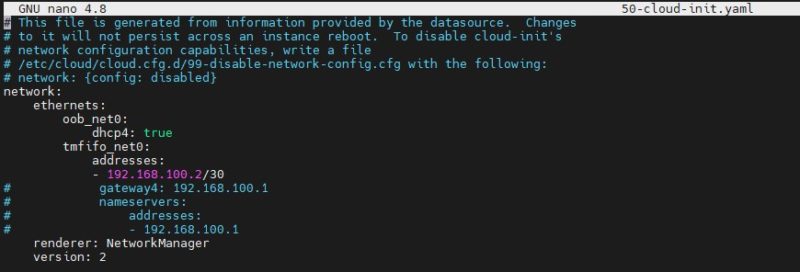

BlueField-2 DPUs have many quirks. One of them is just how many ways there are to get access. We have both 25GbE and 100GbE versions from early 2021, and all of them are set up similarly from the factory. NVIDIA is rapidly iterating on the software stack, so this may change. Still, out of the box one can use serial/USB to configure the DPUs, or use a network interface. The oob_net0 interface, which connects to the DPU’s out-of-band management port, is the one we are after, and on our units it defaults to dhcp4 for an address. Perfect!

The challenge is tmfifo_net0, the interface used to talk to the host machine over PCIe. For many, this is not the interface you will want to use. Its gateway setting means the DPU will default to routing network traffic through the host machine, and that is not what we want. There is an out-of-band management interface, so we want to use that. A quick trick is to edit the netplan configuration and comment out the gateway/nameservers lines for tmfifo_net0, and then everything will work.
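For readers who want to see what that netplan change looks like, here is a minimal sketch. It runs against a throwaway sample file rather than the real configuration, and the file layout, the addresses, and the use of the gateway4 key are our assumptions for illustration. Check the actual file under /etc/netplan on your card before touching anything.

```shell
#!/bin/sh
# Sketch only: edits a temporary sample file, not the DPU's real netplan config.
set -eu

sample="$(mktemp)"
cat > "$sample" <<'EOF'
network:
  version: 2
  ethernets:
    oob_net0:
      dhcp4: true
    tmfifo_net0:
      addresses: [192.168.10.2/30]   # illustrative address
      gateway4: 192.168.10.1         # illustrative gateway
      nameservers:
        addresses: [192.168.10.1]
EOF

# Within the tmfifo_net0 stanza only, comment out gateway4 and the
# nameservers block. Interface names sit at a four-space indent here.
awk '
  /^    [A-Za-z0-9_]+:/ { in_tmfifo = ($1 == "tmfifo_net0:"); in_ns = 0 }
  in_tmfifo && /^      gateway4:/    { print "#" $0; next }
  in_tmfifo && /^      nameservers:/ { in_ns = 1; print "#" $0; next }
  in_ns && /^        /               { print "#" $0; next }
  { in_ns = 0; print }
' "$sample" > "$sample.new"

cat "$sample.new"
```

On a real card, the same edit can simply be made by hand in a text editor, followed by sudo netplan apply to activate it.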

With that change, we can still ssh into the card on 192.168.10.2, but we are now basically using the OOB management port to access the DPU. You can also remove the tmfifo interface if you do not want it for security reasons.

The next step is always to update. This is Ubuntu 20.04 LTS on the card, and it uses sh as the default shell (which sucks, please give us bash as a default, NVIDIA). Docker is also pre-installed. So what we can do now is update the DPU using apt-get update and apt-get upgrade. Software evolves rapidly, so this is a step I highly suggest. Please note that this is unlikely to be a 30-second process. These are Arm Cortex-A72 cores, so go grab a coffee. With our little fleet of machines, one of the top two reasons we have had issues updating is that the clock had reset to 1970, so none of the repos were seen as valid. We usually just use timedatectl to set the time properly.
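Since the 1970 clock issue has bitten us more than once, a quick sanity check before updating can save some head-scratching. The sketch below is our own helper, not an NVIDIA tool. The clock_looks_sane function name and the 2021-01-01 threshold are arbitrary choices for illustration.

```shell
#!/bin/sh
# Sketch: check that the clock is not obviously wrong before running apt.
# MIN_EPOCH is an arbitrary sanity threshold (2021-01-01 00:00:00 UTC).
MIN_EPOCH=1609459200

clock_looks_sane() {
  # $1: seconds since the epoch; defaults to the current time
  now="${1:-$(date +%s)}"
  [ "$now" -ge "$MIN_EPOCH" ]
}

if clock_looks_sane; then
  echo "clock OK: run 'sudo apt-get update && sudo apt-get upgrade'"
else
  echo "clock is before 2021; fix it first, for example with one of:"
  echo "  sudo timedatectl set-ntp true"
  echo "  sudo timedatectl set-time 'YYYY-MM-DD HH:MM:SS'"
fi
```

Note that timedatectl set-time is refused while NTP synchronization is active, so pick one approach or the other.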

Something we are going to quickly note is that we are using Ubuntu. NVIDIA has a set of CentOS 8 images that are basically not recommended now that IBM-Red Hat is bidding farewell to CentOS. There are also RHEL and Debian images. There are tools to roll your own OS, and that may be easier than updating everything on these DPUs, especially if you have an older card.

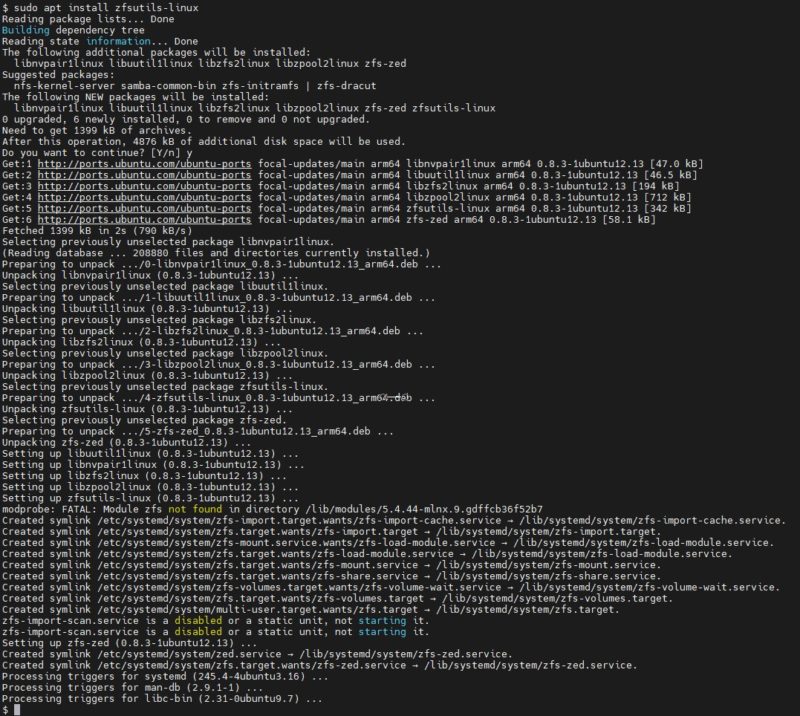

The next step is that you can install ZFS using sudo apt install zfsutils-linux and it works very easily here:

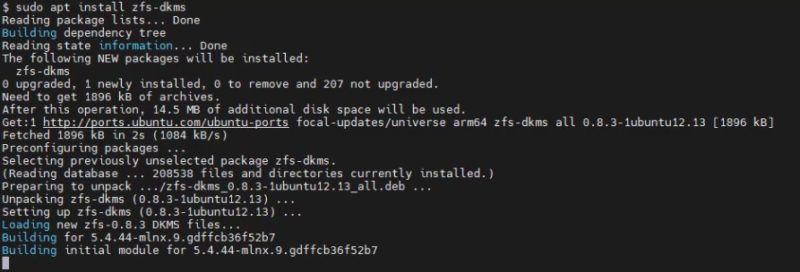

Although this is Ubuntu, since this is the Arm/BlueField version, we also need to install zfs-dkms via sudo apt install zfs-dkms. As you can see here:

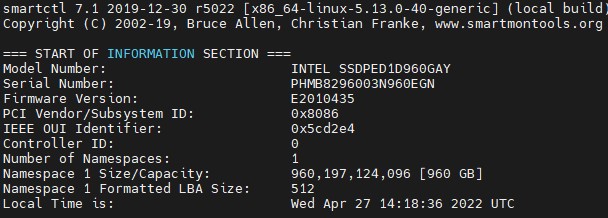

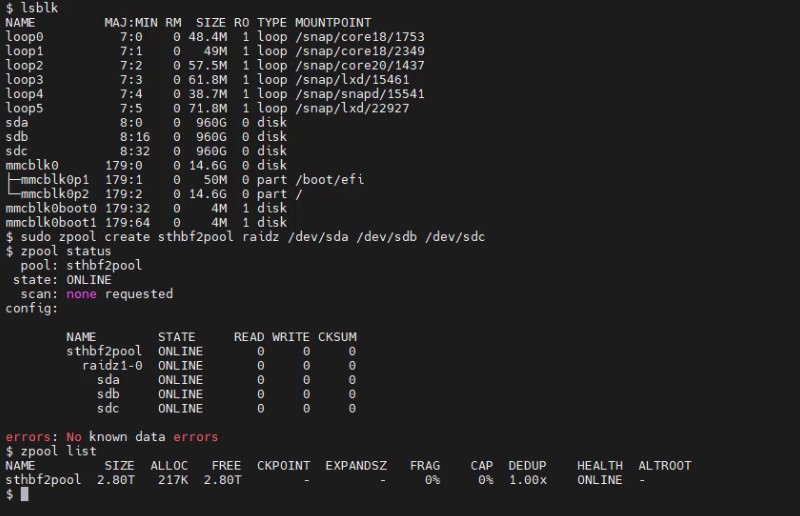
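If you want to confirm both pieces landed before building a pool, a small check like the following can help. This is a sketch of our own. The zfs_status function name is made up, and it only looks for the zpool binary and the loaded kernel module.

```shell
#!/bin/sh
# Sketch: report whether the ZFS userland tools and kernel module are present.
zfs_status() {
  if command -v zpool >/dev/null 2>&1; then
    echo "userland: zpool found"
  else
    echo "userland: missing (sudo apt install zfsutils-linux)"
  fi
  # /sys/module/zfs exists only while the zfs kernel module is loaded
  if [ -d /sys/module/zfs ]; then
    echo "kernel module: loaded"
  else
    echo "kernel module: not loaded (sudo apt install zfs-dkms, then sudo modprobe zfs)"
  fi
}

zfs_status
```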

Now we are going to use the three 960GB add-in-card SSDs in the AIC JBOX to create a little ZFS RAID array.

We are going to take our 3x 960GB SSDs and make sthbf2pool (yes that is STH BlueField-2 pool) using zpool create raidz. Some may pick up on the little extra credit work that is going on here. Again, this is being done to show a concept, not as a production array so we are keeping this simple.
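For reference, the basic shape of that command looks roughly like the sketch below. The device paths are hypothetical placeholders, since names vary by system, and the script only prints the command rather than running it.

```shell
#!/bin/sh
# Sketch: build (and only print) the zpool command for a three-disk RAID-Z pool.
# The device paths are hypothetical; list yours with lsblk before running anything.
POOL=sthbf2pool
DISKS="/dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1"

create_cmd="zpool create $POOL raidz $DISKS"
echo "would run: sudo $create_cmd"
echo "then check: sudo zpool status $POOL"
```

With single-parity RAID-Z across three 960GB drives, roughly two drives' worth of space (about 1.92TB before ZFS overhead) ends up usable, with the remainder going to parity.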

So at this point, our BlueField-2 DPU has three SSDs attached and a RAID-Z array built on them. It is also not using the host x86 system here; this is all being done on the DPU’s Arm cores. This is a ZFS RAID array being run completely on BlueField.

very nice piece of writing.

now i understand the concept of DPU.

waiting for next part.

You’ve sold me on DPU for some network tasks, Patrick.

This article… two DPUs to export three SSDs. And, don’t look over there, the host server is just, uh, HOSTING. Nothing to see there.

How much does a DPU cost again?

Jen Sen should just bite the bullet and package these things as… servers. Or y’all should stop saying they do not require servers.

This is amazing. I’m one of those people that just thought of a DPU as a high-end NIC. It’s a contorted demo, but it’s relatable. I’d say you’ve done a great service

Okay, that was fun. Does nVidia have tools available to make their DPUs export storage to host servers via NVMe (or whatever) over PCIe? Could you, say, attach Ceph RBD volumes on the Bluefield and have them appear as hardware storage devices to host machines?

Chelsio is obviously touting something similar with their new boards.

SNAP is the tools and there’s DOCA. Don’t forget that this is only talkin’ about the storage, not all the firewalls that are running on this and it’s not using VMware Monty either.

Scott: Yup! one of the BlueField-2’s primary use cases is “NVMEoF storage target” – there are a couple of SKUs explicitly designed for that purpose, which don’t need a PCIe switch or any weird fiddly reconfiguration to directly connect to an endpoint device (though it’d be a bit silly to only attach 4 PCIe 4.0 x4 SSDs to it!)

Scott: Ah, just realized I misread – but yes, that’s one of the *other* primary use cases for BF2, taking remote NVMEoF / other block devices and presenting them to the host system as a local NVMe device. This is how AWS’s Nitro storage card works, they’re just using a custom card/SoC for it (the 2x25G Nitro network and storage cards use the same SoC as the MikroTik CCR2004-1G-2XS-PCIe, so that could be fun…)

I think that MikroTik uses an Annapurna chip, but it’s more like the ones they use for disk storage, not the full Nitro cards being used today. It doesn’t have enough bandwidth for a new Nitro card.

I would like to see a test of the crypto acceleration – does it work with Linux LUKS?

Hope we will arrive at two DPUs both seeing the same SSDs. Then ZFS failover is possible and you could export ZFS block devices however you like or do an NFS export. Endless possibilities like, well, a server. The real power of a DPU only comes into play when the offload functionality comes into play.

Did you need to set any additional mlxconfig options to get this to work?

Any other tweaks required to get the BlueField to recognize the additional NVMe devices?