At GTC 2021, we already covered a number of GPU-related announcements in our NVIDIA A10 A16 A4000 and A5000 Launched piece, but we did not cover the new NVIDIA A30 there. We held it back because the A30 is a card we find particularly fascinating, so in this article we wanted to discuss it on its own.

NVIDIA A30 Data Center GPU

What makes the NVIDIA A30 so interesting is that it is effectively a lower-performance NVIDIA A100. The A100 is the company's flagship, but not every data center can handle, or needs, 250-500W GPUs (from the A100 PCIe to the 80GB SXM4). NVIDIA's other new cards, such as the A16, are a largely different style of card. With the A30, we instead get something closer to a pared-down A100.

Here are the key specs for the NVIDIA A30 GPU:

| Specification | NVIDIA A30 |
|---|---|
| Peak FP64 | 5.2 TF |
| Peak FP64 Tensor Core | 10.3 TF |
| Peak FP32 | 10.3 TF |
| TF32 Tensor Core | 82 TF / 165 TF* |
| BFLOAT16 Tensor Core | 165 TF / 330 TF* |
| Peak FP16 Tensor Core | 165 TF / 330 TF* |
| Peak INT8 Tensor Core | 330 TOPS / 661 TOPS* |
| Peak INT4 Tensor Core | 661 TOPS / 1321 TOPS* |
| Media engines | 1 optical flow accelerator (OFA); 1 JPEG decoder (NVJPEG); 4 video decoders (NVDEC) |
| GPU memory | 24GB HBM2 |
| GPU memory bandwidth | 933GB/s |
| Interconnect | PCIe Gen4: 64GB/s; third-gen NVIDIA NVLink: 200GB/s** |
| Form factor | 2-slot, full height, full length (FHFL) |
| Max thermal design power (TDP) | 165W |
| Multi-Instance GPU (MIG) | 4 MIGs @ 6GB each; 2 MIGs @ 12GB each; 1 MIG @ 24GB |
| Virtual GPU (vGPU) software support | NVIDIA AI Enterprise for VMware; NVIDIA Virtual Compute Server |

\* With sparsity

\*\* NVLink Bridge for up to two GPUs
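The starred Tensor Core figures in the spec table are the with-sparsity numbers. As a quick sanity check, here is a minimal sketch, assuming those figures reflect Ampere's 2:4 structured sparsity, which NVIDIA rates at twice the dense Tensor Core peak. The dictionary name `TENSOR_PEAKS` and the helper `sparsity_speedup` are hypothetical, introduced only for illustration; the numbers are copied from the table.

```python
# Dense vs. with-sparsity Tensor Core peaks, copied from the A30 spec table.
# Assumption: the starred (sparse) figures come from Ampere's 2:4 structured
# sparsity, rated at 2x the dense peak. Names here are hypothetical helpers.
TENSOR_PEAKS = {
    "TF32 (TF)": (82, 165),
    "BFLOAT16 (TF)": (165, 330),
    "FP16 (TF)": (165, 330),
    "INT8 (TOPS)": (330, 661),
    "INT4 (TOPS)": (661, 1321),
}


def sparsity_speedup(dense: float, sparse: float) -> float:
    """Return the ratio of the with-sparsity peak to the dense peak."""
    return sparse / dense


for precision, (dense, sparse) in TENSOR_PEAKS.items():
    ratio = sparsity_speedup(dense, sparse)
    # Published figures are rounded, so expect roughly 2.0x, not exactly 2.0x.
    print(f"{precision:<14} {dense:>5} -> {sparse:>5}  ({ratio:.2f}x)")
```

Every row lands within rounding distance of 2x, which is why the sparse column reads as a simple doubling of the dense one. Real workloads only see that gain when the model's weights have actually been pruned to the 2:4 pattern.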

Unlike the new A4000 and A5000 GPUs, this card has no display outputs, and unlike the A10, it is a dual-slot model. With a 165W TDP spread across two slots, a system effectively only needs to cool 82.5W per slot.

The A10 is an enormous jump over the T4 in single-slot GPU power consumption. The A30 does not offer the T4's low-profile, single-slot form factor, but its TDP per slot is largely similar to what we had in the T4 generation.
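The per-slot comparison above is simple division of board TDP by the number of slots a card occupies. A minimal sketch follows. The A30 (165W, two slots) and A100 PCIe (250W) figures come from this article; the T4 (70W, single slot), A10 (150W, single slot), and A100 PCIe dual-slot width are NVIDIA's published board specs, added here as assumptions for comparison. The `CARDS` table and `watts_per_slot` helper are hypothetical names for illustration.

```python
# Per-slot cooling load = board TDP / number of PCIe slots the card occupies.
# A30 and A100 PCIe TDPs are from the article; T4 and A10 values (and slot
# widths) are assumed from NVIDIA's published board specs.
CARDS = {
    "T4": (70, 1),
    "A10": (150, 1),
    "A30": (165, 2),
    "A100 PCIe": (250, 2),
}


def watts_per_slot(tdp_w: float, slots: int) -> float:
    """Return the TDP a chassis must dissipate per occupied slot."""
    return tdp_w / slots


for name, (tdp, slots) in CARDS.items():
    print(f"{name:<10} {tdp:>4}W over {slots} slot(s) -> {watts_per_slot(tdp, slots):.1f}W/slot")
```

This is only a first-order view: actual cooling also depends on airflow direction, heatsink design, and how many cards share the same chassis zone.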

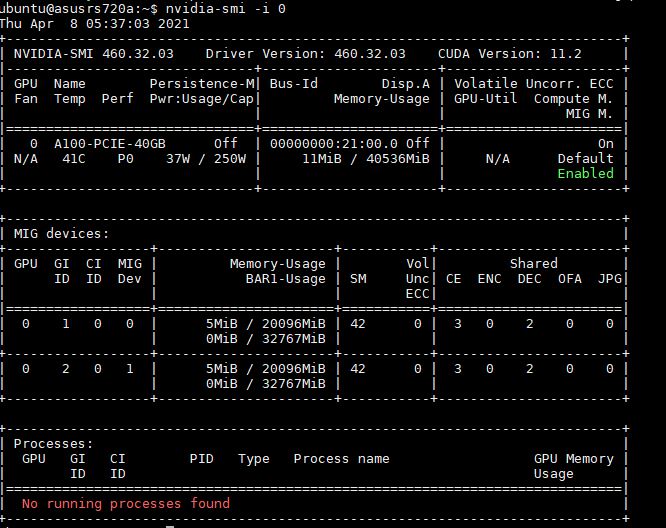

Furthermore, this is an Ampere-generation part with HBM2 memory, which places it toward the higher-performance end of the spectrum. For those who want to replace a T4, or even two T4s, with a newer card for inferencing, the other interesting feature is MIG (Multi-Instance GPU) support. Each A30 can be a single 24GB GPU, or it can present itself as two 12GB GPUs or four 6GB GPUs. We showed MIG at work in our recent ASUS RS720A-E11-RS24U review, where we split an A100 into two 20GB instances.

The lower TDP brings MIG, along with Ampere and HBM2, down to a lower power level.
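To make the three MIG layouts concrete, here is a minimal sketch that models them as even splits of the A30's 24GB across four slices and builds, without running, the matching `nvidia-smi mig` command. It assumes NVIDIA's `<slices>g.<memory>gb` profile naming (`1g.6gb`, `2g.12gb`, `4g.24gb` on the A30), that MIG mode has already been enabled (for example with `nvidia-smi -i 0 -mig 1`), and that `-cgi` creates GPU instances while `-C` adds default compute instances. The helpers `uniform_layout` and `nvidia_smi_command` are hypothetical; mixed layouts are also possible on real hardware but are not modeled here.

```python
# Hypothetical model of the three uniform A30 MIG layouts listed in the spec table.
# Assumes 24GB HBM2 divided across 4 slices (6GB each) and NVIDIA's
# "<slices>g.<memory>gb" MIG profile naming. Commands are built, not executed.
TOTAL_MEMORY_GB = 24
TOTAL_SLICES = 4


def uniform_layout(instances: int) -> list[str]:
    """Return MIG profile names for splitting one A30 into equal instances."""
    if instances not in (1, 2, 4):
        raise ValueError("uniform A30 MIG layouts are 1, 2, or 4 instances")
    slices = TOTAL_SLICES // instances
    memory_gb = TOTAL_MEMORY_GB // instances
    return [f"{slices}g.{memory_gb}gb"] * instances


def nvidia_smi_command(instances: int, gpu_index: int = 0) -> str:
    """Build an nvidia-smi command that creates the GPU instances (-cgi)
    plus default compute instances (-C). MIG mode must already be enabled."""
    profiles = ",".join(uniform_layout(instances))
    return f"nvidia-smi mig -i {gpu_index} -cgi {profiles} -C"


for n in (1, 2, 4):
    print(n, uniform_layout(n), nvidia_smi_command(n))
```

Each layout uses all four slices, which is why the spec table lists 24GB, 12GB, and 6GB instances rather than arbitrary sizes.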

Final Words

The major impact of the NVIDIA A30 is that, by offering many high-end features at a lower TDP, it can be used in more systems. A 165W dual-slot PCIe card is easier for a system to cool than a 250W card. That expands the range of servers that can host these GPUs and opens up new use cases for bringing GPU inferencing to new environments.

For other STH GTC coverage, you can see the main site or learn more about the big NVIDIA Grace and BlueField DPU announcements, which we also covered in a video.
