Recently, there has been a lot of confusion in the industry around what is a DPU, or data processing unit, versus a SmartNIC. One of the key challenges here is that marketing organizations are chasing buzzwords, and in some cases avoiding them, which makes comparisons difficult. We are introducing the STH NIC Continuum in its first draft Q2 2021 edition to show how we are going to be classifying NICs at STH. We do a large number of NIC, server, and switch reviews, so we simply need a framework to discuss types of NICs, and that is what we have today.

What is a DPU?

Last year, we had a piece What is a DPU A Data Processing Unit Quick Primer. That had an accompanying video:

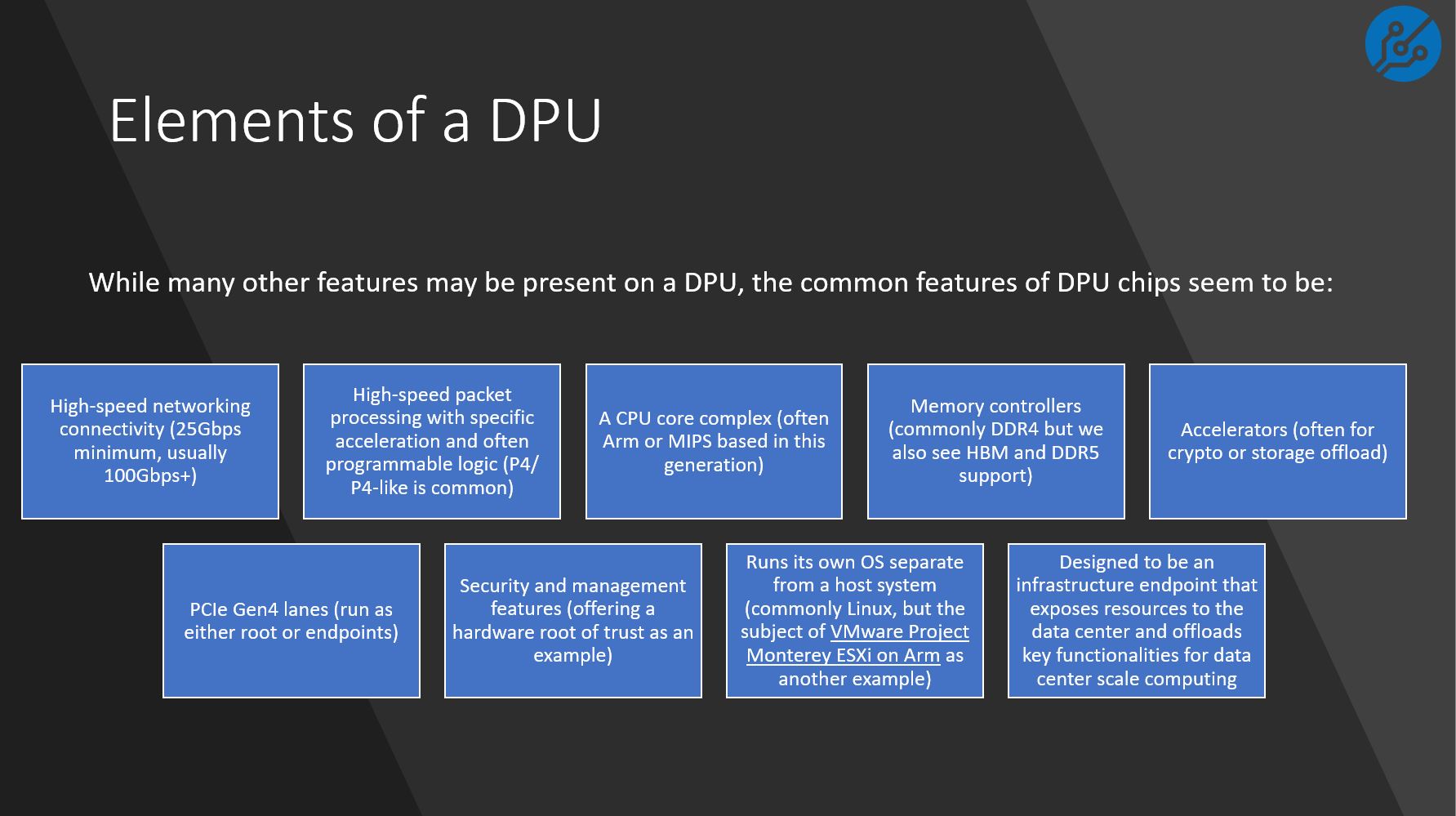

In that piece, we discussed some of the key characteristics that DPUs share. Among them are:

- High-speed networking connectivity (usually multiple 100Gbps-200Gbps interfaces in this generation)

- High-speed packet processing with specific acceleration and often programmable logic (P4/ P4-like is common)

- A CPU core complex (often Arm or MIPS based in this generation)

- Memory controllers (commonly DDR4 but we also see HBM and DDR5 support)

- Accelerators (often for crypto or storage offload)

- PCIe Gen4 lanes (run as either root or endpoints)

- Security and management features (offering a hardware root of trust as an example)

- Runs its own OS separate from a host system (commonly Linux, with VMware Project Monterey's ESXi on Arm as another example)

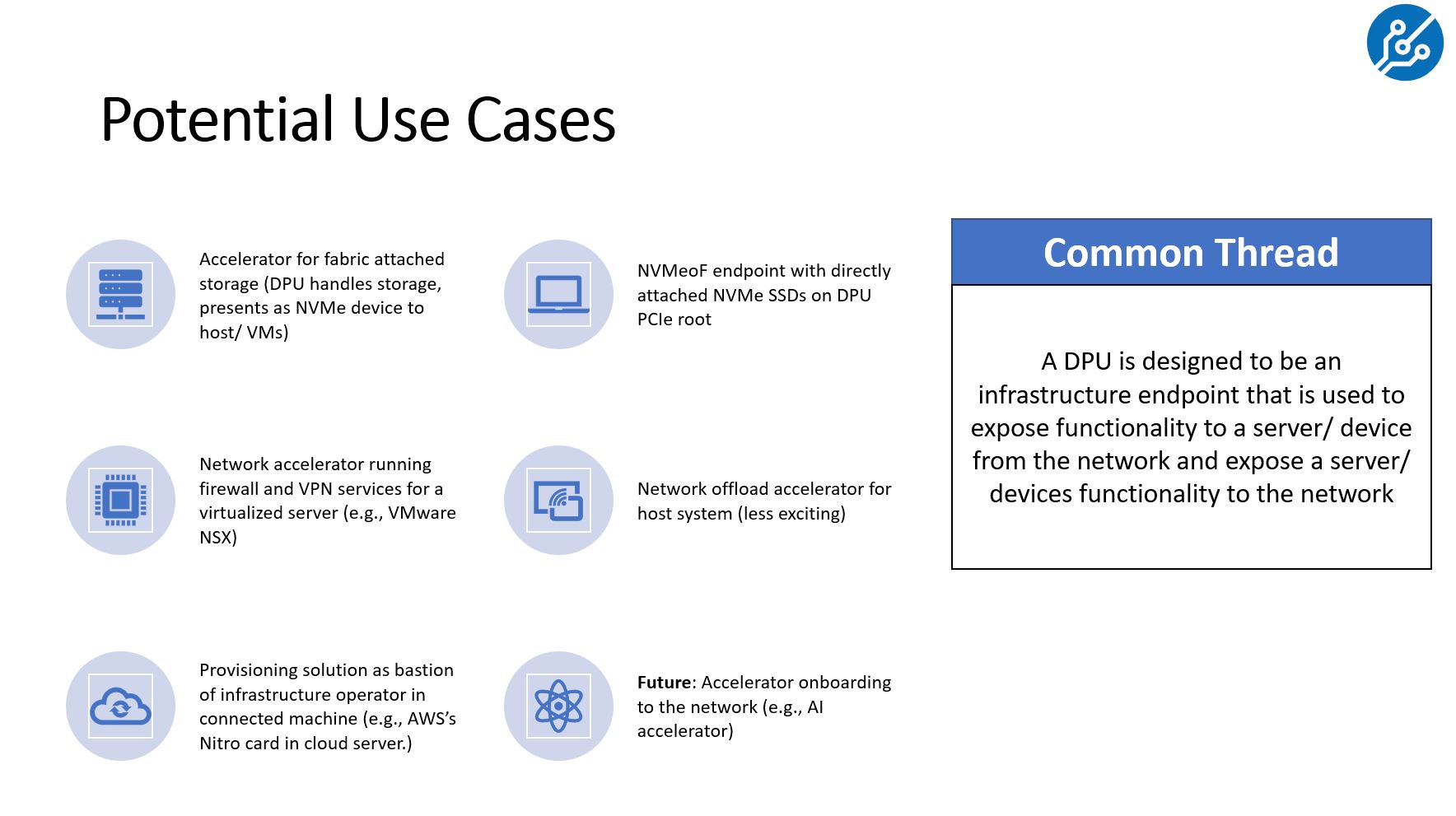

The common theme though is that a DPU is designed for disaggregating the infrastructure and application resources in the data center. The DPU is designed to be an infrastructure endpoint that both exposes network services to a server and to devices and at the same time securely exposes the server and device capabilities to the broader infrastructure.

With that framing, now we are going to introduce a framework for discussing SmartNIC versus DPU since that is an area of confusion.

SmartNIC vs DPU

One of the biggest questions we are asked, and that we see vendors struggle with, is how to classify SmartNIC vs DPU vs FPGA-based solutions. As such, we have put together a draft of what we see as the common points across the industry.

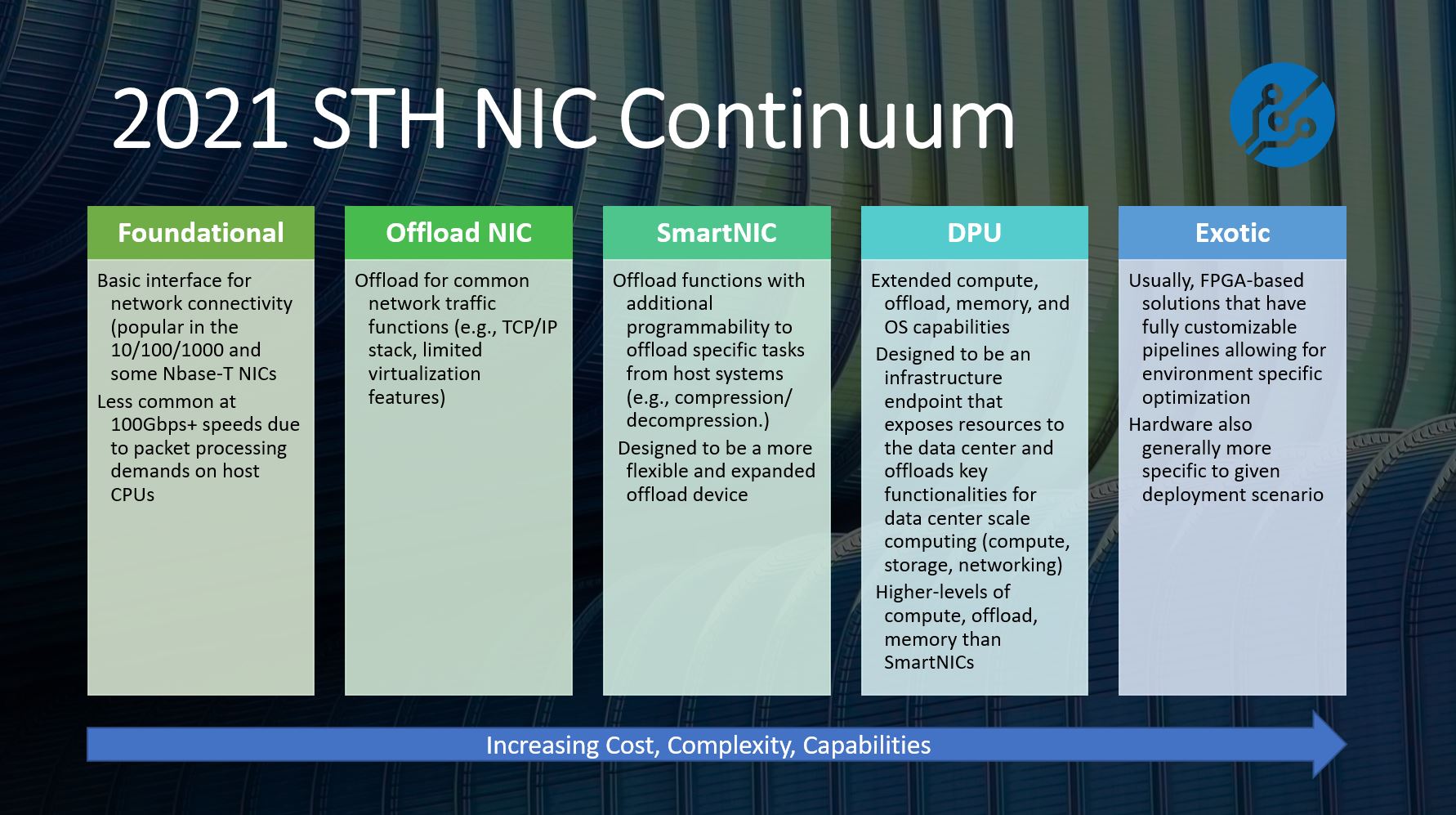

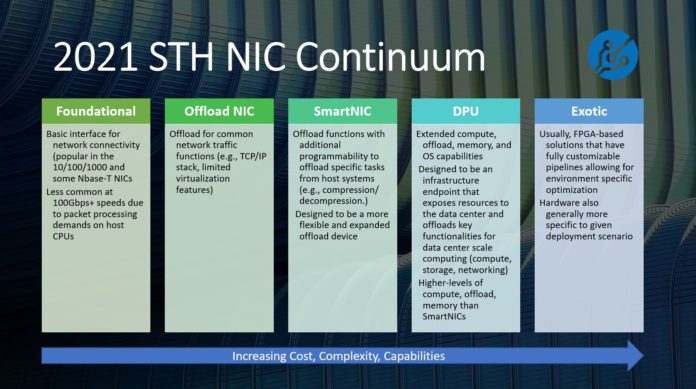

With that, here is the first 2021 STH NIC Continuum. This is something we expect to update over time, but the goal is to provide some framing on the question of what is an offload NIC vs SmartNIC vs DPU, and what constitutes a more exotic solution, usually based on FPGAs. Many vendors use these terms interchangeably, so we needed a structure to discuss solutions on STH.

Starting with the Foundational NIC, this is really the basic level of a network interface today. Almost all modern NICs have some very basic offloads such as IPv4/ IPv6 and TCP/UDP checksum offloads, but Foundational NICs are designed to enable low-cost network ports forgoing many of the higher-end offload features that add to cost and complexity.
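To make the "very basic offloads" concrete, here is a minimal software sketch of the 16-bit ones' complement checksum (described in RFC 1071) that IPv4, TCP, and UDP use. This is the per-packet work a host CPU would otherwise do when a NIC does not offload it. The function name is ours for illustration and is not tied to any NIC driver or vendor API.

```python
def internet_checksum(data: bytes) -> int:
    """Return the 16-bit ones' complement checksum used by IPv4, TCP, and UDP."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a trailing zero byte

    total = 0
    for i in range(0, len(data), 2):
        # Combine two bytes into one big-endian 16-bit word and accumulate.
        total += (data[i] << 8) | data[i + 1]

    # Fold any carries above bit 15 back into the low 16 bits.
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)

    return ~total & 0xFFFF


if __name__ == "__main__":
    # Sample IPv4 header with the checksum field (bytes 10-11) zeroed out.
    header = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
    print(hex(internet_checksum(header)))
```

A receiver can validate a header by running the same function over the header with the checksum field filled in; a result of zero means the header is intact.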

Foundational NICs are still a very important piece of the industry. However, as data rates rise and processing data flows requires more compute, most of the higher-speed NICs we see are Offload NICs.

Offload NICs are generally found in families that support 100Gbps and faster networking. Some of these families also incorporate lower-speed ports but network adapters are often designed in generations, and the 100GbE generation created a clear need for a new level of offload. At those data rates, it becomes critically important to have the NIC handle networking functions in hardware and independent of the CPU. That includes some virtualization functions being offloaded to the NIC as well.
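A rough back-of-the-envelope sketch shows why offload matters at these speeds. It assumes worst-case minimum-size 64-byte Ethernet frames, the standard 8 bytes of preamble and 12 bytes of inter-frame gap on the wire, and a hypothetical 3GHz host core; the function names and the clock speed are illustrative assumptions, not measurements.

```python
PREAMBLE_BYTES = 8          # preamble plus start-of-frame delimiter
INTERFRAME_GAP_BYTES = 12   # minimum idle time between frames, in byte times


def max_packets_per_second(link_bps: float, frame_bytes: int = 64) -> float:
    """Theoretical packet rate for back-to-back frames of the given size."""
    wire_bits = (frame_bytes + PREAMBLE_BYTES + INTERFRAME_GAP_BYTES) * 8
    return link_bps / wire_bits


def cycles_per_packet(link_bps: float, core_hz: float = 3.0e9,
                      frame_bytes: int = 64) -> float:
    """CPU cycles a single core could spend per packet at line rate."""
    return core_hz / max_packets_per_second(link_bps, frame_bytes)


if __name__ == "__main__":
    for gbps in (10, 25, 100, 200):
        bps = gbps * 1e9
        pps = max_packets_per_second(bps)
        print(f"{gbps:>3}Gbps: {pps / 1e6:7.1f} Mpps, "
              f"{1e9 / pps:5.2f} ns/packet, "
              f"{cycles_per_packet(bps):5.1f} cycles/packet at 3GHz")
```

Under these assumptions, a single 100Gbps port can deliver roughly 148.8 million minimum-size packets per second, which leaves a 3GHz core only about 20 cycles per packet. Real traffic uses larger frames, but the arithmetic illustrates why hardware handling becomes necessary in this generation.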

The goal of the Offload NIC is to free the CPU from network processing as much as possible so that more CPU resources are available for running applications. There may be some limited programmability, but to a lesser extent than SmartNICs.

Getting to the SmartNIC vs DPU discussion, the key innovation with SmartNICs over Offload NICs is adding a more flexible programmable pipeline, which is something that DPUs incorporate as well. The “SmartNIC” term was used well before the “DPU” term was adopted by the industry, which adds to the confusion in the market. When we looked over the traditional SmartNIC and DPU materials, a clear change in the conceptual model emerged. We are thus defining SmartNICs as NICs that have programmable pipelines to further enhance the offload capabilities from the host CPU.

In other words, although many may run Linux and have their own CPU cores, the function of a SmartNIC is to alleviate the burden from the host CPU as part of the overall server. In that role, SmartNICs differ from DPUs as DPUs seem to be more focused on being independent infrastructure endpoints.

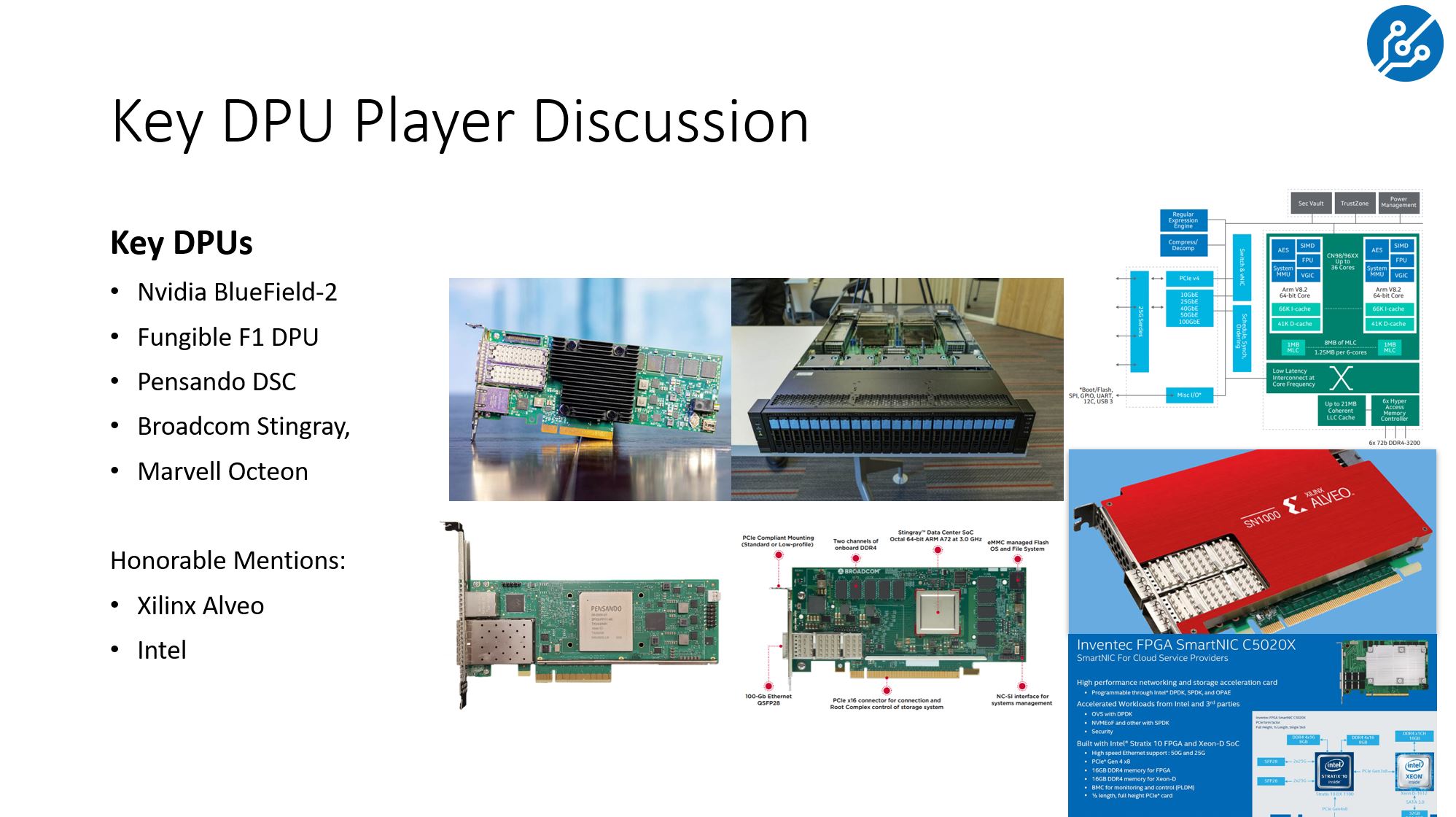

When we surveyed what is being called a “DPU” today, offload and programmability are certainly key capabilities. The big difference was that vendors are designing the DPU in the spirit of the AWS Nitro platform to be infrastructure endpoints. Those infrastructure endpoints may attach storage to the network directly (e.g. with the Fungible products), they may be a secure onramp to the network (e.g. with the Pensando DSC products/ Marvell Octeon products), or they may be more general-purpose endpoints that deliver compute, network, and storage securely to and from the overall infrastructure.

This may seem like a nuanced distinction, but when we looked at what is in the market, there is a clear split between products designed to be higher-end offload (SmartNICs) and independent network endpoints delivering services (DPUs). With that, some of the confusion comes from the higher-end products marketing themselves as SmartNICs or DPUs, but we think they should be their own category that we are calling “Exotic.”

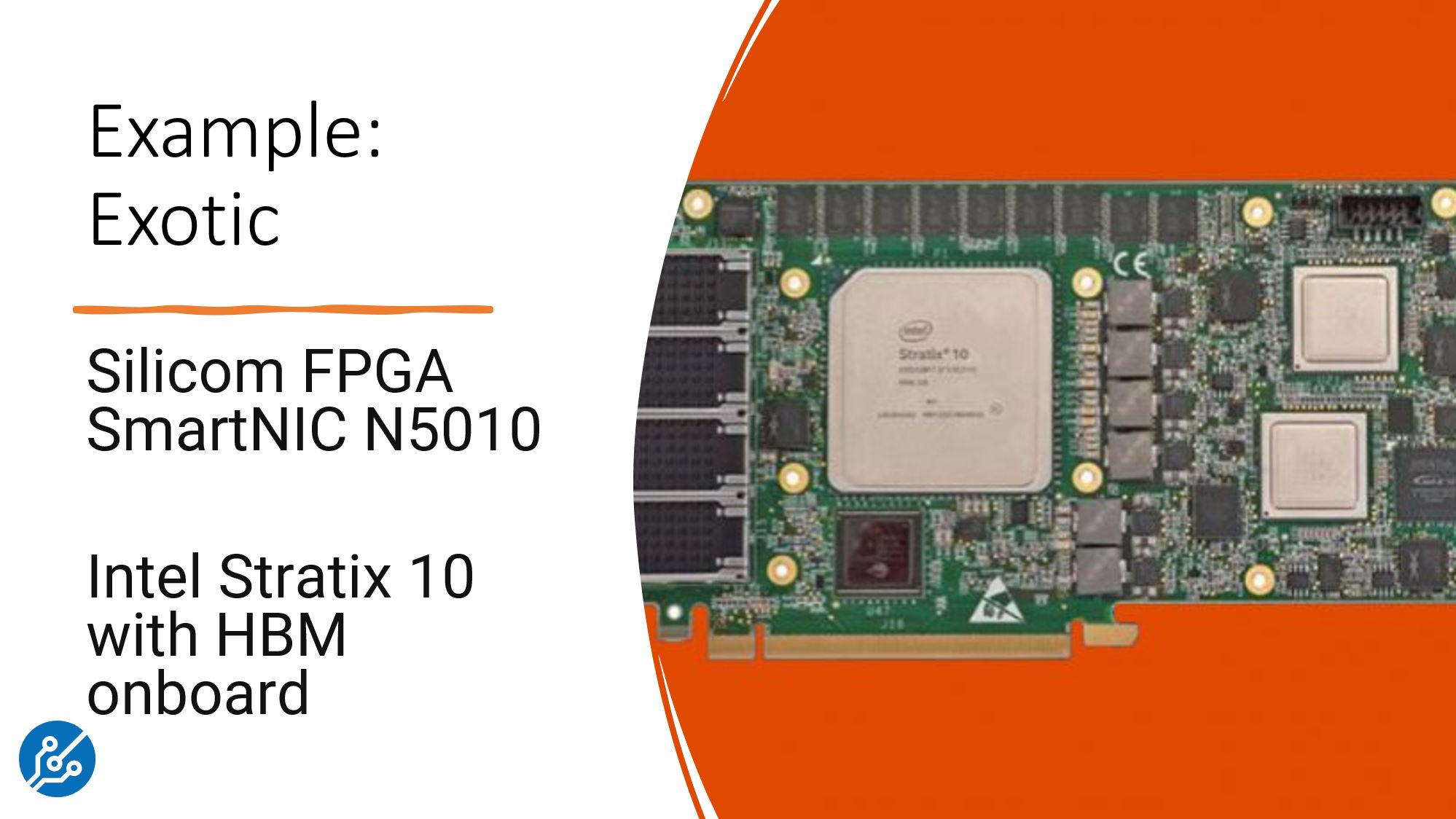

The category we are currently calling Exotic NICs covers solutions that generally have enormous flexibility. Often, that flexibility is enabled by utilizing large FPGAs. With FPGAs, organizations can create their own custom pipelines for low-latency networking, and can even make applications such as AI inferencing part of the solution without needing to utilize the host CPU.

Generally, though, there is a major difference between the SmartNIC/ DPU and the Exotic NIC. That flexibility and programmability mean that those organizations deploying Exotic NICs will have teams dedicated to extracting value from the NIC through programming new logic for the FPGA. With flexibility comes responsibility, and that is why these solutions need to be categorized outside of the traditional SmartNIC and DPU categories. In many domains, solutions categorized as exotic can yield impressive results, but they also carry additional design and maintenance considerations that make them attractive mainly for high-end applications.
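As a rough illustration of how the five categories relate, the continuum can be sketched as a simple decision procedure. This is a deliberately simplified sketch and not a formal STH definition: the trait names, the `NicTraits` type, and the order of checks are our assumptions, and real products will blur these lines.

```python
from dataclasses import dataclass
from enum import Enum


class NicClass(Enum):
    FOUNDATIONAL = "Foundational NIC"
    OFFLOAD = "Offload NIC"
    SMARTNIC = "SmartNIC"
    DPU = "DPU"
    EXOTIC = "Exotic NIC"


@dataclass
class NicTraits:
    """Hypothetical trait flags, named here only for illustration."""
    advanced_offloads: bool = False        # beyond basic checksum offloads
    programmable_pipeline: bool = False    # e.g. P4 or P4-like
    infrastructure_endpoint: bool = False  # designed to stand apart from the host
    large_fpga_custom_logic: bool = False  # user teams build their own pipelines


def classify(traits: NicTraits) -> NicClass:
    """Place a device on the continuum, checking the most flexible class first."""
    if traits.large_fpga_custom_logic:
        return NicClass.EXOTIC
    if traits.programmable_pipeline and traits.infrastructure_endpoint:
        return NicClass.DPU
    if traits.programmable_pipeline:
        return NicClass.SMARTNIC
    if traits.advanced_offloads:
        return NicClass.OFFLOAD
    return NicClass.FOUNDATIONAL


if __name__ == "__main__":
    example = NicTraits(advanced_offloads=True, programmable_pipeline=True)
    print(classify(example).value)
```

Note that running Linux or having CPU cores is intentionally not a trait here, since both SmartNICs and DPUs may have them. In this sketch, what separates the two is whether the device is designed as an independent infrastructure endpoint.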

Final Words

The STH NIC Continuum is not perfect, but we need a way to categorize solutions so that we can evaluate and present them to our readers. In the SmartNIC vs DPU video above, we go into some of the key DPU players, and also some “honorable mentions” for FPGA solutions as a way to discuss the current state of the market. Within the DPU family, we see a lot of work is being done to optimize for specific use cases so we wanted to go into what some of the solutions are targeting as the market matures.

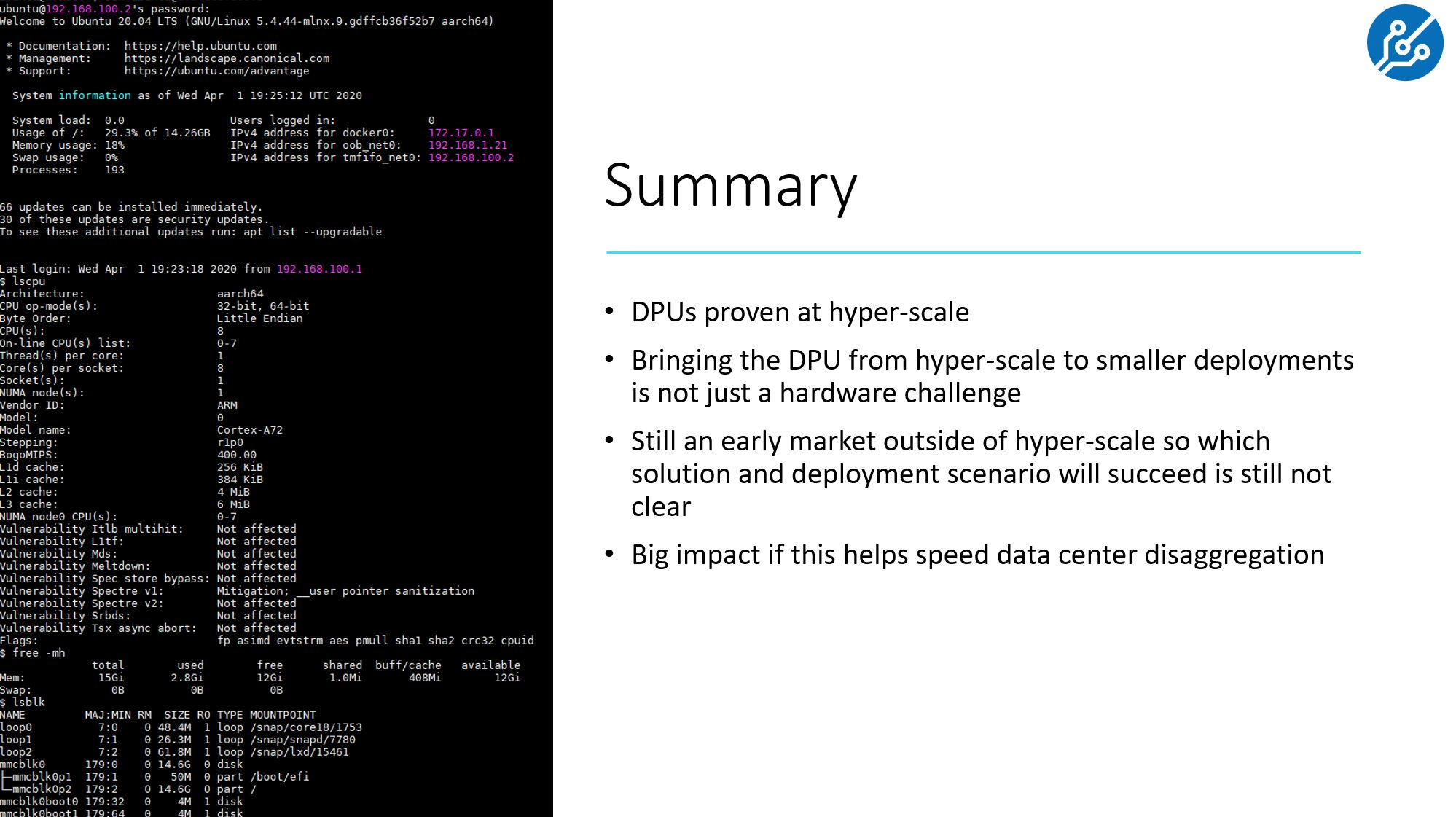

On STH, we are starting to do more DPU content, including simply A Quick Look at Logging Into a Mellanox NVIDIA BlueField-2 DPU. This is an emerging class of device and capabilities for the data center so we want to keep adding content to help our readers understand and evaluate offerings in this realm.

Of course, if you have feedback on the first draft of the 2021 STH NIC Continuum, feel free to leave that either here or in the comments on the video. The goal is to help our readers categorize and then evaluate SmartNIC vs DPU vs Exotic (FPGA) solutions, since the industry is currently using terms without defining what they mean. Because we cover and review many of these solutions, we need some alignment. With the STH NIC Continuum, if something is labeled a “SmartNIC” but is really more of what we would consider an Exotic solution, we can demonstrate the differences.

Hi Patrick, thanks for this. Your bullet point description of a DPU helps characterise the thing.

However, I am still left thinking that a DPU is just a computer in a highly proprietary form factor. And, worse, a highly proprietary form factor computer that needs to be plugged into a PCIe slot in a standard computer in order to be useful!

NVIDIA/Mellanox clearly wants to make entire computers but doesn’t have an x86 license… this is the result.

Could DPUs present mounted file-systems to the server, removing the need for kernel modules or FUSE? Would there be any performance pro/cons to doing so?

Eh, I am still somewhat skeptical, looks quite niche. I am not sure if going beyond having a fast and robust offload and RDMA-over-Ethernet is really worth it when fast cores and PCIE 4 lanes are cheap with Epyc Rome.

Also these things look way too proprietary.

@Y0s

The Fungible DPU can present a remote NVMe device as a local device.

@Modprobe

Most network innovation started as proprietary solutions. Once proved, the tech was opened up to expand the market.

Have you guys tried to implement any storage functions like RAID, encryption, compression, or dedupe on top of NVMe? The x86 I/O penalty wipes out all the advantage of NVMe.

The advantage of a remote disk appearing as local is that your data is safe and available to be mounted on a different server. It enables a whole different kind of server and app architecture.

I am sure similar features with GPU, FPGA, and RAM will start making an appearance.

@InfraGuy: Can you describe the “x86 I/O penalty” with more details?

The Fungible S1 DPU is a 200Gbps chip (with 16 MIPS cores and many other accelerators) meant to be used in PCIe cards (2x100GE) working with the server's main CPU.

The Fungible F1 DPU is an 800Gbps chip (with 52 MIPS cores + accelerators) meant for building appliances, like the FS1600 storage node. The F1 is the main or only processor in the box. In the FS1600 example, it delivers NVMe-oF from 24 SSDs to the network on 12x100GE ports with no additional NICs and accelerates storage functions like encryption, compression, etc.

emerth – you can actually use these DPUs without host systems. So for example, you can use the PCIe connector and connect NVMe SSDs and then use the DPU to connect to the network. Likewise, with the Fungible F1 DPU, there are two DPUs and no traditional x86/ Arm server.

@Jennes what he meant by the x86 I/O penalty is the overhead of the operating system when you use software RAID, encryption, compression, or other such operations.

The processing adds a lot of latency and CPU usage, and in the end you are limited by the DDR4 memory bandwidth of your CPU.

Offloading engines for storage are critical if you want to not kill your NVMe performance.

@Patrick, thanks for explaining that. My mistake.

@InfraGuy – so currently I do NVMe over fabrics using late model Mellanox QDR IB cards and stock Linux 5.x kernel modules. Can these things do that with stock Linux, or do I need 3rd party programming done?

Hi Patrick, I wish we’d had a chance to get into this discussion when we bumped into each other at the OCP Summit. Your idea of segmenting Ethernet adapters by compute capability and cost is interesting. I just have two challenges with it: (1) There are theoretical capabilities and complexity, and there are practical ones. What I mean by that is a PCIe board with an FPGA and Ethernet port could be programmed by the end user to do exactly the same function as a PCIe board with an ASIC like the Netronome one. It would not make a lot of sense from a cost perspective, but it is possible, and we have seen that they often have the same end users. (2) Mellanox shipped the BlueField-branded Ethernet adapter with an Arm SoC on it for many years, branding it a SmartNIC. The NVIDIA rebranding to a DPU seems like a purely marketing-driven attempt to differentiate from Netronome. I think it is possible to have a more capable device and a less capable device of the same type, just as not all GPUs have the same number of cores and features. We don’t always need to come up with new ways to categorize them. At Omdia we’ve used the term programmable NIC for a long time, and I am actively considering different ways to taxonomize the marketplace, but I am finding it difficult to contradict our own view that programmability is the key feature and the common feature across what you call SmartNICs, DPUs, and exotics.

Hi Vlad. Netronome, I think, is not excelling in the market. My sense is that Netronome is not a top 5 NVIDIA competitor in the DPU space. On the programmability side, all of these can be considered “programmable”, even those with ASICs with P4 and P4-like models. While one can program an FPGA, that is a different level of integration and effort versus a DPU, but it can also yield fully customizable pipelines. That is why it needs to be in a different segment. You are correct that this continuum spans both technical and market/ implementation aspects. Happy to sit down and go through them in more detail since I know several analysts have been using this model recently.

Hi Patrick,

Very nice blog! I’d like to share your blog and one of the pictures with my coworker. Is there any copyright concern?

Thanks,